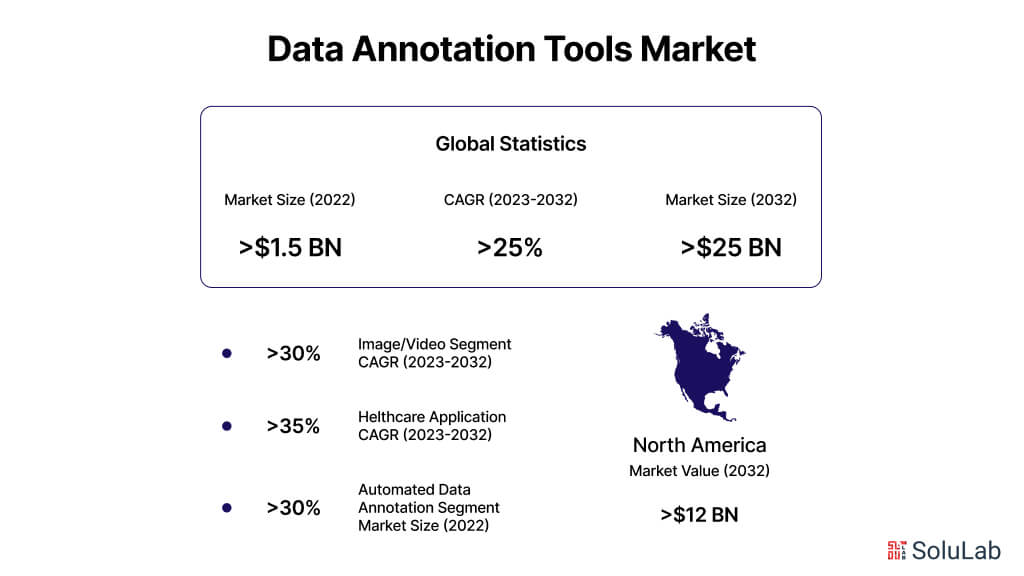

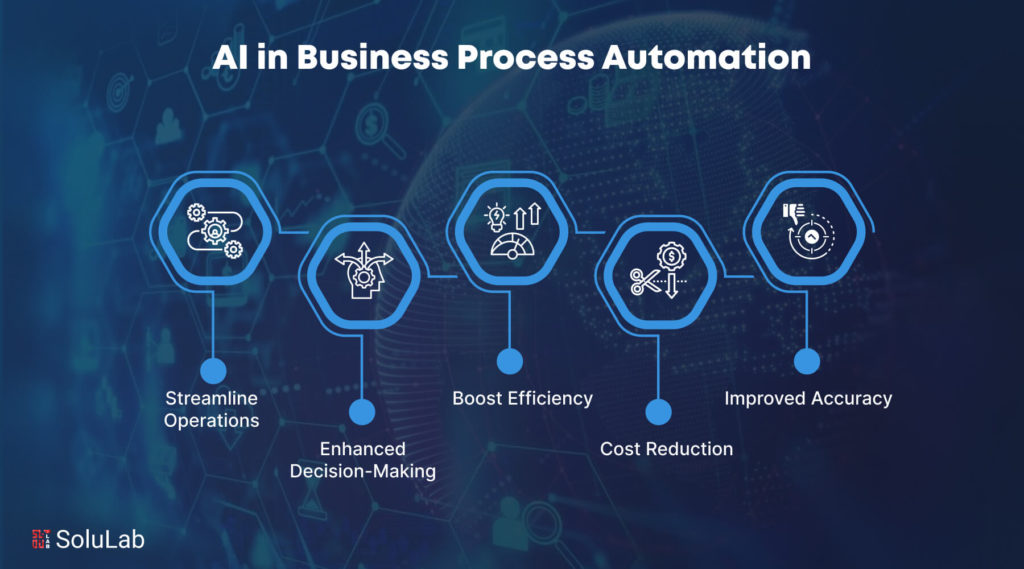

AI in business process automation takes over monotonous operations, freeing employees to concentrate on more strategic tasks while improving efficiency, accuracy, processing speed, decision-making, and cost savings.

Business process automation (BPA) and artificial intelligence (AI) are two technologies that are changing the face of contemporary business. Integrating them is becoming increasingly important as businesses aim for clarity, efficiency, and creativity.

Implementing AI in BPA can lead to substantial cost savings. Amazon’s investment in AI and robotics has reduced operational costs by 25% in its Shreveport fulfillment center. In this blog, we’ll explore AI in business process automation use cases, benefits, challenges, and more.

AI and BPA: Complementary Technologies

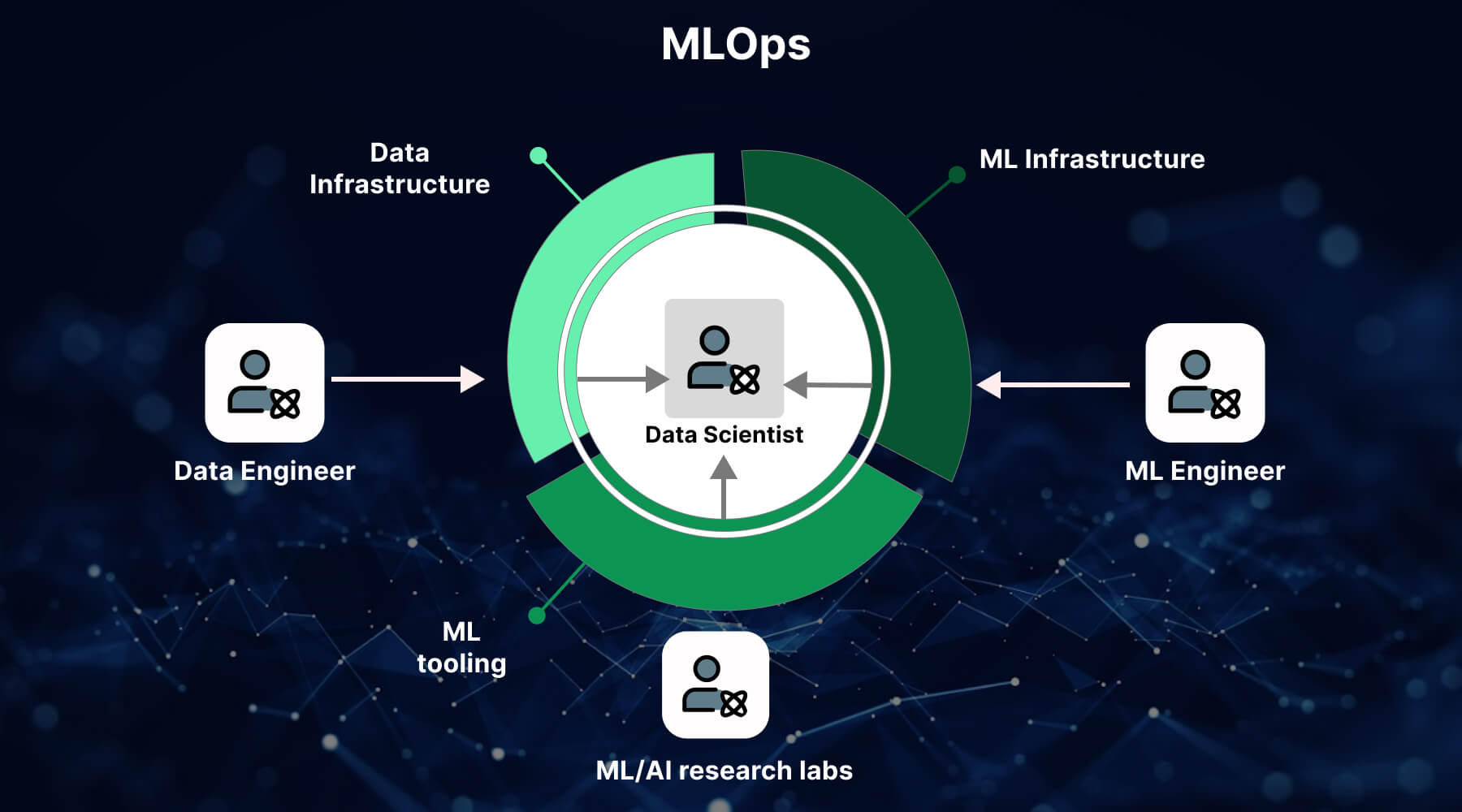

While AI and BPA have distinct applications and capabilities, they converge in several key areas. Both aim to enhance the business through automation, depend on data, must integrate with existing systems, scale with demand, evolve continuously, and support decision-making. Their differences still matter: BPA focuses on rule-based automation, while AI adds more advanced capabilities such as machine learning and computer vision. Combined, the two create a synergistic effect that optimizes business potential and drives innovation.

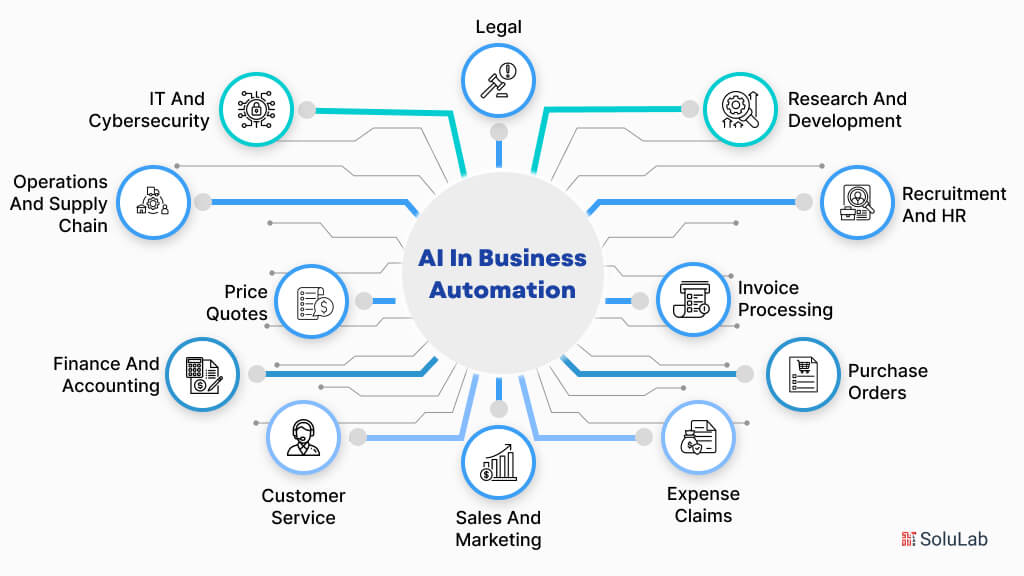

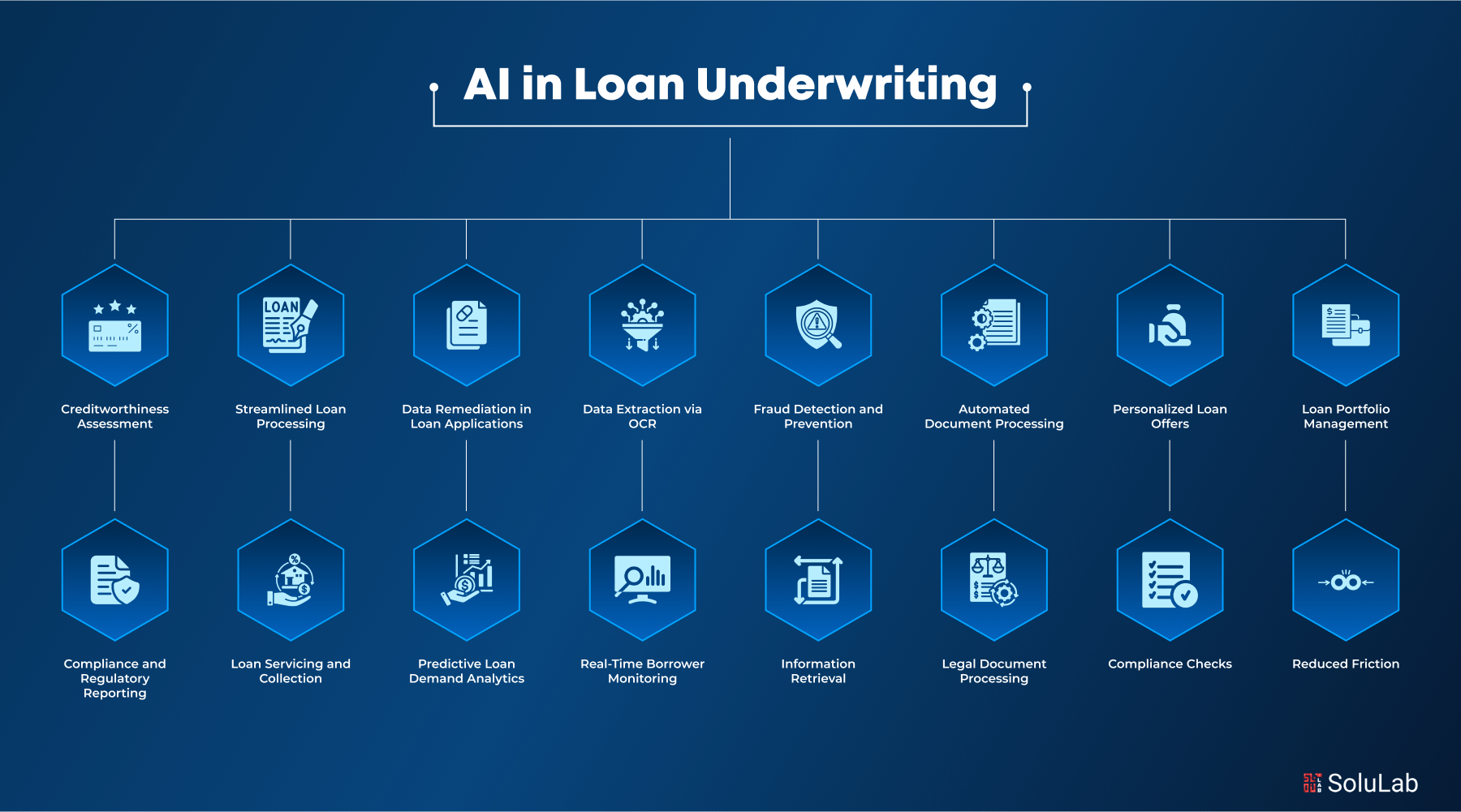

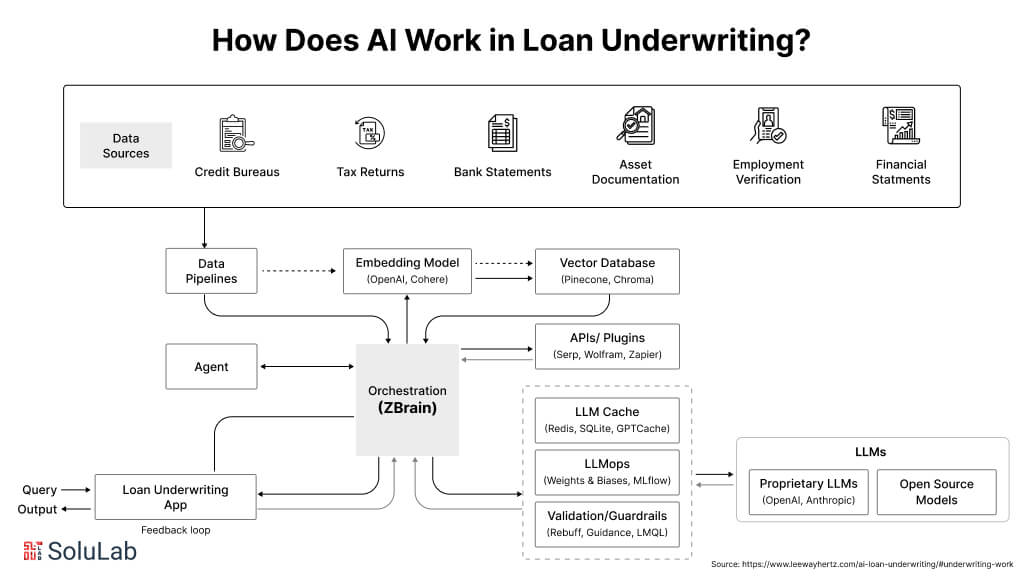

Use Cases Of AI In Business Automation

In contemporary business, AI and BPA can be combined to support a range of use cases, including:

1. Research And Development:

AI plays a crucial role in automating R&D processes across sectors. It aids idea generation and innovation by analyzing market trends, consumer behavior, and competition. AI can also automate project management tasks such as scheduling, resource allocation, and progress tracking, which keeps teams coordinated. In addition, it automates the collection and analysis of market data, customer feedback, and competitor information, providing valuable insights for strategic R&D decisions.

2. Recruitment And HR:

Automating business processes with AI can transform HR by streamlining tasks such as onboarding, job advertising, compliance checks, timesheet tracking, exit interviews, and performance management. This saves time and lets HR teams focus on critical areas like employee training, culture development, and wellness programs. Key use cases for AI agents in HR include automating resume screening, enhancing the employee onboarding experience, and using predictive analytics to spot early indicators of turnover so that retention problems can be addressed proactively. By using AI, organizations can strengthen their HR capabilities, streamline processes, improve efficiency, and foster a better employee experience.
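To make the resume-screening idea concrete, here is a minimal Python sketch of a keyword-overlap shortlist. It does not reflect how commercial AI screening tools work internally. It assumes a hypothetical list of single-word required skills and measures what fraction of them appear in each resume. Any real screening step should keep a recruiter in the loop and be audited for bias.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase text and split it into word tokens (keeps symbols like 'c++' and 'c#')."""
    return set(re.findall(r"[a-z0-9+#]+", text.lower()))

def skill_match_score(resume_text: str, required_skills: list[str]) -> float:
    """Fraction of required single-word skills that appear in the resume (0.0 to 1.0)."""
    if not required_skills:
        return 0.0
    tokens = tokenize(resume_text)
    matched = [skill for skill in required_skills if skill.lower() in tokens]
    return len(matched) / len(required_skills)

def shortlist(resumes: dict[str, str], required_skills: list[str],
              threshold: float = 0.5) -> list[str]:
    """Candidate IDs scoring at or above the (hypothetical) threshold, best first.

    The output is a suggestion for a recruiter to review, not a hiring decision."""
    scores = {cid: skill_match_score(text, required_skills) for cid, text in resumes.items()}
    passing = [cid for cid, score in scores.items() if score >= threshold]
    return sorted(passing, key=lambda cid: scores[cid], reverse=True)

resumes = {
    "A-101": "Python developer with SQL and Docker experience",
    "A-102": "Graphic designer skilled in Figma",
}
print(shortlist(resumes, ["python", "sql", "aws"]))  # ['A-101']
```

Real screening models learn from far richer signals than keyword overlap. That is exactly why the bias audits discussed later in this article matter.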

3. Invoice Processing:

Business process automation streamlines invoice processing, reducing manual errors and improving efficiency. BPA systems can extract relevant invoice information, automate approval workflows, integrate AI with ERP systems, perform three-way matching (checking each invoice against its purchase order and the goods receipt), handle exceptions, and provide an audit trail. Automating invoice processing frees the accounts team to focus on high-priority tasks, improves overall financial accuracy, and simplifies audits and compliance. To accelerate digitization, finance teams can standardize invoice layouts and line-item fields before feeding them into OCR/RPA workflows. SMEs without a full ERP can jumpstart this effort with free, customizable invoice templates for Excel that include formula-driven subtotals and totals, professional formatting, and printable layouts. A consistent template reduces errors during data capture, speeds approvals, and creates cleaner datasets for AI-driven analytics.
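Three-way matching, one of the steps listed above, is easy to picture as code. The Python sketch below illustrates the idea under simple assumptions: hypothetical `LineItem` records keyed by SKU and a made-up 2% price tolerance. It is not the logic of any particular ERP or BPA product. Each invoice line is checked against what was ordered and what was received, and any mismatch becomes an exception for a person to handle.

```python
from dataclasses import dataclass

@dataclass
class LineItem:
    sku: str
    quantity: int
    unit_price: float

def three_way_match(po_lines: list[LineItem], received: dict[str, int],
                    invoice_lines: list[LineItem], price_tolerance: float = 0.02) -> list[str]:
    """Compare each invoice line with the purchase order and the goods receipt.

    Returns exception messages; an empty list means the invoice can go straight
    to payment approval. The 2% price tolerance is a hypothetical default."""
    po_by_sku = {line.sku: line for line in po_lines}
    exceptions = []
    for line in invoice_lines:
        ordered = po_by_sku.get(line.sku)
        if ordered is None:
            exceptions.append(f"{line.sku}: not on purchase order")
            continue
        qty_received = received.get(line.sku, 0)
        if line.quantity > ordered.quantity:
            exceptions.append(f"{line.sku}: billed {line.quantity}, ordered {ordered.quantity}")
        if line.quantity > qty_received:
            exceptions.append(f"{line.sku}: billed {line.quantity}, received {qty_received}")
        if abs(line.unit_price - ordered.unit_price) > ordered.unit_price * price_tolerance:
            exceptions.append(f"{line.sku}: price {line.unit_price:.2f} vs PO {ordered.unit_price:.2f}")
    return exceptions

po = [LineItem("A1", 10, 5.00), LineItem("B2", 4, 20.00)]
invoice = [LineItem("A1", 10, 5.05), LineItem("B2", 4, 20.00)]
print(three_way_match(po, {"A1": 10, "B2": 3}, invoice))  # ['B2: billed 4, received 3']
```

In a real workflow the AI part usually sits upstream, extracting the line items from scanned invoices. The matching itself stays deterministic so that its decisions are easy to audit.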

4. Purchase Orders:

AI-powered RFx tools and business process automation software significantly enhance procurement by digitizing purchase order forms and connecting them to databases. This eliminates manual data entry, cutting repetitive work and improving the accuracy and speed of the procurement process. Key benefits include automated data entry, real-time inventory updates, streamlined vendor communication, expense tracking, and budget management. With BPA software, organizations can optimize procurement operations, minimize errors, enhance transparency, and build stronger supplier relationships, ultimately saving costs and improving supply chain efficiency.
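The budget-management benefit above can be sketched as a validation step that runs as soon as a digitized purchase order is submitted. This Python example is a minimal illustration with hypothetical names (`APPROVED_VENDORS`, `DepartmentBudget`, `submit_purchase_order`). It checks the vendor and line items, then commits the order total against the department's remaining budget.

```python
from dataclasses import dataclass

APPROVED_VENDORS = {"acme-supplies", "globex"}  # hypothetical vendor master data

@dataclass
class DepartmentBudget:
    limit: float
    committed: float = 0.0

    @property
    def remaining(self) -> float:
        return self.limit - self.committed

def submit_purchase_order(budget: DepartmentBudget, vendor: str,
                          items: list[tuple[str, int, float]]) -> tuple[bool, str]:
    """Validate a digitized PO and, if it passes, commit its total against the budget.

    `items` holds (sku, quantity, unit_price) tuples captured from the PO form."""
    if vendor not in APPROVED_VENDORS:
        return False, f"vendor '{vendor}' is not on the approved list"
    if not items or any(qty <= 0 or price < 0 for _, qty, price in items):
        return False, "order needs at least one line with positive quantity and non-negative price"
    total = sum(qty * price for _, qty, price in items)
    if total > budget.remaining:
        return False, f"total {total:.2f} exceeds remaining budget {budget.remaining:.2f}"
    budget.committed += total  # spend tracking updates the moment the PO is approved
    return True, f"approved: {total:.2f} committed"

marketing = DepartmentBudget(limit=1000.0)
print(submit_purchase_order(marketing, "acme-supplies", [("PAPER", 10, 4.5), ("TONER", 2, 60.0)]))
# (True, 'approved: 165.00 committed')
```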

5. Expense Claims:

A BPA-based expense management system simplifies expense reporting and ensures compliance with organizational guidelines. It streamlines the submission and approval of expense claims, automates policy compliance checks, provides real-time tracking and visibility, and helps prevent fraud through data analytics. For budget management, BPA offers automated workflows for budget approvals, streamlining financial planning and reducing manual workload. Together, these features improve accuracy, save time, and increase transparency in expense and budget management.
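Automated policy compliance checks usually come down to explicit rules applied to every claim before anyone looks at it. The Python sketch below assumes a hypothetical policy: per-category spending caps and a receipt requirement above a set amount. Compliant claims are approved automatically, and everything else is routed to a manager along with the reasons.

```python
from dataclasses import dataclass

# Hypothetical policy values; real ones come from the organization's expense policy.
CATEGORY_LIMITS = {"meals": 75.0, "lodging": 250.0, "travel": 500.0}
RECEIPT_REQUIRED_ABOVE = 25.0

@dataclass
class ExpenseClaim:
    employee: str
    category: str
    amount: float
    has_receipt: bool

def check_policy(claim: ExpenseClaim) -> list[str]:
    """Return policy violations; an empty list means the claim is compliant."""
    violations = []
    limit = CATEGORY_LIMITS.get(claim.category)
    if limit is None:
        violations.append(f"unknown category '{claim.category}'")
    elif claim.amount > limit:
        violations.append(f"{claim.category} claim {claim.amount:.2f} exceeds limit {limit:.2f}")
    if claim.amount > RECEIPT_REQUIRED_ABOVE and not claim.has_receipt:
        violations.append("receipt required")
    return violations

def route(claim: ExpenseClaim) -> str:
    """Auto-approve compliant claims and send anything else to a manager."""
    return "auto-approved" if not check_policy(claim) else "manager review"

print(check_policy(ExpenseClaim("e-17", "meals", 90.0, has_receipt=False)))
# ['meals claim 90.00 exceeds limit 75.00', 'receipt required']
```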

6. Sales And Marketing:

Business process automation software offers substantial advantages in sales and marketing by automating routine tasks and optimizing resource allocation. In sales, automation enables quick, accurate price quotes and efficient approvals, leading to faster deal closures and higher customer satisfaction. In marketing, it powers automated email campaigns, lead scoring, and lead nurturing, improving customer engagement, conversion rates, and revenue. BPA also streamlines internal requests such as time off, making them easy to submit and giving employees visibility into the approval process. Overall, BPA solutions enhance operational efficiency, improve the customer experience, and drive better business outcomes.
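Lead scoring, mentioned above, can start as a simple points model before any machine learning is involved. In this Python sketch the signals, point values, and the marketing-qualified-lead threshold are all hypothetical. AI-based scoring would instead learn the weights from historical conversion data.

```python
# Hypothetical point values; a trained model would learn weights from past conversions.
SCORING_RULES = {
    "visited_pricing_page": 20,
    "opened_last_email": 5,
    "requested_demo": 40,
    "company_size_over_100": 15,
}
MQL_THRESHOLD = 50  # hypothetical cut-off for handing a lead to sales

def score_lead(signals: set[str]) -> int:
    """Sum the points for every recognized activity or attribute of a lead."""
    return sum(points for signal, points in SCORING_RULES.items() if signal in signals)

def next_action(signals: set[str]) -> str:
    """Route high-scoring leads to sales and keep the rest in a nurture campaign."""
    return "assign to sales" if score_lead(signals) >= MQL_THRESHOLD else "add to nurture campaign"

signals = {"visited_pricing_page", "requested_demo"}
print(score_lead(signals), next_action(signals))  # 60 assign to sales
```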

7. Price Quotes:

Automating business processes upgrades the quoting process: price quotes can be generated rapidly and sent automatically for managerial review. This speeds up the process, improves the customer experience, and supports real-time pricing for e-commerce, customized quotes for services, and tiered pricing for bulk orders. BPA software also automates the discount approval process, ensuring that discounts align with the company's pricing strategy. Overall, BPA software streamlines quoting, improves customer satisfaction, and increases the likelihood of purchase.
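Tiered pricing and discount approval, both described above, fit naturally into one quoting function. The tiers and the 10% auto-approval ceiling in this Python sketch are hypothetical placeholders. The point is the pattern: the system prices the quote automatically and flags only the discounts that exceed policy for a manager.

```python
# Hypothetical volume tiers: (minimum quantity, unit price), checked highest tier first.
PRICE_TIERS = [(500, 8.00), (100, 9.00), (1, 10.00)]
MAX_AUTO_DISCOUNT = 0.10  # hypothetical: larger discounts go to a manager

def tier_price(quantity: int) -> float:
    """Unit price for the highest tier the quantity qualifies for."""
    for min_qty, price in PRICE_TIERS:
        if quantity >= min_qty:
            return price
    raise ValueError("quantity must be at least 1")

def build_quote(quantity: int, discount: float = 0.0) -> dict:
    """Price a quote and flag whether the requested discount needs approval."""
    unit = tier_price(quantity)
    total = round(quantity * unit * (1 - discount), 2)
    return {"quantity": quantity, "unit_price": unit, "total": total,
            "needs_approval": discount > MAX_AUTO_DISCOUNT}

print(build_quote(150, discount=0.05))
print(build_quote(600, discount=0.15)["needs_approval"])  # True
```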

8. Customer Service:

AI-driven technologies are upgrading customer service. Chatbots provide instant responses to common questions, virtual assistants offer personalized assistance, business process automation tools streamline ticket management, and sentiment analysis extracts insights from customer feedback. These tools enhance customer engagement, improve satisfaction, and free human agents to focus on complex issues. By leveraging AI, businesses can deliver a more efficient and proactive customer service experience, fostering loyalty and driving growth.
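The chatbot pattern described above comes down to two moves: answer common questions instantly, and hand everything else to a person. This Python sketch deliberately uses fuzzy string matching from the standard-library `difflib` module as a simple stand-in for the NLP intent models that production chatbots use. The FAQ entries and the 0.75 similarity cutoff are hypothetical.

```python
import difflib

# Hypothetical FAQ knowledge base, keyed by normalized (lowercase, unpunctuated) questions.
FAQ = {
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
    "what are your support hours": "Support is available 9am to 6pm, Monday to Friday.",
    "how can i track my order": "Open 'My orders' and select the order to see its status.",
}
HANDOFF = "Let me connect you with a human agent."

def answer(question: str, cutoff: float = 0.75) -> str:
    """Return the closest FAQ answer, or hand off to a human when nothing is similar enough."""
    normalized = question.lower().strip(" ?!.")
    matches = difflib.get_close_matches(normalized, list(FAQ), n=1, cutoff=cutoff)
    return FAQ[matches[0]] if matches else HANDOFF

print(answer("How do I reset my password?"))
print(answer("Can I cancel my subscription?"))  # falls back to the human hand-off
```

Raising the cutoff trades fewer wrong automated answers for more hand-offs. That is the same balance between speed and human oversight that applies to any AI-driven support flow.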

9. Finance And Accounting:

AI technology has greatly improved the efficiency and accuracy of financial processes in businesses. Business process automation tools like AI-driven expense management systems automate expense submission, categorization, and reimbursement, reducing the risk of discrepancies and enhancing compliance. AI in finance also enables efficient invoice processing by extracting relevant information, automating approval workflows, and minimizing manual handling. Additionally, AI can detect anomalies in expense reports and identify unusual spending patterns, ensuring financial integrity. Furthermore, AI algorithms provide accurate financial forecasts and insights by analyzing historical data, market trends, and external factors, helping businesses make informed decisions and optimize their financial strategies.
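Spotting unusual spending patterns, as described above, can start with a robust statistical rule before any trained model is involved. The Python sketch below applies the modified z-score, a common rule of thumb: 0.6745 × (x − median) / MAD, flagged above 3.5. It uses the median and the median absolute deviation (MAD) so that one huge expense cannot inflate the baseline it is compared against. The data is hypothetical.

```python
import statistics

def flag_anomalies(amounts: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indexes of amounts that look unusual for this spending history.

    Uses the modified z-score 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation. Unlike the mean and standard deviation, the
    median and MAD are not dragged toward the outliers being detected."""
    if len(amounts) < 3:
        return []  # too little history to judge
    median = statistics.median(amounts)
    mad = statistics.median(abs(x - median) for x in amounts)
    if mad == 0:
        return []  # identical amounts; a real system would fall back to another rule
    return [i for i, x in enumerate(amounts)
            if abs(0.6745 * (x - median) / mad) > threshold]

# Hypothetical meal expenses for one employee; the last one is suspicious.
history = [42.0, 38.5, 45.0, 40.0, 39.0, 41.5, 43.0, 950.0]
print(flag_anomalies(history))  # [7]
```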

10. Operations And Supply Chain:

AI plays a vital role in supply chains across industries by improving demand forecasting, inventory optimization, and fleet management. In retail, business process automation services strengthened by AI-driven demand forecasting use historical data and external factors to predict product demand accurately, enabling retailers to optimize inventory levels and keep customers satisfied. In manufacturing, AI helps optimize inventory management by analyzing production data and supplier lead times, minimizing both excess stock and stockouts. In transportation, AI-driven predictive maintenance analyzes vehicle sensor data and historical records to predict component failures, improving fleet efficiency and reducing unplanned repairs. Across these industries, AI leads to better decision-making, lower costs, and smoother overall operations.
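Inventory optimization ultimately feeds a reorder decision. The Python sketch below uses the classic reorder-point formula with safety stock: expected demand over the supplier lead time plus a buffer for demand variability. Here the forecast is a plain historical average, which is the baseline that AI demand forecasting aims to improve on. The 1.645 service factor assumes roughly normal, independent daily demand and a 95% cycle service level. The demand figures are hypothetical.

```python
import math
import statistics

def reorder_point(daily_demand: list[float], lead_time_days: float,
                  service_z: float = 1.645) -> float:
    """Reorder point = expected demand during the lead time + safety stock.

    Expected demand uses the historical average (needs at least two data points), and
    safety stock = z * stdev(daily demand) * sqrt(lead time).
    z = 1.645 targets roughly a 95% cycle service level if daily demand
    is approximately normal and independent from day to day."""
    avg = statistics.mean(daily_demand)
    sigma = statistics.stdev(daily_demand)
    safety_stock = service_z * sigma * math.sqrt(lead_time_days)
    return avg * lead_time_days + safety_stock

def should_reorder(on_hand: int, on_order: int, rop: float) -> bool:
    """Trigger replenishment once the inventory position drops to the reorder point."""
    return on_hand + on_order <= rop

last_week = [20, 22, 18, 25, 15, 20, 20]
rop = reorder_point(last_week, lead_time_days=4)
print(round(rop, 1), should_reorder(on_hand=85, on_order=0, rop=rop))  # 90.2 True
```

Swapping the average for a model-based forecast (and its forecast error for the standard deviation) is where AI typically plugs into this calculation.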

11. IT And Cybersecurity:

AI, including generative AI, plays a vital role in strengthening cybersecurity and streamlining IT operations. It offers real-time threat detection, behavioral analysis for proactive threat identification, rapid response to phishing incidents, automated patch management, efficient troubleshooting assistance, and intelligent ticket routing and prioritization. These AI-driven solutions help organizations address cybersecurity threats swiftly, minimize downtime, and optimize IT support functions, ultimately improving their security posture and business resilience.
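Ticket routing and prioritization, listed above, pairs a classifier with a priority queue. In this Python sketch a hypothetical keyword table stands in for a trained text classifier. The standard-library `heapq` module then serves the most severe ticket first and, within the same severity, the oldest first.

```python
import heapq
import itertools
import re
from typing import Optional

# Hypothetical severity ranking: lower number is handled first.
SEVERITY = {"security": 0, "outage": 1, "degraded": 2, "request": 3}
# Hypothetical keyword rules standing in for a trained text classifier;
# categories are checked in this order, so security wins ties.
KEYWORDS = {
    "security": {"phishing", "malware", "breach"},
    "outage": {"down", "unreachable", "outage"},
    "degraded": {"slow", "timeout", "error"},
}

def classify(summary: str) -> str:
    """Assign a category by whole-word keyword match ('download' does not match 'down')."""
    words = set(re.findall(r"[a-z]+", summary.lower()))
    for category, keywords in KEYWORDS.items():
        if words & keywords:
            return category
    return "request"

class TicketQueue:
    """Serves the most severe ticket first and, within a tier, the oldest first."""

    def __init__(self) -> None:
        self._heap: list[tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def submit(self, summary: str) -> str:
        category = classify(summary)
        heapq.heappush(self._heap, (SEVERITY[category], next(self._arrival), summary))
        return category

    def next_ticket(self) -> Optional[str]:
        return heapq.heappop(self._heap)[2] if self._heap else None

tickets = TicketQueue()
for summary in ["Need a new monitor", "VPN is slow this morning",
                "Suspicious phishing email received", "Email server is down"]:
    tickets.submit(summary)
print(tickets.next_ticket())  # Suspicious phishing email received
```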

12. Legal:

AI is changing the legal industry by automating and streamlining many aspects of legal work. It speeds up contract review by scanning legal documents for key terms, conditions, and potential issues. AI-powered document automation streamlines the creation of legal documents by generating drafts from predefined templates. In M&A transactions, AI helps review extensive documentation and identify potential legal risks, accelerating due diligence. It also supports contract lifecycle management by automating various stages, improving compliance and reducing the risk of disputes. Applying AI in these areas enhances operational efficiency, reduces errors, and lets legal professionals focus on more complex and strategic work.
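Contract review, described above, means scanning documents for key terms and for missing ones. The Python sketch below uses regular expressions as a transparent stand-in for the language models that commercial contract-review tools use. The checklist of clause patterns and the set of required clauses are hypothetical. The function returns the sentences that mention each clause and lists any required clause it could not find.

```python
import re

# Hypothetical review checklist: clause label -> regex that signals the clause.
CLAUSE_PATTERNS = {
    "auto_renewal": r"automatic(ally)?\s+renew",
    "unlimited_liability": r"unlimited\s+liability",
    "termination_for_convenience": r"terminat\w*\s+for\s+convenience",
    "governing_law": r"governed\s+by\s+the\s+laws?\s+of",
}
REQUIRED_CLAUSES = {"governing_law"}  # hypothetical: must appear in every contract

def scan_contract(text: str) -> tuple[dict[str, list[str]], list[str]]:
    """Return (findings, missing): sentences matching each clause, and required clauses not found."""
    sentences = re.split(r"(?<=[.;])\s+", text.strip())
    findings: dict[str, list[str]] = {}
    for label, pattern in CLAUSE_PATTERNS.items():
        hits = [s for s in sentences if re.search(pattern, s, re.IGNORECASE)]
        if hits:
            findings[label] = hits
    missing = sorted(REQUIRED_CLAUSES - set(findings))
    return findings, missing

contract = ("This Agreement shall automatically renew for successive one-year terms. "
            "Either party may terminate for convenience with 30 days notice.")
findings, missing = scan_contract(contract)
print(sorted(findings))  # ['auto_renewal', 'termination_for_convenience']
print(missing)           # ['governing_law']
```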

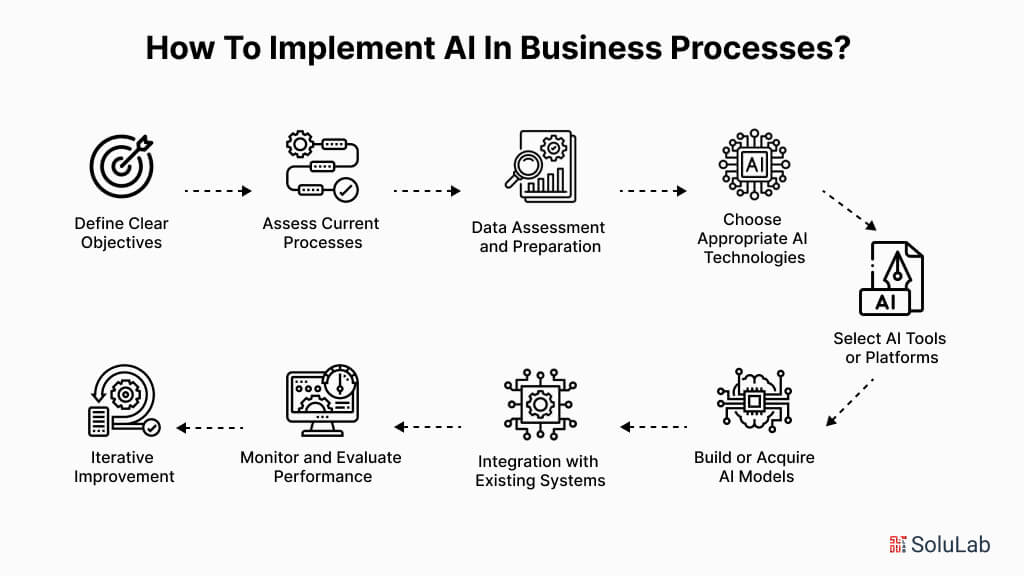

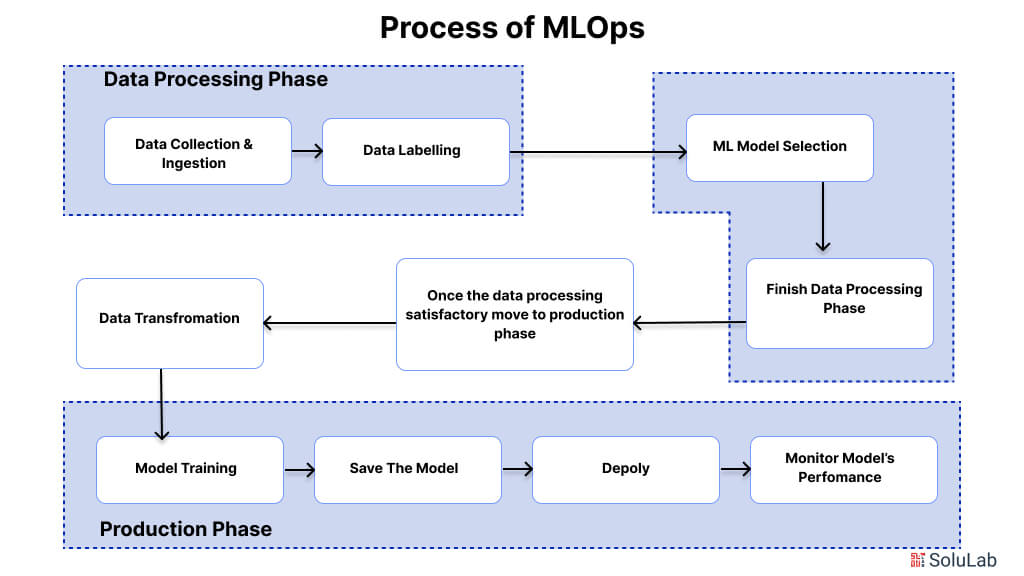

How To Implement AI In Business Processes?

Integrating AI into business processes requires a strategic approach to maximize its benefits and ensure successful implementation. Here’s a general guideline for effective AI integration:

- Define Clear Objectives: Clearly articulate the business goals you aim to achieve with AI, whether it’s improving efficiency, reducing costs, enhancing customer experience, or gaining a competitive edge. Having well-defined objectives is vital to ensure the automation of business processes aligns with your strategic goals.

- Assess Current Processes: Evaluate existing business processes to identify where AI can make the most significant impact. Look for repetitive tasks, data-intensive operations, or areas with potential for optimization. Understanding the benefits of business process automation and identifying its use cases will help you focus on the most impactful areas.

- Data Assessment and Preparation: AI relies heavily on data. Assess the quality, quantity, and accessibility of your data. Ensure data is cleaned, organized, and representative of the processes you want to automate or optimize. Implement data governance practices to maintain data quality, which is crucial for automating business processes.

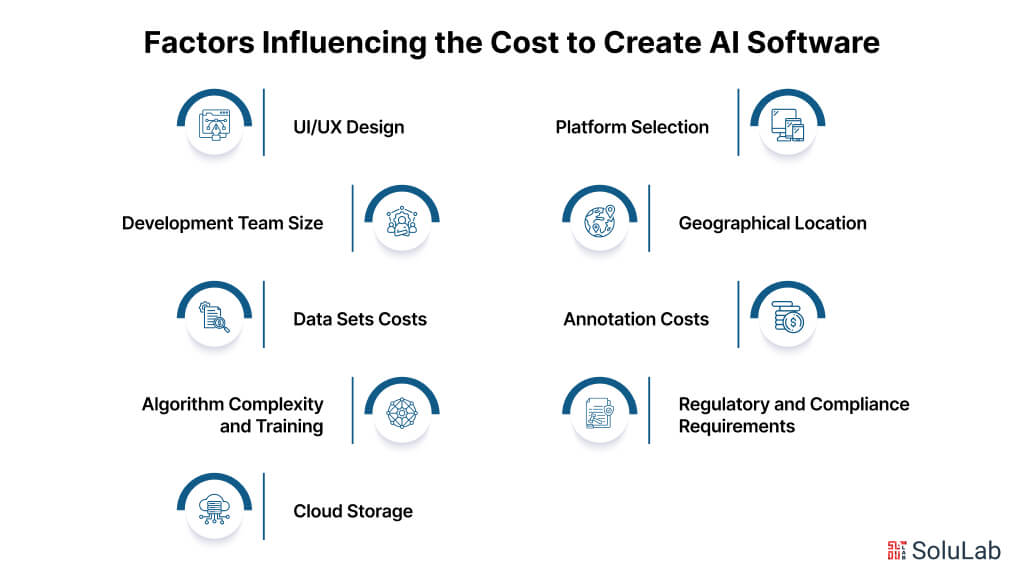

- Choose Appropriate AI Technologies: Select the right AI technologies for your specific needs. This could include machine learning, natural language processing, computer vision, or a combination of these. The choice of technology should align with your business objectives and the nature of your processes, as seen in various business process automation examples.

- Select AI Tools or Platforms: Depending on your resources and requirements, choose AI tools or platforms that suit your business, ranging from pre-built AI solutions to custom development. Many cloud service providers offer AI services that can be integrated into your existing infrastructure.

- Build or Acquire AI Models: If you opt for custom solutions, you may need to build AI models tailored to your business needs. This involves training models on relevant data to make predictions, classify information, or automate tasks. Alternatively, you can start from pre-trained models and customize them for your specific requirements.

- Integration with Existing Systems: Ensure seamless integration of AI into existing business systems. This may involve collaborating with your IT department to connect AI solutions with databases, applications, and other infrastructure components, enhancing the overall business process automation benefits.

- Monitor and Evaluate Performance: Implement monitoring mechanisms, for example through performance management software, to track how your AI applications perform. Regularly evaluate how well the AI meets business objectives and make adjustments as needed. This may involve refining models, updating data, or modifying algorithms based on real-world feedback.

- Iterative Improvement: AI implementation is an iterative process. Use feedback from users and performance metrics to improve and optimize your AI applications continually, and stay informed about advances in AI technology that could further enhance your processes.
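The "Monitor and Evaluate Performance" step above can begin with something as small as a rolling accuracy check. Each AI decision is compared with the outcome a person later confirmed. This Python sketch is a hypothetical helper, not part of any specific performance management product, and its window size and target accuracy are placeholders to tune per process. It flags the process for review when accuracy drifts below target.

```python
from collections import deque

class AccuracyMonitor:
    """Track rolling accuracy of an AI step against human-verified outcomes.

    `window` and `target` are hypothetical settings to tune per process."""

    def __init__(self, window: int = 100, target: float = 0.95) -> None:
        self.results: deque[bool] = deque(maxlen=window)  # oldest results drop off
        self.target = target

    def record(self, predicted: str, actual: str) -> None:
        self.results.append(predicted == actual)

    @property
    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_attention(self, min_samples: int = 20) -> bool:
        """True once enough samples exist and accuracy has fallen below target."""
        return len(self.results) >= min_samples and self.accuracy < self.target

monitor = AccuracyMonitor(window=10, target=0.9)
for predicted, actual in [("approve", "approve")] * 8 + [("approve", "reject")] * 2:
    monitor.record(predicted, actual)
print(monitor.accuracy, monitor.needs_attention(min_samples=5))  # 0.8 True
```

When `needs_attention` fires, the iterative-improvement loop takes over: review the misclassified cases, refresh the data, and retrain or adjust the model.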

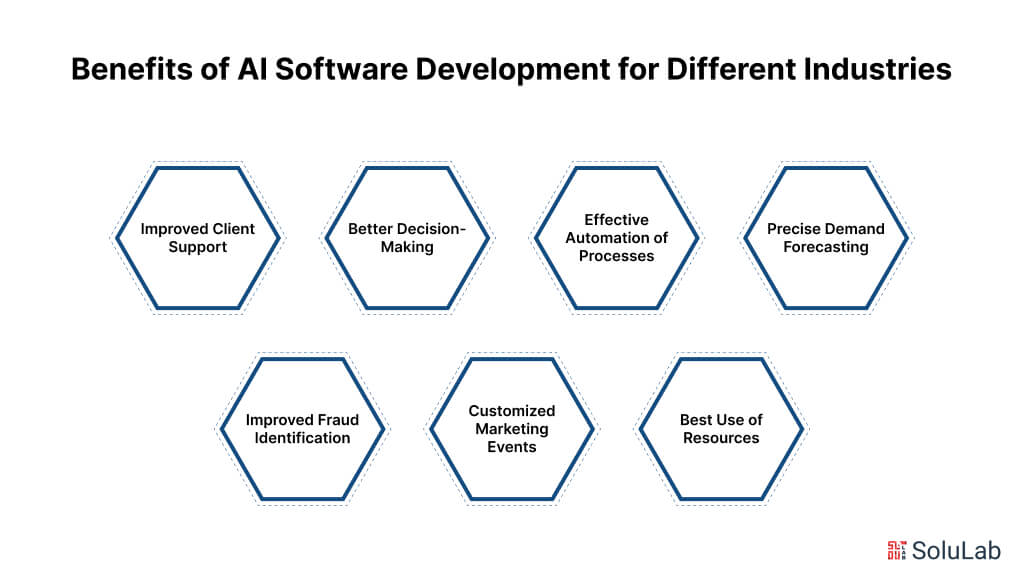

Benefits of AI in Business Automation

AI and BPA, when combined, create a powerful synergy that goes beyond traditional business operations and creates value across diverse domains, from revenue generation and cost control to customer satisfaction and brand expansion. By strategically incorporating AI into business process automation, organizations can achieve their fundamental objectives and position themselves for long-term growth and innovation in a highly competitive market.

Combining the capabilities of AI and BPA delivers several important benefits for modern businesses. These include:

1. Enhancing Revenue Streams

Businesses primarily aim to optimize revenue and sustain its growth. Common strategies include attracting more customers, boosting sales, introducing new products or services, and adjusting prices. Using AI and BPA to automate sales and marketing processes can contribute significantly to increased revenue. CRM platforms, for example, can streamline lead management, while AI-powered chatbots, such as those increasingly used in banking, offer personalized recommendations that create more sales opportunities. Invoicing tools can expedite billing, and predictive analytics solutions can help forecast sales, enabling businesses to make informed decisions and optimize their revenue streams.

2. Decreasing Operational Expenses

Optimizing operations, automating business processes, and offloading non-essential functions are crucial strategies for businesses aiming to reduce costs and boost profits. BPA plays a pivotal role in cost reduction by automating routine tasks, freeing up resources and improving efficiency. Workflow automation tools can also generate significant savings by streamlining processes and eliminating manual labor. AI-driven finance applications offer insights into spending patterns, helping businesses identify areas for cost optimization and make data-driven decisions.

3. Boosting Customer Satisfaction

Achieving success hinges on ensuring customer satisfaction. Providing high-quality products, exceptional service, and responsiveness to feedback is paramount. AI-powered chatbots can deliver uninterrupted support, prompt issue resolution, and tailored recommendations. Moreover, automating order fulfillment through CRM tools provides an integrated view of the customer, facilitating customized interactions that enhance satisfaction and foster loyalty.

4. Increasing Brand Recognition

Building a successful business goes hand-in-hand with establishing a strong brand. Enhancing brand awareness is essential and can be achieved through marketing efforts, optimizing online presence, and fostering a positive brand image. Artificial Intelligence (AI) and Business Process Automation (BPA) technologies can significantly aid in this process by refining marketing strategies and increasing online visibility. Tools for managing social media and search engine optimization (SEO) can enhance the quality and reach of website content. AI-powered sentiment analysis tools enable businesses to gauge customer responses effectively, leading to more targeted and personalized communication.

5. Expanding Market Share

To gain a competitive edge, expand, or merge, it is crucial to have a growing market share. AI and BPA offer valuable insights into customer behavior and enhance operational efficiency, thereby contributing to market share growth. AI-powered analytics tools can identify areas for growth, and supply chain management software simplifies interactions with suppliers. Business process automation (BPA) helps businesses stay competitive and capture a larger market share.

6. Fostering Innovation

Innovation is crucial for staying competitive. To innovate effectively, it’s essential to comprehend customer demands gleaned from diverse feedback channels. Artificial Intelligence (AI) and Business Process Automation (BPA) play significant roles in driving innovation by providing valuable insights and uncovering untapped opportunities. Analytical tools empowered by AI can scrutinize customer feedback, pinpointing potential new products that align with consumer desires. Additionally, BPA software enables businesses to streamline processes, minimize inefficiencies, and heighten quality. This combination of AI and BPA nurtures an environment conducive to innovation and overall operational efficiency.

7. Automating Routine Tasks

By adopting AI-driven business process automation, organizations can improve efficiency by minimizing manual tasks and reducing errors. AI algorithms contribute further by processing and analyzing data at speeds and scales beyond human capabilities. This automation also increases employee productivity, freeing up time for more strategic and creative work. AI-powered business automation tools assist employees with data analysis, research, and decision-making, helping them make informed choices and drive business growth.

8. Facilitating Seamless Integration

In an environment where tools and technologies play a crucial role, AI-powered business process automation solutions stand out for their ability to integrate with a wide range of systems, from CRM and ERP software to project management platforms. Because these solutions can be incorporated into existing systems and work across platforms, businesses can enhance their current workflows without complete overhauls, optimizing operations and maximizing efficiency.

9. Driving Continuous Improvement

Businesses can remain competitive by incorporating AI into their business process automation. AI solutions are inherently scalable, allowing them to handle growing workloads and adapt to evolving business requirements. Because AI systems can learn and improve over time, they keep optimizing processes to deliver better results and performance. This helps businesses stay at the forefront of technology and achieve long-term success.

10. Optimizing Resource Allocation

Incorporating AI into business process automation improves how resources are allocated and used. AI-driven business process automation enables businesses to monitor inventory, forecast demand, and optimize logistics effectively. By analyzing historical data to anticipate future requirements, AI tools support better inventory management, demand forecasting, and logistics planning, resulting in lower costs and higher productivity.

11. Improved Decision Making

AI-powered business process automation helps businesses make more effective decisions by leveraging advanced analytics and machine learning. By processing large datasets, AI uncovers patterns and generates actionable insights, enabling data-driven, predictive, and real-time decision-making. This improves accuracy, minimizes human error, and supports strategic planning, so organizations can respond swiftly to market changes and make informed decisions that drive better outcomes and long-term success.

Overall, the integration of AI and business process automation offers versatile solutions across business domains, including revenue enhancement, cost optimization, customer satisfaction, brand development, market expansion, and innovation. By harnessing these technologies, businesses can forge a path toward sustainable growth and competitive advantage.

Challenges and Risks of AI in Business Process Automation

The incorporation of artificial intelligence (AI) into business process automation (BPA) offers the potential for increased efficiency. Nevertheless, this technological advancement is accompanied by a distinct array of challenges and potential risks that require careful consideration.

- Data Security and Privacy Concerns: AI systems rely on vast amounts of data, often including sensitive information about customers, employees, and business operations. This raises significant concerns about data security and privacy. Without robust security measures, this data can be vulnerable to unauthorized access, theft, or misuse, leading to financial losses, reputational damage, and legal consequences. Organizations must implement stringent data encryption, access controls, and intrusion detection systems to protect this sensitive information.

-

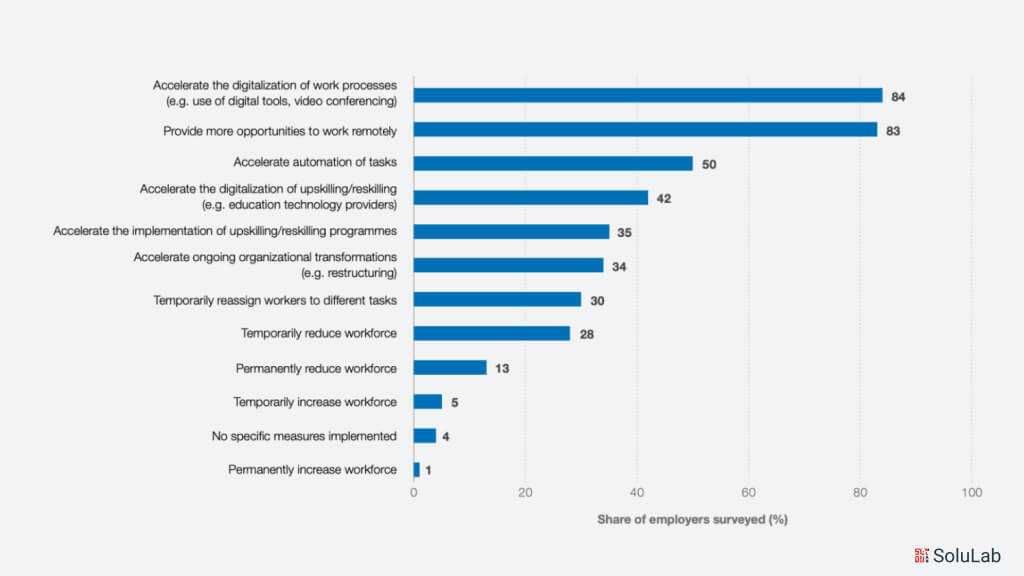

Workforce Adaptation and Training:

AI-powered BPA solutions can lead to a shift in employee roles and responsibilities, requiring workers to adapt to new technologies and tasks. This can be a significant challenge, especially for employees who are not technologically savvy or who have limited opportunities for training and skill development. Organizations need to invest in comprehensive training programs to help employees understand and operate AI systems effectively. Additionally, they must provide clear communication and support to address employee concerns about job displacement and career development.

- Ethical Considerations: AI systems can inadvertently perpetuate biases present in their training data, leading to unethical decisions. For example, an AI-powered resume screening tool trained on biased data may unfairly discriminate against certain demographic groups. Organizations must carefully evaluate the ethical implications of AI in business process automation and take measures to mitigate potential biases. They should establish clear ethical guidelines, regularly audit their AI systems for bias, and provide mechanisms for users to challenge biased decisions.

- Lack of Human Oversight: Excessive reliance on AI without human oversight can result in errors and missed contextual factors. AI systems are not infallible and can make mistakes due to incorrect data, faulty algorithms, or unexpected scenarios. Without human oversight, these errors can go undetected and lead to severe consequences. Organizations must ensure that AI-driven automation systems are used as decision-making aids rather than replacements for human judgment. They should establish clear roles and responsibilities for human oversight, with people ready to intervene when necessary to correct errors or provide additional context.

- Integration Challenges: Integrating AI solutions into existing systems and workflows can be complex and disruptive. AI systems often require specialized hardware, software, and data formats, which can be difficult to integrate with legacy systems. Organizations must carefully plan and execute AI automation projects, considering factors such as data compatibility, system interoperability, and user experience. They should also provide adequate training and support to help users adapt to the new AI-enabled systems and workflows.
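The Ethical Considerations point above recommends regularly auditing AI systems for bias. One widely used first screen is the "four-fifths rule" from US employment-selection guidance. It flags any group whose selection rate falls below 80% of the highest group's rate. This Python sketch applies that rule to hypothetical outcomes from an AI resume screener. A flag is a prompt for human investigation, not proof of discrimination.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping groups with no applicants."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items() if applicants > 0}

def adverse_impact(outcomes: dict[str, tuple[int, int]], ratio: float = 0.8) -> list[str]:
    """Groups whose selection rate is below `ratio` times the highest group's rate.

    With ratio=0.8 this is the four-fifths rule screening heuristic; a flag calls
    for human investigation and is not by itself proof of discrimination."""
    rates = selection_rates(outcomes)
    if not rates:
        return []
    best = max(rates.values())
    return [group for group, rate in rates.items() if rate < ratio * best]

# Hypothetical audit of an AI resume screener: (candidates advanced, candidates screened).
audit = {"group_a": (48, 100), "group_b": (30, 100)}
print(adverse_impact(audit))  # ['group_b']
```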

Future Of AI in Business Process Automation

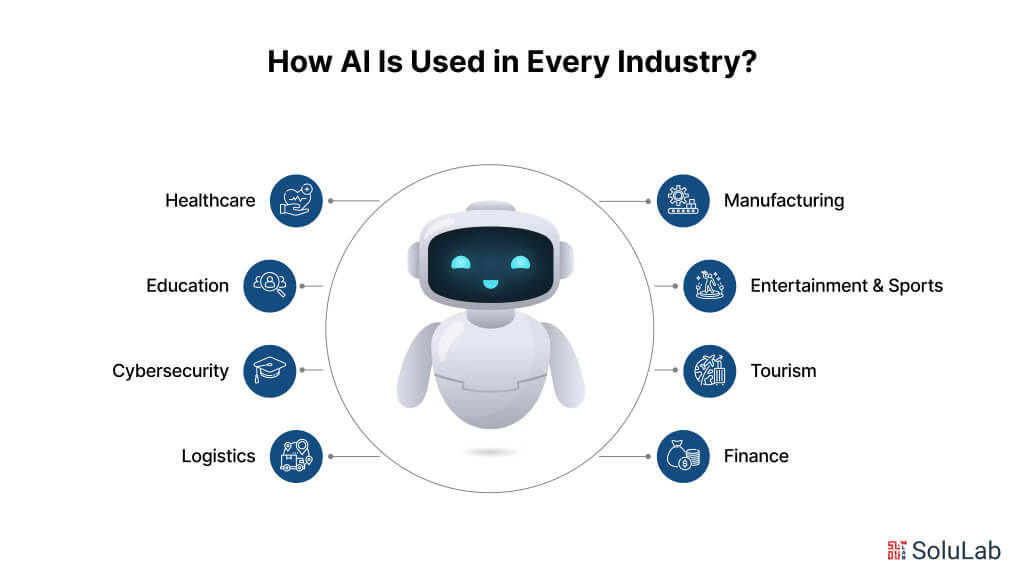

As AI transforms business process automation, several key trends are shaping its future. Advances in AI technology, including natural language processing, computer vision, and deep learning, will enhance communication, analysis, and decision-making. The integration of AI and the Internet of Things (IoT) will create smarter devices and enable predictive maintenance. Combining AI with Robotic Process Automation (RPA) will produce more intelligent software bots. AI-driven predictive analytics will optimize resource planning and strategic decision-making, while autonomous decision-making systems will execute tasks and make data-driven decisions on their own. The democratization of AI will make advanced tools accessible to smaller businesses. Hyperautomation, which combines AI, RPA, and other automation tools, will automate multiple processes end to end within comprehensive automation frameworks. Together, these trends promise greater efficiency, personalization, and better decision-making across industries, and as adoption accelerates, AI will move business process automation toward an increasingly automated and intelligent future. Partnering with an AI consulting company can help businesses navigate these advancements and fully capitalize on the benefits of AI.

Conclusion

AI in Business Process Automation is revolutionizing how businesses operate by improving efficiency and reducing costs. Automating repetitive tasks and optimizing workflows allows organizations to focus on innovation and growth, while AI-powered solutions help unlock new levels of performance and customer satisfaction.

To make the most of AI in your operations, partnering with an experienced AI development company is crucial. If you’re looking to hire AI developers who can tailor solutions to your specific needs, SoluLab offers the expertise to help businesses implement advanced AI technologies, driving success and innovation.

FAQs

1. What is business process automation (BPA)?

Business process automation (BPA) involves using technology to streamline and automate repetitive and manual tasks within business processes. This can include automating workflows, data entry, and other routine tasks to improve efficiency, reduce errors, and cut costs.

2. How does AI enhance business process automation?

AI enhances business process automation by introducing intelligent capabilities such as predictive analytics, natural language processing, and machine learning. This allows for more sophisticated automation, including data-driven decision-making, real-time insights, and the ability to handle complex tasks that go beyond traditional automation.

3. What are some examples of business process automation?

Examples of business process automation include automated invoice processing, customer service chatbots, and workflow automation for approvals and document management. These solutions help streamline operations, reduce manual effort, and improve accuracy across various business functions.

4. What are the benefits of business process automation?

The benefits of business process automation include increased efficiency, reduced operational costs, minimized errors, enhanced productivity, and improved compliance. By automating repetitive tasks, businesses can allocate resources more effectively and focus on strategic activities.

5. How can I get help with AI integration for my project?

If you're looking to integrate AI into your project, consider reaching out to an AI consulting company or AI development company. These companies can offer expertise in applying AI technologies to improve your business processes and overall performance.

6. What are some use cases for AI in business process automation?

Use cases for AI in business process automation include automating customer support with chatbots, streamlining invoice processing with intelligent data extraction, and optimizing supply chain management through predictive analytics. These applications enhance operational efficiency and provide valuable insights for better decision-making.

7. How do I get started with implementing AI for business process automation?

To get started, define your business objectives, assess your current processes, and identify areas where AI can make the most impact. Choose the right business process automation tools and AI-powered BPA solutions that align with your needs. SoluLab can assist with integrating these technologies and ensuring a smooth implementation to achieve your automation goals.
