Generative AI has emerged as a transformative technology in recent years, revolutionizing various industries with its potential to create original content such as images, text, and even music. The advancements in generative AI have enabled machines to learn, create and produce new content, leading to unprecedented innovation across various sectors. As a result, many companies are now considering generative AI technology and hiring Generative AI Development Companies to leverage its benefits and enhance their operations with AI-led automation.

Generative AI is a branch of AI that focuses on learning from existing data and producing original content through machine learning algorithms. This technology is transforming how businesses operate and enhancing their ability to provide customized solutions. It has become a hot topic in the market, with many companies investing in it to leverage its benefits.

This blog will provide in-depth information about generative AI, how it works, the different types of generative AI models, and their applications in various industries. We will also discuss the tech stack used in Generative AI development services and how natural language processing (NLP), deep learning, and machine learning play a critical role in this technology. By the end of this blog, you will have a comprehensive understanding of generative AI and its significance in today’s digital landscape.

What is Generative AI?

Generative AI is a field of artificial intelligence that involves using machine learning algorithms to create new data or content that did not exist before. Unlike traditional AI, which involves using pre-existing data to make predictions or decisions, Generative AI generates novel content, such as images, music, or text, based on a given input or set of rules.

Generative AI algorithms use various techniques, including deep learning, reinforcement learning, and probabilistic programming, to create new content. These algorithms work by learning patterns and relationships within the data and then using this knowledge to generate new content similar to the input data.

One of the most exciting aspects of Generative AI is its ability to create highly realistic and convincing content that can be difficult to distinguish from human-created content. This has led to many creative applications of Generative AI, from generating new music and art to creating virtual characters and entire worlds.

How Does it Differ From Other Types of AI? (Supervised and Unsupervised Learning)

Generative AI differs in its output from other types of AI, such as supervised and unsupervised learning. While supervised and unsupervised learning are focused on learning patterns and making predictions based on existing data, generative AI is focused on creating new and original content that does not exist in the training data.

Supervised learning involves training a machine learning model on labeled data to make predictions or classifications based on new, unseen data. On the other hand, unsupervised learning involves finding patterns and relationships in unlabeled data without specific guidance or targets.

In contrast, generative AI models use unsupervised learning techniques to learn patterns and relationships in data, but the focus is on creating new content rather than making predictions or classifications. Generative models are trained to learn the underlying structure and characteristics of the data. They can then generate new, original content similar to the training data but not identical.

The Technologies Within Generative AI; Types of AI Models

Although generative AI is often discussed as a single technology, it comprises several types of AI models, each responsible for different operations. The main ones are described below.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks, or GANs for short, are a class of generative AI models that has gained much attention in recent years. A GAN consists of two neural networks: a generator and a discriminator. The generator’s job is to create realistic outputs that mimic the training data, while the discriminator’s job is to identify whether a given sample is real or fake.

The GAN framework was first proposed in 2014 by Ian Goodfellow and his colleagues at the University of Montreal. They wanted to create a system to generate new samples similar to the original data. They came up with the idea of using two neural networks in competition with each other.

The generator network is trained to create new samples similar to the training data, while the discriminator network is trained to distinguish between real and fake data. The generator is then adjusted based on the feedback from the discriminator until it can create realistic outputs that fool the discriminator.

Since their introduction, GANs have been used for various applications, including image and video generation, text generation, and music composition. With their ability to create realistic and diverse outputs, GANs quickly became one of the most exciting areas of AI research.

How Do GANs Work?

A Generative Adversarial Network (GAN) consists of two neural networks: the generator and the discriminator. The generator produces new data, while the discriminator judges whether a given sample is real or generated.

The generator takes a random input and creates new data, such as images or audio. Initially, the generated data is random and meaningless. The discriminator then receives both real and generated data and learns to differentiate between them.

The two networks are trained together in a competition: the generator tries to create data that can fool the discriminator into thinking it is real, while the discriminator tries to correctly identify which data is real and which is generated. Through this process, the generator learns to create more realistic data, and the discriminator becomes better at distinguishing between real and fake data.

Over time, the generator becomes skilled at creating data that is so realistic that it can often fool humans into thinking it is real. This technique has been used to generate realistic images, videos, and even music.
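The competition described above can be made concrete by looking at the loss each network tries to minimize. The following is a minimal sketch in plain Python, assuming the discriminator outputs a probability that a sample is real. The function names `bce`, `discriminator_loss`, and `generator_loss` are hypothetical, and the generator loss shown is the commonly used "non-saturating" variant, chosen here for illustration.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one predicted probability and a 0/1 target."""
    eps = 1e-12  # keeps log() away from zero
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1 - target) * math.log(1.0 - prob))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated samples scored 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants its samples to be scored as real (target 1)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Early in training the discriminator easily spots generated samples,
# so the generator's loss is high.
early = generator_loss([0.05, 0.10])
# Later, generated samples receive much higher "real" scores.
late = generator_loss([0.60, 0.70])
print(early, late)
```

In a real GAN, the probabilities passed to these functions would come from the discriminator network, and both networks would be updated by gradient descent on their respective losses.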

Autoencoders

Autoencoders are another type of generative AI model that has become increasingly popular. Autoencoders are neural networks trained to compress and reconstruct input data, such as images or text. This process of compression and reconstruction allows the autoencoder to learn a compressed representation of the input data that can then be used to generate new, similar data.

Autoencoders are often used for image and audio compression as well as image and text generation tasks. For example, an autoencoder can be trained to compress an image into a lower-dimensional representation, which can then be stored or transmitted more efficiently. When the compressed representation is decoded, the reconstructed image may not be an exact copy of the original, but it preserves the original’s essential features.

Autoencoders have also been used to generate highly realistic images and videos. For example, researchers have used autoencoders to create deepfake videos, which use AI face swaps to replace one person’s face with another. While deepfakes have been controversial, they demonstrate the potential of autoencoders and other generative AI models to create highly realistic and convincing content.

How Do Autoencoders Work?

Autoencoders are neural networks trained to encode input data into a lower-dimensional representation and then decode it back to the original input. This lower-dimensional representation is known as a “latent space” or “embedding.” It can be used for tasks such as data compression, denoising, and image generation.

To do this, the autoencoder consists of two main parts: an encoder and a decoder. The encoder takes the input data and compresses it into a lower-dimensional representation, while the decoder takes this compressed representation and reconstructs the original input data.

The network is trained by minimizing a loss function that measures the difference between the original and reconstructed inputs. During training, the network learns to extract the most important features of the input data and encode them in the latent space, allowing it to generate high-quality reconstructions.

Overall, autoencoders are a powerful tool for unsupervised learning and have numerous applications in various fields, including computer vision, natural language processing, and anomaly detection.
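The encoder, decoder, and reconstruction loss described above can be sketched with a deliberately tiny example. This is a minimal illustration in plain Python, assuming a linear encoder and decoder with no activation functions and a hypothetical toy dataset of 2-D points. Real autoencoders use multi-layer neural networks and a deep learning library.

```python
# Toy data: 2-D points that all lie on the line x2 = 2 * x1,
# so a single latent number is enough to describe each point.
DATA = [(x, 2.0 * x) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

def encode(w, point):
    """Encoder: compress a 2-D point into one latent value."""
    return w[0] * point[0] + w[1] * point[1]

def decode(v, z):
    """Decoder: expand the latent value back into a 2-D point."""
    return (v[0] * z, v[1] * z)

def reconstruction_loss(w, v, data):
    """Mean squared error between inputs and their reconstructions."""
    total = 0.0
    for point in data:
        recon = decode(v, encode(w, point))
        total += (recon[0] - point[0]) ** 2 + (recon[1] - point[1]) ** 2
    return total / len(data)

def train(data, lr=0.02, epochs=500):
    w = [0.5, 0.5]  # encoder weights (fixed start for reproducibility)
    v = [0.5, 0.5]  # decoder weights
    for _ in range(epochs):
        for point in data:
            z = encode(w, point)
            recon = decode(v, z)
            err = (recon[0] - point[0], recon[1] - point[1])
            # Gradients of the squared error for this single point.
            grad_z = 2.0 * (err[0] * v[0] + err[1] * v[1])
            grad_v = (2.0 * err[0] * z, 2.0 * err[1] * z)
            grad_w = (grad_z * point[0], grad_z * point[1])
            for i in range(2):
                v[i] -= lr * grad_v[i]
                w[i] -= lr * grad_w[i]
    return w, v

loss_before = reconstruction_loss([0.5, 0.5], [0.5, 0.5], DATA)
w, v = train(DATA)
loss_after = reconstruction_loss(w, v, DATA)
print(loss_before, loss_after)
```

Because the toy data has only one underlying degree of freedom, a one-number latent space is enough to drive the reconstruction error toward zero. With real images, the latent space is far smaller than the input, and the reconstruction is only approximate.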

Variational Autoencoders (VAEs)

Autoencoders have been an essential tool in generative AI for quite some time, and advances in deep learning have led to a new type of autoencoder: the Variational Autoencoder (VAE). Like the traditional autoencoder, the VAE is an unsupervised learning algorithm that can be used for dimensionality reduction and data compression. However, it differs from the traditional autoencoder in that it uses a probabilistic model to encode the input data and generates new samples from the encoded data distribution.

The VAE addresses a key limitation of traditional autoencoders: their latent space is not structured for sampling, so decoding an arbitrary latent point often yields unrealistic output. By learning a probability distribution over the latent space, the VAE can draw new latent points and decode them into plausible, diverse samples, which makes it a powerful tool for generative AI.

How Do VAEs Work?

VAEs, or variational autoencoders, are a type of deep learning model that learns to generate new data by capturing a dataset’s underlying patterns and structures. Like regular autoencoders, VAEs have an encoder and a decoder network. However, VAEs use a probabilistic approach to generate new data rather than a deterministic one. During training, a VAE learns to encode an input data point into the parameters of a probability distribution over the latent space, typically a mean and a variance for each latent variable.

Then, using a sampling technique, the decoder network generates a new data point from the learned distribution. This process helps VAEs produce more diverse and realistic outputs than traditional autoencoders.
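The sampling step can be illustrated with a short sketch. It assumes the common setup in which the encoder outputs a mean and a log-variance for each latent dimension and the prior is a standard normal distribution. The names `sample_latent` and `kl_to_standard_normal`, and the example encoder outputs, are hypothetical.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder output for one input

# The same input yields a different latent point each time it is sampled,
# which is where the diversity of VAE outputs comes from.
z1 = sample_latent(mu, log_var, rng)
z2 = sample_latent(mu, log_var, rng)
print(z1, z2)

# This term is typically added to the reconstruction loss during training.
print(kl_to_standard_normal(mu, log_var))
```

The KL term pulls the learned distributions toward the standard normal prior, so that latent points drawn from the prior at generation time decode into plausible samples.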

Transformers

The Transformer model is a generative AI model commonly used in natural language processing tasks such as translation and text summarization. Its architecture is built around the self-attention mechanism, which allows it to process information in parallel and capture long-range dependencies between words in a sentence.

In simpler terms, the Transformer model breaks down a sentence into smaller parts and assigns a weight to each word based on its relationship with other words in the sentence. This allows it to understand the context of the sentence and generate accurate translations or summaries. The Transformer model has proven to be highly effective in natural language processing tasks and has become a popular choice for many applications in the field of AI.

How Do Transformers Work?

Unlike traditional sequence-to-sequence models that rely on recurrent neural networks (RNNs) to process sequential data, the Transformer model uses a novel attention-based mechanism to process input sequences.

At a high level, the Transformer model breaks down input sequences into smaller, more manageable chunks called “tokens.” These tokens are then processed by a series of encoder and decoder layers that use self-attention to calculate the importance of each token in the sequence.

During encoding, the Transformer model uses a multi-head self-attention mechanism to compute, for each token, a weighted sum over all tokens in the input. Using several attention heads lets the model focus on different parts of the input sequence simultaneously and capture more complex relationships between tokens. The resulting vectors, one per token, are then passed through feedforward layers to produce the encoded output.
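The weighted sum at the heart of self-attention can be sketched in a few lines. This is a simplified, single-head illustration in plain Python that assumes the token embeddings are used directly as queries, keys, and values. A real Transformer first applies learned projection matrices and runs several heads in parallel. The toy embeddings are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention: one output vector per token."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token attends to every token in the sequence.
        weights = softmax([dot(q, k) / scale for k in keys])
        # The output is a weighted sum of all value vectors.
        out = [sum(w * v[d] for w, v in zip(weights, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token embeddings, reused as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1 and shows how much one token draws on every other token. Because each row is computed independently of the others, all tokens can be processed in parallel.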

During decoding, the Transformer model uses a similar multi-head self-attention mechanism to generate output tokens one at a time. At each time step, the model attends to the previously generated tokens and the encoded input sequence to predict the next token in the output sequence.
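The token-by-token generation loop can be sketched as follows. This is a toy illustration only: the lookup table `NEXT_TOKEN_PROBS` is a hypothetical stand-in for a trained decoder, and it looks only at the last generated token, whereas a real Transformer decoder attends to all previously generated tokens and to the encoded input. The loop uses greedy decoding, which is one of several possible decoding strategies.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 0.9, "cat": 0.1},
    "the": {"cat": 0.7, "mat": 0.3},
    "cat": {"sat": 0.8, "<end>": 0.2},
    "sat": {"<end>": 1.0},
    "mat": {"<end>": 1.0},
}

def predict_next(generated):
    """Stand-in for the decoder: returns a probability for each candidate token."""
    return NEXT_TOKEN_PROBS[generated[-1]]

def greedy_decode(max_steps=10):
    """Generate one token per step, always picking the most likely one."""
    generated = ["<start>"]
    for _ in range(max_steps):
        probs = predict_next(generated)
        next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        generated.append(next_token)
    return generated[1:]  # drop the start marker

print(greedy_decode())  # ['the', 'cat', 'sat']
```

Sampling from the probabilities instead of always taking the maximum would produce more varied output, at the cost of occasionally choosing less likely tokens.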

One of the key advantages of the Transformer model is its ability to handle longer input sequences more efficiently than RNN-based models. This is because the self-attention mechanism allows the model to process all tokens of a sequence in parallel rather than one after another.

Additionally, the Transformer model has achieved state-of-the-art performance on a wide range of natural language processing tasks, including machine translation, language modeling, and text generation.

Despite its successes in natural language processing, the Transformer model is not limited to this domain. It has also been used for image generation, music generation, and other creative tasks that require generating complex and original content.

The Role of NLP, Deep Learning, and Machine Learning in Generative AI

NLP, deep learning, and machine learning are all key components of generative AI, playing different but complementary roles in generating new content.

NLP is particularly important for generative AI that deals with natural language, as it involves the processing and analysis of textual data, such as sentences, paragraphs, and documents. NLP models are used to understand and extract meaning from textual data and generate new content based on that input. This is critical in applications such as chatbots, where the AI must be able to generate natural language responses based on user input.

Deep learning, on the other hand, is a type of machine learning that uses artificial neural networks to analyze and learn patterns in data. This makes it well-suited for generating complex, high-dimensional data like images, music, and video. Deep learning models are trained on large datasets of real-world examples, which they use to generate new content similar in style and structure to the original data.

Finally, machine learning is the foundation of all generative AI, as it provides the algorithms and techniques necessary to train models on large datasets of real-world examples. Machine learning models learn from this training data, allowing them to generate new content similar in style and structure to the original data. By combining NLP, deep learning, and machine learning development techniques, researchers can create sophisticated generative models capable of producing new and original content in various applications, from art and music to natural language processing and beyond.

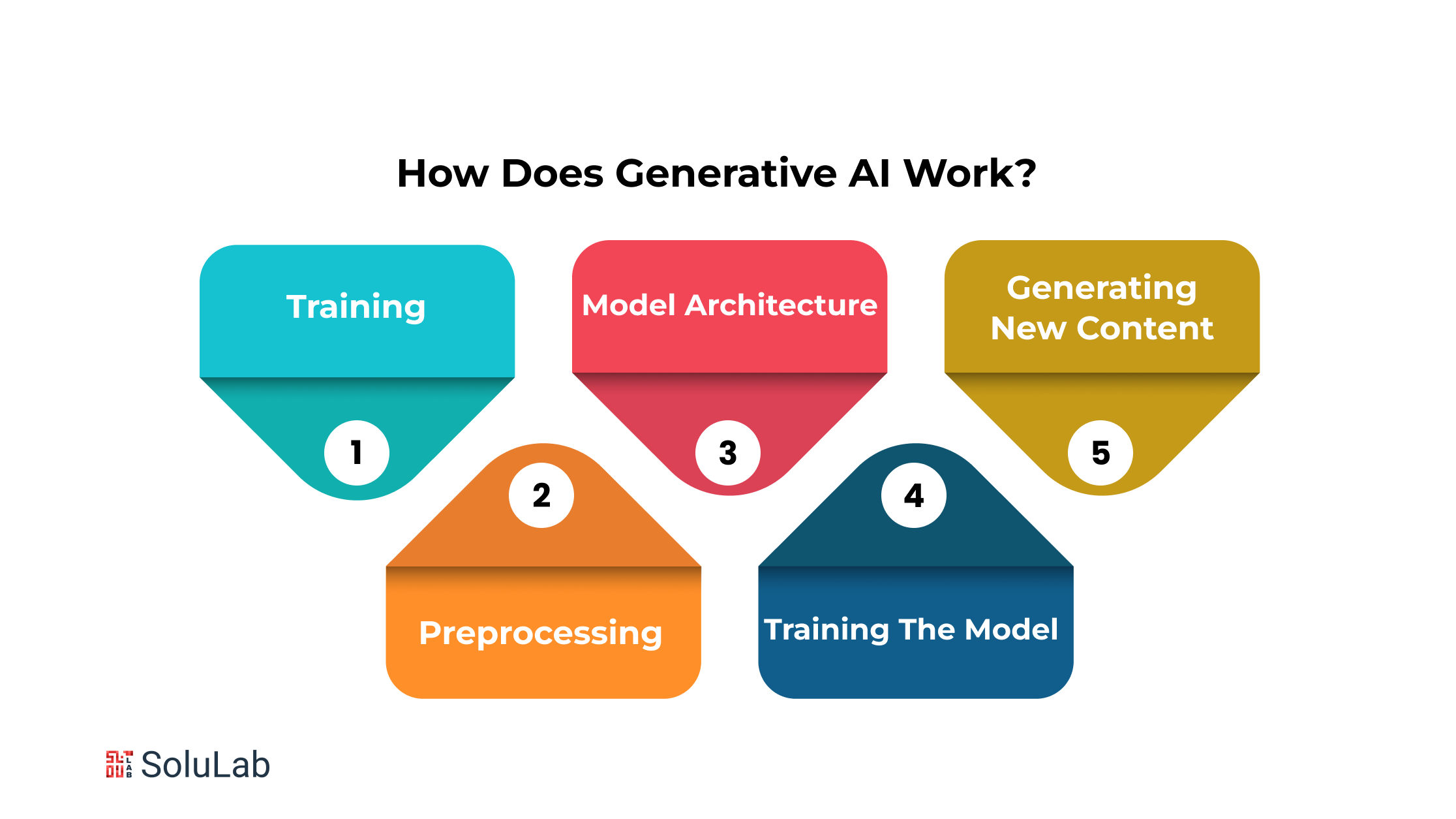

How Does Generative AI Work?

Here is a step-by-step overview of how generative AI works.

1. Training Data

To create a generative AI model, a large dataset is needed for the model to learn from. This dataset can be text, images, or any other data type the model will generate. In general, the more high-quality data the model has to train on, the better it will be able to generate new content.

2. Preprocessing

Before the data is fed into the generative AI model, it must be preprocessed to make it easier for the model to work with. This can involve converting the data into a numerical format, such as converting images into arrays of pixel values or text into numerical vectors.

3. Model Architecture

There are several types of generative AI models, each with its own architecture. One widely used type is the Generative Adversarial Network (GAN), which consists of two neural networks: a generator and a discriminator. The generator creates new content based on the input it receives, while the discriminator evaluates the content and provides feedback to the generator.

4. Training the Model

Once the model architecture is defined, the model is trained using the preprocessed data. In a GAN, for example, the generator creates new content based on random inputs, and the discriminator evaluates the content to determine if it is real or fake. The generator then adjusts its output based on the feedback from the discriminator until it can create content that is hard to distinguish from the real data.

5. Generating New Content

After training, the model can generate new content based on its input. For example, a text-based generative AI model might be fed a sentence or a paragraph and then generate a new paragraph or story based on that input. Similarly, an image-based generative AI model might be fed an image and then generate a new image that is similar, but not identical, to the original.
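The five steps above can be sketched end to end with a deliberately simple stand-in. Instead of a neural network, the example below uses a word-pair (bigram) count model, which is far less capable but follows the same flow: collect data, preprocess it into numbers, train, and generate. The corpus and all function names are hypothetical.

```python
import random
from collections import defaultdict

# Step 1. Training data: a tiny hypothetical corpus.
corpus = ["the cat sat on the mat", "the dog sat on the rug"]

def preprocess(sentences):
    """Step 2. Preprocessing: map each word to an integer id."""
    vocab, encoded = {}, []
    for sentence in sentences:
        ids = []
        for word in sentence.split():
            if word not in vocab:
                vocab[word] = len(vocab)
            ids.append(vocab[word])
        encoded.append(ids)
    return vocab, encoded

def train(encoded):
    """Steps 3 and 4. Model and training: count which id follows which."""
    counts = defaultdict(lambda: defaultdict(int))
    for ids in encoded:
        for current, following in zip(ids, ids[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, vocab, start_word, length, rng):
    """Step 5. Generation: sample each next word in proportion to its count."""
    id_to_word = {i: w for w, i in vocab.items()}
    current = vocab[start_word]
    words = [start_word]
    for _ in range(length - 1):
        followers = counts.get(current)
        if not followers:
            break  # no known continuation for this word
        candidates = list(followers)
        weights = [followers[c] for c in candidates]
        current = rng.choices(candidates, weights=weights)[0]
        words.append(id_to_word[current])
    return " ".join(words)

vocab, encoded = preprocess(corpus)
model = train(encoded)
print(generate(model, vocab, "the", 6, random.Random(0)))
```

The generated sentence recombines word pairs seen during training, so it resembles the corpus without necessarily copying any single sentence. Neural generative models do the same thing with far richer learned patterns.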

Generative AI Examples and Common Use Cases

Generative AI has many potential use cases, from generating realistic images and videos to creating natural language responses in chatbots. Some of the most common use cases for generative AI include the following:

1. Image and Video Generation:

Generative AI models, particularly those like Generative Adversarial Networks (GANs), are capable of producing images and videos that closely resemble real-world examples. This ability has revolutionized industries like entertainment, where CGI effects in movies and video games can now be created with astonishing realism. Additionally, generative models can be used in fields like fashion and design to generate new, unique patterns and styles for clothing, accessories, and interior design. Artists and creators can also leverage these models to generate novel artworks, pushing the boundaries of creativity and visual expression.

2. Music Generation:

Generative AI has entered the realm of music composition, enabling the creation of original compositions or accompaniments. By analyzing existing musical patterns and structures, these models can generate new melodies, harmonies, and rhythms. This finds applications in various domains, including background music for videos, video games, and other multimedia projects. Musicians and producers can even use generative AI as a tool for inspiration, generating musical ideas that they can further develop into complete compositions.

3. Natural Language Processing:

In the realm of conversational AI, generative models have enhanced the capabilities of chatbots and virtual assistants. By training on large datasets of human interactions, these models can generate contextually relevant and engaging responses in natural language. This leads to more lifelike and productive interactions with users. Beyond customer support and information retrieval, generative AI is also utilized in applications like generating personalized emails, writing reports, and even crafting creative writing pieces, leveraging its ability to mimic human language patterns.

4. Text Generation:

Generative AI’s capacity to generate text based on input data has practical applications in content creation. Websites, blogs, and social media platforms can benefit from automatically generated articles, posts, and captions. News agencies can use generative models to quickly produce summaries of breaking stories or detailed reports. E-commerce platforms can automate the generation of product descriptions, enhancing the shopping experience for customers. This use case streamlines content production and frees up human resources for more strategic tasks.

5. Speech Synthesis:

Generative models play a pivotal role in text-to-speech (TTS) systems and voice assistants. By converting written text into synthesized speech, these models offer natural-sounding vocal interactions. They have applications in accessibility, helping visually impaired individuals access textual content through audio. Voice assistants powered by generative AI are increasingly integrated into smart devices, providing users with hands-free access to information, entertainment, and control over their environments.

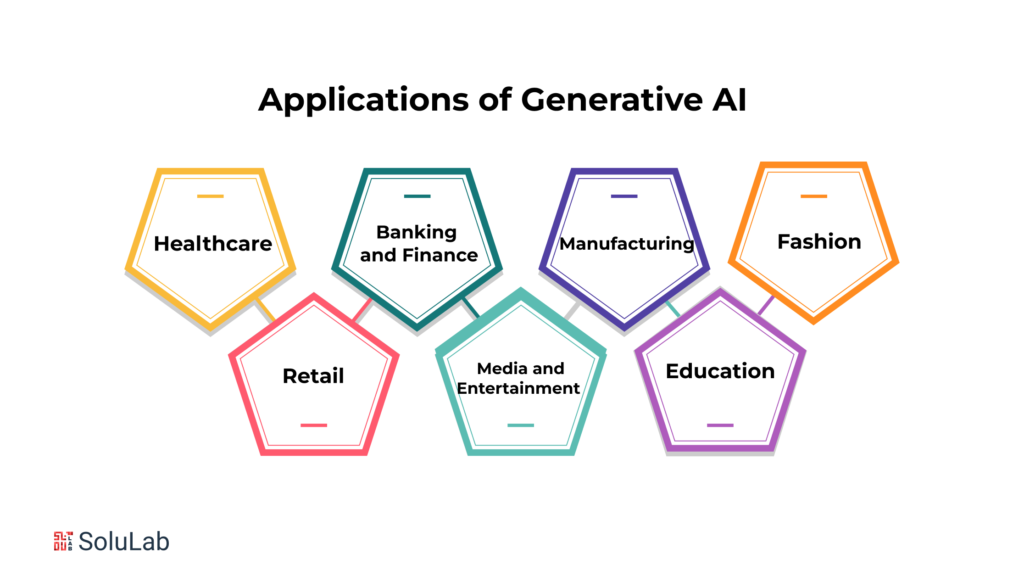

Generative AI Applications in Various Industries

Generative AI can transform many industries by providing new opportunities for innovation and growth. Here are some of the most promising applications of generative AI in various industries:

1. Healthcare:

In healthcare, generative AI tools can be used in drug discovery and development, predicting disease outcomes, and medical imaging analysis. By analyzing large amounts of medical data, generative AI can identify potential drug candidates or predict how patients respond to different treatments. In addition, generative AI can analyze medical images, such as X-rays and MRIs, to help doctors diagnose diseases and conditions more accurately.

In addition, generative AI can be used in genetic research to model how gene expression changes in response to specific genetic modifications. This could accelerate the development of gene therapies and improve treatment by predicting which therapy a patient is most likely to respond to.

2. Retail:

In the retail industry, generative AI tools can be used in product design, creating personalized customer experiences, and predicting product demand. By analyzing customer data, generative AI can help retailers create more personalized and engaging shopping experiences, such as recommending products based on a customer’s purchase history. In addition, generative AI can help retailers predict demand for products more accurately, enabling them to optimize inventory levels and avoid stockouts.

3. Banking and Finance:

In the banking and finance industry, generative AI can be used in fraud detection, risk assessment, and portfolio optimization. By analyzing financial data, generative AI can detect patterns that indicate fraudulent activity or identify potential risks in a portfolio or account. In addition, generative AI can help financial institutions optimize their investment portfolios, maximizing returns while minimizing risk.

4. Media and Entertainment:

In the media and entertainment industry, generative AI tools can be used in film and game design, music composition, and personalized content recommendations. By analyzing data on customer preferences, generative AI can help create more engaging and personalized content, such as recommending movies and TV shows based on a user’s viewing history. In addition, generative AI can be used to create new music or game levels, providing unique and engaging experiences for users.

5. Manufacturing:

Generative AI can be used in product design, process optimization, and predictive maintenance in the manufacturing industry. By analyzing data on product performance, generative AI can help manufacturers optimize product design, improving functionality and reducing costs. In addition, generative AI can help manufacturers optimize their production processes, minimizing waste and improving efficiency. Finally, generative AI can be used to predict equipment failures, enabling manufacturers to perform maintenance before a breakdown occurs.

6. Education:

In the education industry, generative AI can be used in adaptive learning, educational content creation, and student engagement. By analyzing data on student performance, generative AI can help educators create more personalized learning experiences, adapting the curriculum to each student’s needs. In addition, generative AI can be used to create educational content, such as quizzes and games, that engage students and reinforce learning.

7. Fashion:

Generative AI can help designers create new clothing designs using its image generation capabilities. Such tools can draw on large amounts of fashion design data and keep track of evolving trends to analyze customer requirements and propose designs accordingly.

Moreover, generative AI-powered tools can help designers generate images of virtual fashion models wearing their creations. This can eliminate tedious, time-consuming, and costly photoshoots, allowing designers to move forward with the product launch sooner.

Opportunities and Ethical Advancements

Generative AI stands at the forefront of technological innovation, poised to revolutionize countless industries, while also embracing numerous prospects for growth. As we embark on this journey, it is essential to acknowledge certain limitations and uphold ethical considerations, ensuring a path of responsible development and utilization.

While generative AI’s potential is vast, it does require substantial amounts of data for model training. Recognizing this, efforts are underway to overcome challenges, particularly in sectors where data accessibility is limited. Additionally, the refinement of training data quality remains pivotal, as it profoundly influences the accuracy and dependability of the model’s outcomes.

Another aspect to address is the interpretability of these models. Unlike conventional rule-based systems, understanding the inner workings of generative AI models can be intricate. The journey towards enhancing transparency in the decision-making process is ongoing, enabling the identification and rectification of errors and biases.

From an ethical and legal vantage point, the rise of generative AI brings forward crucial considerations that warrant attention to foster its responsible integration. Foremost among these concerns is the potential for bias within training data, which underscores the necessity for fair outcomes for all demographics. Additionally, the ability of this technology to craft convincing synthetic media calls for safeguards against potential misuses such as deceptive deepfakes and orchestrated disinformation campaigns.

The path ahead involves a comprehensive approach to surmounting these challenges and ethical concerns. Industry leaders are actively investing in research endeavors that aim to develop algorithms capable of mitigating biases in training data, concurrently enhancing the interpretability of the models. Companies are embracing responsible AI principles, prioritizing attributes like fairness, transparency, and accountability throughout the generative AI life cycle. Collaborations with regulators and policymakers are also underway to lay down guidelines and regulations, ensuring the technology’s ethical and prudent development and deployment. As we navigate this evolving landscape, the limitations we recognize become stepping stones toward a more ethically sound and transformative future powered by generative AI.

The Future of Generative AI

Generative AI is a rapidly evolving field of artificial intelligence that enables machines to create new content, such as music, art, and even text-based content like stories and articles. With recent advancements in machine learning algorithms, we can expect even more impressive feats from generative AI in the coming years.

One of generative AI’s most significant potential benefits is its ability to revolutionize content creation. As language models become more sophisticated, we can expect to see more natural and human-like text generated by machines. This could have significant implications for industries such as marketing, advertising, and content production, where the ability to produce high-quality, engaging content quickly and at scale is highly valued.

In addition, generative AI could also play a critical role in scientific research, enabling researchers to simulate complex phenomena and generate new hypotheses rapidly. With the ability to create vast amounts of data quickly, generative AI could accelerate scientific breakthroughs in fields like medicine, physics, and engineering.

Despite its potential benefits, generative AI also raises significant ethical concerns. For example, there are concerns about how the widespread use of generative AI could impact the job market and lead to increased unemployment. Additionally, there are worries about how generative AI could be used to create fake news or misinformation, leading to societal instability and mistrust.

To sum up, generative AI’s future is full of opportunities and challenges. As technology continues to evolve, we can expect to see more impressive feats of creativity and innovation from machines. However, ensuring that these advancements are developed and deployed ethically and responsibly is essential to avoid any unintended negative consequences.

Conclusion

In conclusion, generative AI is an exciting and rapidly evolving field of artificial intelligence with a vast range of applications. From creating music and art to generating text-based content, generative AI has the potential to revolutionize content creation and scientific research.

As we have explored in this beginner’s guide, generative AI is powered by advanced machine learning algorithms, NLP, LLMs, and AI models that enable machines to learn and mimic human creativity. However, with these advancements come significant ethical concerns, such as job displacement and the potential for misinformation.

As we move forward, it is important to continue to research and develop generative AI responsibly and ethically, to maximize the benefits while minimizing any negative consequences. With careful planning and collaboration between researchers, policymakers, and society as a whole, we can ensure that generative AI is developed and deployed in a way that benefits everyone.

SoluLab, a leading Generative AI development company, offers comprehensive services catering to diverse industries and business verticals. Their skilled and experienced team of Artificial Intelligence developers leverages state-of-the-art Generative AI technology, software, and tools to create custom solutions that address unique business needs. From improving business operations to optimizing processes and enhancing user experiences, SoluLab’s Generative AI solutions are designed to unlock new possibilities for businesses.

Their team of experts is well-versed in various AI technologies, including ChatGPT, DALL-E, and Midjourney. Businesses can hire the best Generative AI developers from SoluLab to produce custom, high-quality content that sets them apart from competitors. To explore these innovative solutions and take their business to the next level, interested parties can contact SoluLab today.

FAQs

1. What is the difference between generative AI and other types of AI?

Generative AI is a type of AI that can create new content, such as music, art, or text-based content, while other types of AI are designed to make predictions or classifications based on existing data, as in image recognition.

2. How does generative AI work?

Generative AI works by using advanced machine learning algorithms to analyze and learn patterns in existing data. It then uses this information to create new content that mimics human creativity.

3. What are some potential applications of generative AI?

Generative AI has a vast range of potential applications, including content creation, scientific research, and even video game development. It could also be used in areas such as personalized medicine, where it could be used to generate tailored treatments for individual patients.

4. Can generative AI be used to enhance cybersecurity?

Yes, generative AI can play a role in enhancing cybersecurity. It can be used to simulate and predict potential cyber threats, helping security experts identify vulnerabilities and develop effective countermeasures. Additionally, generative AI can assist in creating realistic phishing attack simulations, which can be used to train employees to recognize and respond to phishing attempts effectively.

5. How can generative AI enhance the creative process?

Generative AI can significantly enhance the creative process by providing artists, writers, musicians, and designers with novel ideas and inspiration. It can serve as a powerful tool to help creators overcome creative blocks and explore new artistic directions. By collaborating with generative AI, creators can push the boundaries of their imagination and discover innovative ways to express their artistic visions.