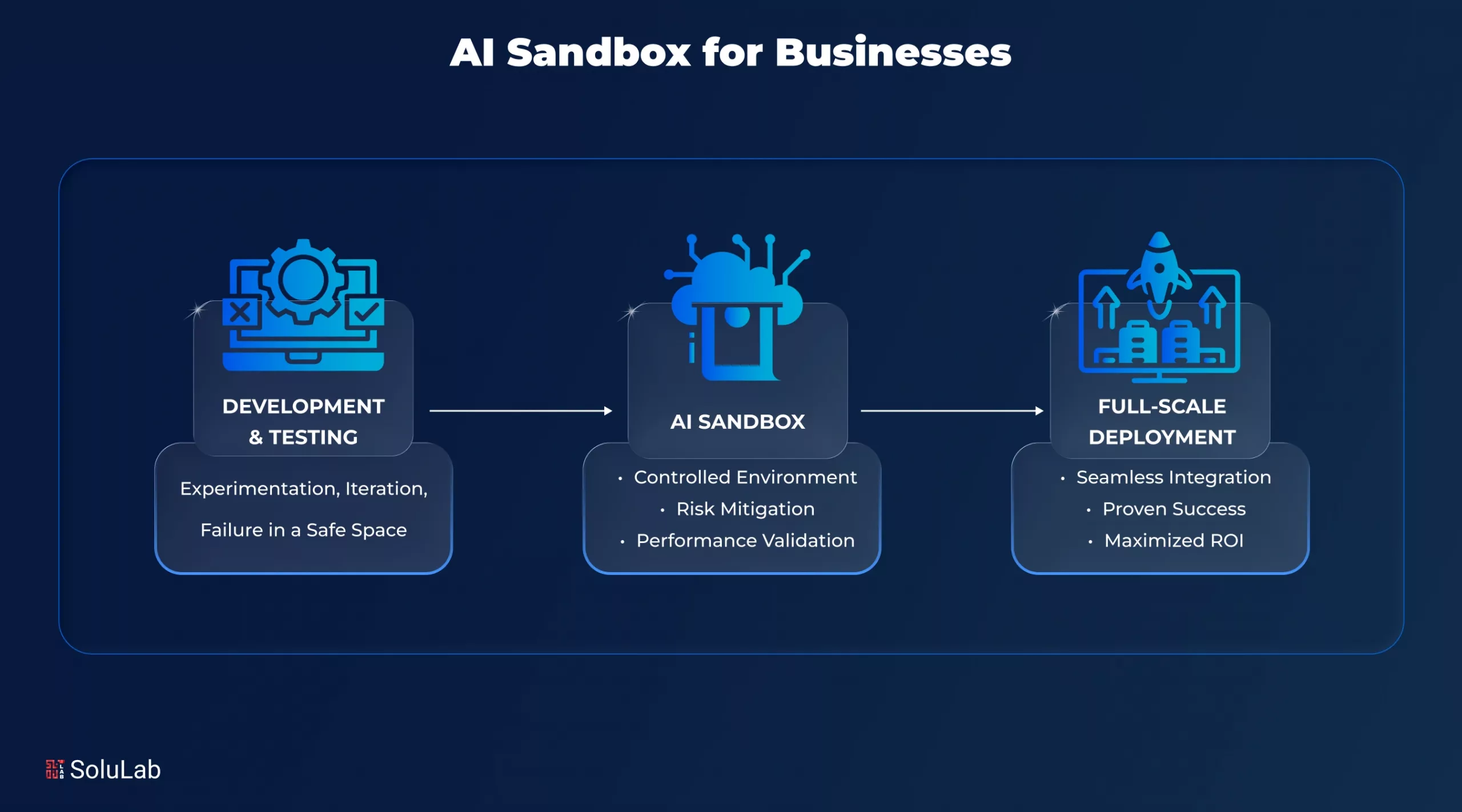

What if you could test-drive AI before letting it take the wheel of your entire business? Implementing AI can revolutionize operations, customer experiences, and decision-making, but jumping straight into full-scale deployment can be risky. Errors, unexpected behaviors, or integration challenges can cost time, money, and even credibility.

| In 2025, 66 AI and technology sandboxes are active worldwide, with 31 focused on AI innovation. |

Acting as a safe, controlled environment, an AI sandbox lets businesses experiment, validate, and fine-tune AI models before going live. In this blog, we’ll explore why using an AI sandbox is a smart move, how it minimizes risks, and how it ensures your AI deployment drives maximum impact from day one.

What Is an AI Sandbox?

An AI sandbox is a secure, isolated environment where businesses can test and validate AI models before they are deployed in real-world operations. It acts like a safe playground for AI, allowing enterprises to experiment without impacting live systems or risking sensitive data.

Unlike traditional testing setups, an AI sandbox lets businesses:

- Experiment safely with AI algorithms: Try new models, tweak parameters, and see results without production risk.

- Simulate real-world scenarios: Test how AI reacts to different data, customer behaviors, or operational challenges.

- Catch risks and issues early: Identify potential errors, biases, or failures before they affect business outcomes.

Using enterprise AI sandbox platforms, your data scientists, developers, and business teams can collaborate seamlessly. These platforms help you protect sensitive data, track AI performance, and ensure models meet both business objectives and regulatory standards.

When combined with AI app development solutions, sandboxes streamline the entire testing workflow, making your AI models deployment-ready faster and more efficiently.

Key Benefits of Using an AI Sandbox Before Deployment

Implementing AI across your business can be transformative, but jumping straight into full-scale deployment comes with risks. An AI sandbox provides a controlled testing environment where models can be evaluated safely. Here’s why it’s invaluable:

1. AI Risk Management & Compliance Protection

An AI sandbox supports thorough AI risk assessment and helps you demonstrate regulatory compliance. With EU AI Act penalties reaching up to €35M or 7% of global turnover, sandbox testing reduces exposure to costly fines. Only 6% of regulated enterprises have an AI-native security strategy, which makes pre-deployment validation essential.

2. Cost Efficiency & ROI Protection

AI projects cost $80,000–$190,000 initially, plus $5,000–$15,000 in annual maintenance. By catching production failures before they happen, sandbox testing protects that spend, with ROI that can exceed 9,000% and payback in 6–12 months. Sandboxes safeguard budgets for AI deployment solutions.

3. Failure Prevention

AI failure rates are high:

- 42% of companies abandoned AI projects in 2025

- Only 1 in 8 prototypes reach operations

- 80% of pilots fail due to data, scalability, or alignment issues

AI sandbox solutions for businesses catch issues early, saving time and money.

4. Enhanced AI Readiness

A sandbox helps with AI readiness checks, ensuring teams, processes, and infrastructure are prepared. Proper testing improves accuracy by 27% and reduces performance degradation, enabling smoother deployment of AI-powered solutions.

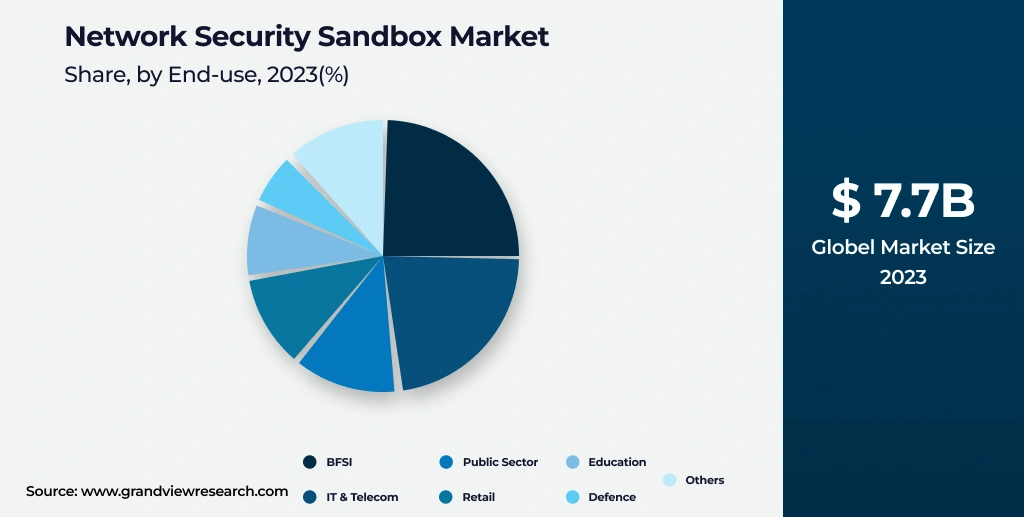

What Is the Current Market Context and Investment Reality for AI Sandboxes?

The AI sandbox market is growing fast, reflecting both the opportunities and risks of AI adoption. Consider these numbers:

- Network security sandbox market: $11.1B in 2024 to $140.1B by 2030 (52.5% CAGR)

- Cloud sandboxing market: $2.44B in 2024 to $7.75B by 2032 (15.5% CAGR)

- Analytics sandbox market: $2.5B in 2023 to $10.1B by 2032 (16.8% CAGR)
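As a quick sanity check on these figures, the compound annual growth rate (CAGR) can be recomputed from the start value, end value, and number of years. The sketch below assumes the standard CAGR formula and takes the year span from the dates quoted above; the function and variable names are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.15 means 15%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: (start in $B, end in $B, years between the two dates)
markets = {
    "network security sandbox": (11.1, 140.1, 2030 - 2024),
    "cloud sandboxing": (2.44, 7.75, 2032 - 2024),
    "analytics sandbox": (2.5, 10.1, 2032 - 2023),
}

for name, (start, end, years) in markets.items():
    print(f"{name}: {cagr(start, end, years):.1%}")
```

Each recomputed rate lands within about a tenth of a percentage point of the quoted CAGR, so the three projections are internally consistent.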

Despite this growth, only 26% of companies have the right capabilities to scale AI-powered solutions, while a staggering 95% of generative AI pilots fail to deliver business value.

This is where AI sandbox solutions for businesses become critical. They let you test AI in isolation, perform AI risk assessments, and refine models safely before full-scale deployment.

They help businesses reduce failure risks, save costs, and ensure better ROI from AI development solutions.

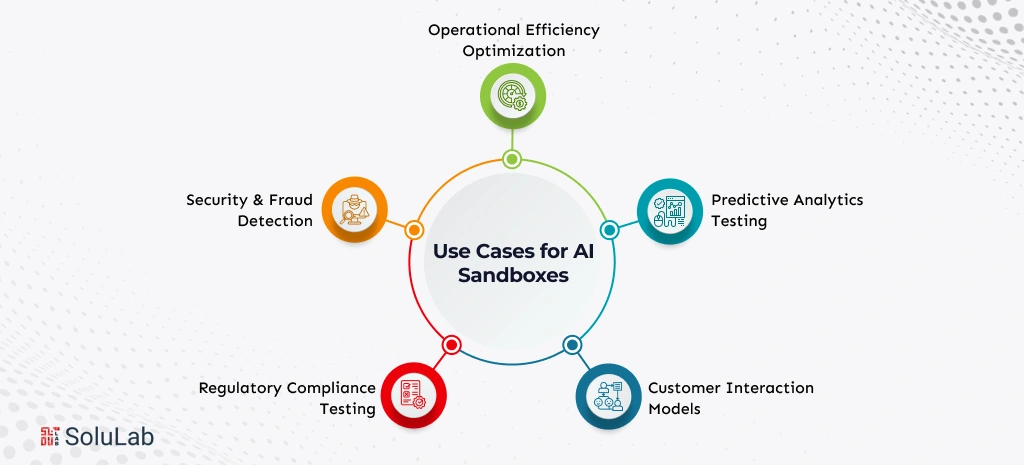

Common Use Cases for AI Sandboxes

1. Predictive Analytics Testing

Use an AI sandbox to test and fine-tune forecasting models before deploying them in real business operations. This ensures more accurate predictions and reduces costly errors in decision-making.
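One way to picture this kind of test is a walk-forward backtest: the model forecasts each held-out point using only the data that came before it, and the error is measured against what actually happened. The sketch below is a minimal illustration, assuming a simple moving-average forecaster and a made-up monthly demand series; none of the names or numbers come from a specific platform.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, as a fraction (0.05 means 5%)."""
    return sum(abs(a - f) / abs(a) for a, f in zip(actual, forecast)) / len(actual)


def moving_average_forecast(history, window):
    """Predict the next value as the mean of the last `window` observations."""
    return sum(history[-window:]) / window


def backtest(series, window, holdout):
    """Forecast each of the last `holdout` points from earlier data only."""
    forecasts = []
    for i in range(len(series) - holdout, len(series)):
        forecasts.append(moving_average_forecast(series[:i], window))
    return mape(series[-holdout:], forecasts)


# Hypothetical monthly demand figures, used only for illustration
demand = [100, 104, 98, 110, 107, 115, 112, 120, 118, 125]
error = backtest(demand, window=3, holdout=4)
print(f"Backtest MAPE: {error:.1%}")
```

In a sandbox, the same loop could compare several candidate models on identical hold-out data before any of them influences a real decision.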

2. Customer Interaction Models

Validate chatbots, recommendation engines, and other AI-powered customer tools in a safe environment. Testing in a sandbox prevents mistakes from affecting live users, enhancing customer experience and trust.

3. Regulatory Compliance Testing

Simulate scenarios to ensure your AI models comply with regulations like the EU AI Act. Sandboxes help identify compliance gaps before deployment, reducing the risk of fines up to €35 million.

4. Security & Fraud Detection

Stress-test AI security models and fraud detection systems safely. Sandboxes allow enterprises to identify vulnerabilities and improve model reliability without exposing real data.

5. Operational Efficiency Optimization

Test AI applications for logistics, HR, and manufacturing processes. Sandbox testing helps optimize performance, reduce bottlenecks, and improve overall operational efficiency.

Testing AI in sandboxes across these areas allows businesses to reduce risk, save costs, and deploy AI faster. For modern organizations, these use cases translate directly into safer, smarter, and more profitable AI adoption.

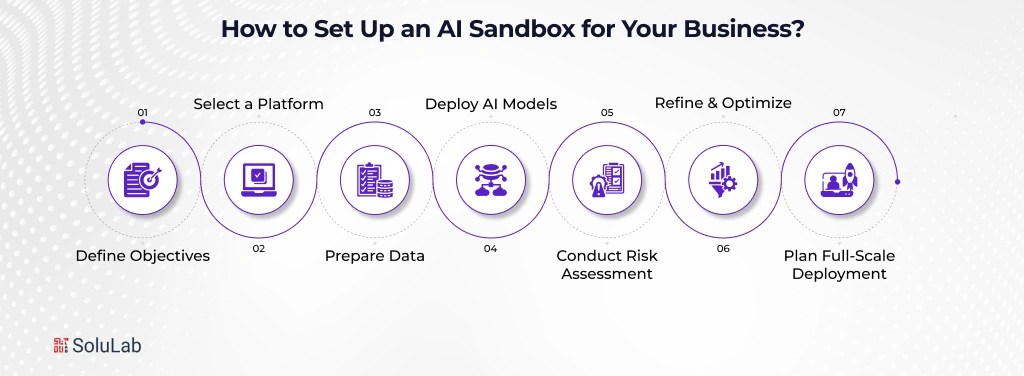

How to Set Up an AI Sandbox for Your Business?

Setting up an AI sandbox for your business doesn’t have to be complicated. By following these structured steps, companies can test safely, reduce risks, and ensure successful deployment. Here’s a practical guide:

1. Define Objectives

Start by identifying which AI models or business processes you want to test. Focus on high-impact areas such as AI-powered solutions, predictive analytics, or customer interaction models. Clear objectives ensure your sandbox tests are meaningful and directly tied to business goals.

2. Select a Platform

Choose an enterprise AI sandbox platform that fits your company’s size, security requirements, and regulatory needs. Platforms range from cloud-based solutions to on-premises multi-GPU infrastructure, typically costing $20,000–$50,000 for setup and $2,000–$5,000 annually for operations. The right platform ensures smooth testing and data protection.

3. Prepare Data

Use anonymized or synthetic datasets to replicate real-world conditions without risking sensitive information. This approach supports AI risk assessment and addresses the data issues that contribute to the 80% of AI pilots that fail.
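As a rough sketch of what this preparation step can look like, the example below drops direct identifiers, replaces a customer ID with a keyed hash, and generates a small synthetic dataset. The field names, the key, and the value distribution are all hypothetical. Keyed hashing is strictly pseudonymization rather than full anonymization, so treat it as a starting point, not a compliance guarantee.

```python
import hashlib
import hmac
import random

# Hypothetical placeholder; a real key would be stored outside the sandbox
SECRET_KEY = b"replace-with-a-secret-kept-outside-the-sandbox"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash: linkable, but not readable."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def anonymize_record(record, id_fields=("customer_id",), drop_fields=("name", "email")):
    """Drop direct identifiers and pseudonymize the remaining ID fields."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    for field in id_fields:
        if field in cleaned:
            cleaned[field] = pseudonymize(str(cleaned[field]))
    return cleaned


def synthetic_orders(n: int, seed: int = 0):
    """Generate reproducible fake orders with a plausible value distribution."""
    rng = random.Random(seed)
    return [
        {
            "customer_id": pseudonymize(f"synthetic-{i}"),
            "order_value": round(rng.lognormvariate(4.0, 0.5), 2),
            "region": rng.choice(["north", "south", "east", "west"]),
        }
        for i in range(n)
    ]


real = {"customer_id": 42, "name": "Ada", "email": "ada@example.com", "order_value": 59.9}
print(anonymize_record(real))
print(synthetic_orders(2))
```

Fixing the random seed keeps synthetic test runs repeatable, which makes it easier to compare model versions on the same data.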

4. Deploy AI Models

Implement your AI solutions using AI app development solutions. Whether it’s a recommendation engine, chatbot, or predictive model, deploying in a sandbox lets your team test functionality, accuracy, and reliability in a controlled environment.

5. Conduct Risk Assessment

Evaluate every AI model for potential failures, biases, or compliance gaps. With regulatory penalties reaching €35 million under the EU AI Act, this step is critical. Sandbox testing helps ensure AI risk management and prepares your business for safe production deployment.
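One simple bias check that fits this step is comparing how often a model returns a positive outcome for different groups, sometimes called the demographic parity gap. The sketch below assumes binary predictions and a single group attribute. The sample data and the 0.2 threshold are hypothetical, and a real assessment would use several metrics chosen with legal and domain input.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Difference between the highest and lowest group positive rates."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical sandbox output: model approvals for two customer segments
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

MAX_GAP = 0.2  # hypothetical tolerance agreed before testing
gap = demographic_parity_gap(preds, groups)
flagged = gap > MAX_GAP
print(f"Parity gap: {gap:.2f}, flagged for review: {flagged}")
```

Agreeing on the threshold before running the test helps keep the assessment honest, since the result cannot influence where the line is drawn.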

6. Refine & Optimize

Use insights from sandbox tests to improve model performance: adjust algorithms, raise accuracy, and close the gaps found during risk assessment. On average, sandbox testing can boost model accuracy by 27% and prevent costly failures, delivering a potential ROI of over 9,000%.

7. Plan Full-Scale Deployment

Once models are validated, transition to live operations using AI deployment services. A well-tested AI model ensures smoother integration, operational reliability, and faster business impact. Companies that skip this step risk production failures, wasted investment, and regulatory penalties.
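A lightweight way to make the move from sandbox to production explicit is a release gate: a list of checks that must all pass before a model is promoted. The sketch below is illustrative only; the metric names, values, and thresholds are hypothetical and would come from your own objectives and risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    name: str
    value: float
    threshold: float
    higher_is_better: bool = True

    def passed(self) -> bool:
        """Compare the measured value against its threshold."""
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold


def ready_for_production(checks):
    """Return (ok, failed_names); ok is True only if every check passes."""
    failures = [c.name for c in checks if not c.passed()]
    return (not failures, failures)


# Hypothetical sandbox results for one candidate model
checks = [
    Check("accuracy", 0.93, 0.90),
    Check("parity_gap", 0.08, 0.10, higher_is_better=False),
    Check("p95_latency_ms", 420.0, 300.0, higher_is_better=False),
]

ok, failed = ready_for_production(checks)
print("Promote to production" if ok else f"Hold back, failed checks: {failed}")
```

Here the model clears the accuracy and fairness checks but misses the latency target, so it stays in the sandbox until that is resolved.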

How Are AI Sandboxes Used in Businesses?

1. Harvard University – Academic AI Sandbox

Users: 50+ researchers and faculty

Challenge: Enable generative AI without risking IP/data leaks

Solution: Secure AI sandbox with GPT-3.5, GPT-4, Claude 2, PaLM 2; data isolated per user

Results:

- 6-week pilot launch

- Met strict data compliance standards

- Informed university-wide AI strategy

2. Global Educational Publisher – GenAI Sandbox

Timeline: 60 days

Challenge: Explore AI ROI without major upfront investment

Solution: GenAI sandbox on client systems, tested GPT-3.5/4 on proprietary educational content

Results:

- Identified automation for editorial workflows

- Built confidence for AI investment

- Established ongoing partnership for production solutions

3. UK FCA – Supercharged Sandbox

Launch: June 2025

Goal: Enable AI innovation across financial services firms

Solution: Partnership with NVIDIA, secure sandbox, direct FCA guidance

Impact:

- Accelerated AI adoption

- A more level competitive playing field for smaller firms

- Faster AI experimentation and innovation

Conclusion

With 95% of generative AI pilots failing and regulatory penalties reaching up to €35M, using an AI sandbox is no longer optional; it’s essential. A properly implemented sandbox safeguards billions in AI investments, ensures regulatory compliance, and empowers enterprises to confidently deploy AI-powered solutions.

At SoluLab, a leading AI development company, we help businesses design, implement, and optimize custom AI sandboxes, enabling safe experimentation, fine-tuning, and compliance assurance. By partnering with a Generative AI development company, organizations can transform their AI initiatives from risky pilots into reliable, scalable, and high-impact solutions.

Future-proof your AI deployments: test, learn, and scale safely with SoluLab’s AI sandbox solutions. Contact us now!

FAQs

1. Which AI sandbox solutions for businesses are best?

The best AI sandbox solutions for businesses provide secure environments, collaborative tools, and easy integration with enterprise AI sandbox platforms and AI deployment services. They make testing and validating AI fast, safe, and reliable.

2. How can AI risk management improve AI success?

AI risk management identifies potential model failures, compliance breaches, or operational disruptions early. By mitigating these risks in a sandbox, businesses increase the chances of successful AI deployment and protect investments.

3. How does an AI readiness check prepare businesses for deployment?

An AI readiness check assesses infrastructure, team skills, and processes. It ensures that AI initiatives are ready for full-scale deployment and reduces the likelihood of model failure, operational issues, or compliance risks.

4. What role do AI deployment services play with sandboxes?

AI deployment services help move validated AI models from the sandbox to production safely. They ensure scalable, compliant, and high-performing AI solutions, protecting both ROI and enterprise operations.

5. Is using an AI sandbox cost-effective?

Absolutely. Early testing prevents expensive mistakes, reduces trial-and-error costs, and speeds up deployment timelines, making AI initiatives more efficient and cost-effective.

6. How does SoluLab help businesses implement an AI sandbox?

SoluLab, a leading Generative AI development company, helps businesses design, implement, and optimize custom AI sandboxes. We provide end-to-end support, including safe experimentation, performance optimization, and compliance assurance, ensuring AI deployments are reliable and impactful.