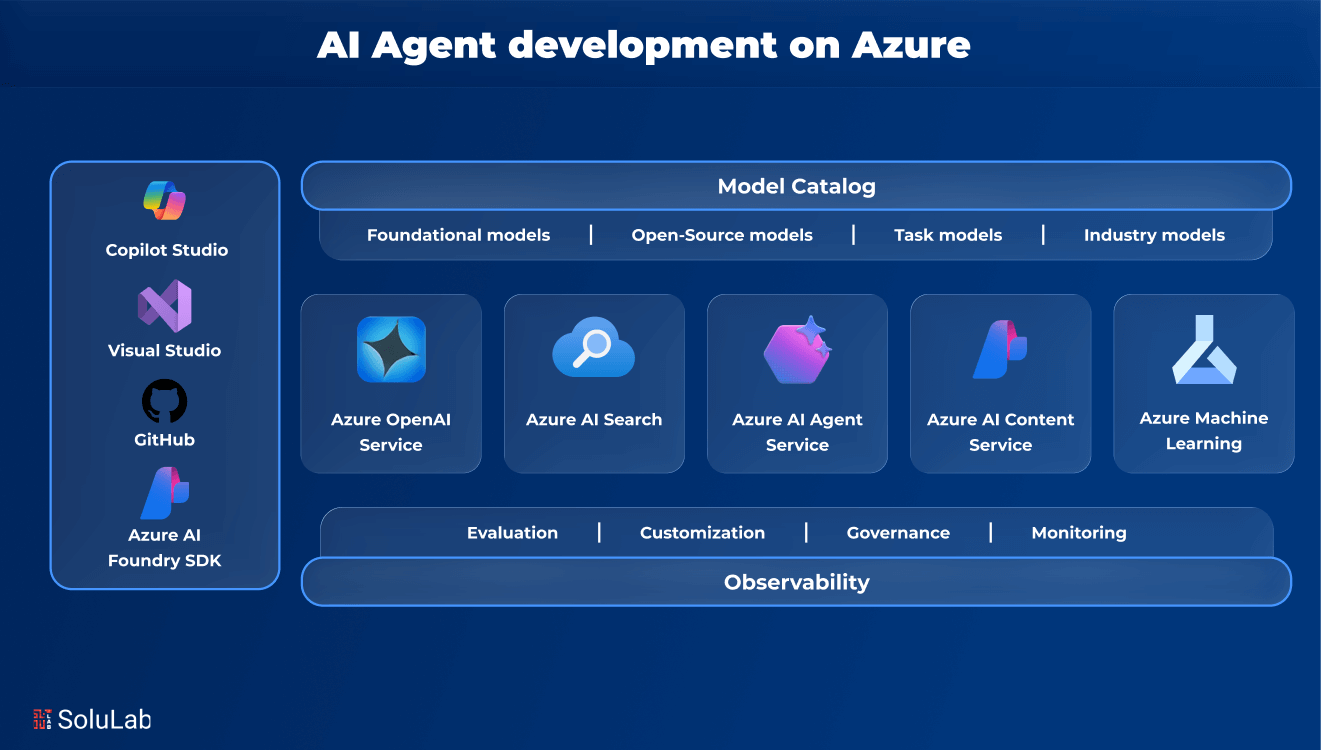

The rise of generative AI has transformed how developers approach application development. Instead of basic chatbots, teams are now building AI agents: systems that reason, take action, and solve problems. But how do you move from an idea to a real, secure, and scalable AI agent?

That’s where Azure AI Agent Service comes in. It gives developers the building blocks for powerful AI agents without having to manage infrastructure or orchestration themselves. From live web grounding with Bing Search to secure Python execution in a sandboxed Code Interpreter, its built-in tools cover the capabilities most agents need.

In this blog, we explore the core building blocks of AI agent development on Azure and show how to bring your ideas to life using JavaScript, Azure AI Foundry, and more.

Why Azure AI Agent Service Simplifies Agent Development

Traditional AI agent development involves managing orchestration, state, tools, and scaling manually. Developers often rely on frameworks like LangChain, AutoGen, or Semantic Kernel. Azure AI Agent Service replaces that complexity with a fully managed solution. It supports faster development, stronger security, and enterprise-grade scalability. With this managed service, teams can:

- Focus solely on writing intelligent agent logic while Azure handles orchestration, hosting, security, and scaling in the background.

- Leverage built-in tools like File Search, Bing integration, and Function Calling to expand your agent’s real-world capabilities instantly.

- Switch between different models seamlessly without modifying your backend architecture or redeploying your entire application setup.

This approach helps developers build faster, test smarter, and deploy at scale, all while ensuring reliability.

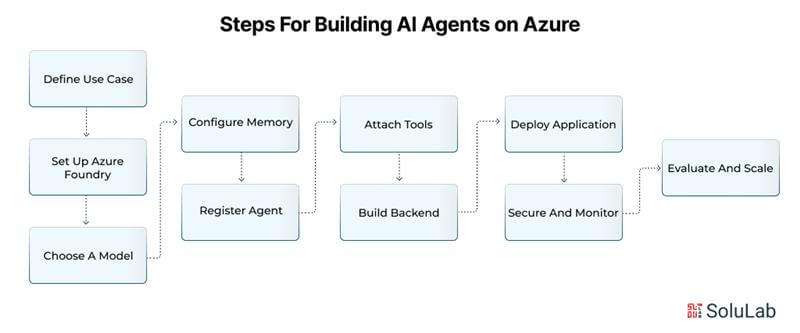

Step-by-Step Guide For Building AI Agents on Azure

- Define Use Case: Identify what your agent should achieve, such as customer support, task automation, or information retrieval.

- Set Up Azure AI Foundry: Create an Azure AI Foundry project to manage agents, tools, and model orchestration.

- Choose a Model: Use GPT-4o for advanced reasoning or GPT-4o-mini for faster, lower-cost interactions.

- Configure Memory: Select Cosmos DB for global storage, Redis for quick access, or AI Search for RAG and embeddings.

- Register Agent: Use the Azure AI Projects SDK to define agent behavior, connect models, and attach memory and tools.

- Attach Tools: Add Function Calling for actions, Code Interpreter for analysis, File Search for document access, and Bing Search for real-time data.

- Build Backend: Use Node.js (for example, with Express) to route user inputs to the agent, with an optional frontend such as React.

- Deploy Application: Choose App Service for web hosting, Container Apps for dynamic AI workloads, or AKS for full container control.

- Secure and Monitor: Use the GenAI Gateway in Azure API Management for traffic control, Azure AI Content Safety for input and output filtering, and Microsoft Defender for Cloud for threat protection.

- Evaluate and Scale: Monitor with Azure Monitor, test prompts using Prompt Flow, and scale as needed.
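The checklist above can also be captured as a typed blueprint, so a team can see at a glance which steps a draft agent still skips. This is a sketch under assumptions: the AgentBlueprint type, its field names, and the tool labels are hypothetical and are not the Azure AI Projects SDK’s own types.

```typescript
// Hypothetical planning type that mirrors the step-by-step checklist.
// None of these names come from an Azure SDK.
type ToolKind = "function" | "code_interpreter" | "file_search" | "bing_search";

interface AgentBlueprint {
  useCase: string;
  model: string; // name of a model deployment in your Foundry project
  memory?: "cosmos-db" | "redis" | "ai-search";
  tools: ToolKind[];
  deployTarget?: "app-service" | "container-apps" | "aks";
  safety: { contentSafety: boolean; gateway: boolean };
}

// Returns the checklist steps that are still missing or inconsistent.
function missingSteps(bp: AgentBlueprint): string[] {
  const gaps: string[] = [];
  if (bp.useCase.trim() === "") gaps.push("define use case");
  if (bp.model.trim() === "") gaps.push("choose a model");
  if (bp.memory === undefined) gaps.push("configure memory");
  if (bp.tools.length === 0) gaps.push("attach tools");
  if (new Set(bp.tools).size !== bp.tools.length) gaps.push("remove duplicate tools");
  if (bp.deployTarget === undefined) gaps.push("pick a deployment target");
  if (!bp.safety.contentSafety || !bp.safety.gateway) gaps.push("secure and monitor");
  return gaps;
}
```

For example, a draft that picks GPT-4o-mini, File Search, and AI Search memory but has no deployment target and no gateway would report "pick a deployment target" and "secure and monitor".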

Choosing the Right Models for Your AI Agent Development on Azure

The success of an AI agent depends heavily on model choice. Azure offers a wide model catalog to fit different needs, from small, fast models to complex multimodal ones. Here’s how to decide what fits best:

Models Based on Use Case

- Multimodal Reasoning (Text + Image): Use GPT-4o or vision-capable Llama models for tasks requiring combined input types

- Latency-Sensitive Applications: Choose smaller models like GPT-4o-mini for faster responses and cost efficiency

- Embeddings for Search or Classification: Use OpenAI’s text-embedding-3 models (small or large) or Cohere embeddings for semantic matching

- Image Understanding: Combine OpenAI’s CLIP model from the Azure model catalog with AI Search for image-based retrieval

- Advanced Reasoning: Use o1-preview for reflection-based problem-solving or o1-mini for streamlined reasoning

Each model offers strengths in performance, reasoning depth, or cost-efficiency. Azure’s Model Catalog lets developers experiment and switch as needed.
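One way to make switching models cheap is to keep the choice in a single routing function. The sketch below assumes hypothetical deployment names (they must match deployments you have actually created), and its priority order (images first, then deep reasoning, then latency) is an illustrative policy, not Azure guidance.

```typescript
// Describes what a request needs; fields are illustrative assumptions.
interface TaskProfile {
  needsImages?: boolean;
  needsDeepReasoning?: boolean;
  latencySensitive?: boolean;
  embeddingOnly?: boolean;
}

// Maps a task profile to a (hypothetical) deployment name.
function pickDeployment(task: TaskProfile): string {
  if (task.embeddingOnly) return "text-embedding-3-small";
  if (task.needsImages) return "gpt-4o"; // multimodal input
  if (task.needsDeepReasoning) return task.latencySensitive ? "o1-mini" : "o1-preview";
  if (task.latencySensitive) return "gpt-4o-mini";
  return "gpt-4o"; // general-purpose default
}
```

Because callers only pass a TaskProfile, swapping a model later means editing this one function rather than every call site.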

Storage Options for AI Agent Memory on Azure

Memory is a core feature of modern AI agents. Azure provides multiple storage tools to handle different data types:

- Azure AI Search: Best for semantic search, translation, OCR, and image analysis

- Cosmos DB: Ideal for frequently accessed data and global distribution needs

- Azure Cache for Redis: High-speed memory layer for low-latency access

- PostgreSQL with Graph Extensions: Supports graph-structured memory alongside relational data

- Azure Cosmos DB for MongoDB vCore: Offers fully managed vector search with document flexibility

These tools help agents store interaction history, retrieve relevant documents, and update knowledge graphs efficiently.
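A common way to combine a fast layer with a durable one is to write through to both on every new turn and read from the cache first. The sketch below is a minimal illustration under that assumption: the two in-memory Maps are hypothetical stand-ins for Azure Cache for Redis and Cosmos DB, and the injectable clock exists only to make the TTL behavior testable.

```typescript
// One message in a conversation thread.
interface Turn {
  role: "user" | "assistant";
  content: string;
}

class ConversationMemory {
  // Stand-in for Azure Cache for Redis (entries expire after ttlMs).
  private fast = new Map<string, { turns: Turn[]; expiresAt: number }>();
  // Stand-in for Cosmos DB (durable history).
  private durable = new Map<string, Turn[]>();
  private ttlMs: number;
  private now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  // Write-through: persist the turn durably, then refresh the cached copy.
  append(threadId: string, turn: Turn): void {
    const turns = [...(this.durable.get(threadId) ?? []), turn];
    this.durable.set(threadId, turns);
    this.fast.set(threadId, { turns, expiresAt: this.now() + this.ttlMs });
  }

  // Cache-aside read: serve a fresh cache entry, otherwise reload and re-cache.
  recent(threadId: string, limit: number): { turns: Turn[]; fromCache: boolean } {
    const cached = this.fast.get(threadId);
    if (cached !== undefined && cached.expiresAt > this.now()) {
      return { turns: lastN(cached.turns, limit), fromCache: true };
    }
    const turns = this.durable.get(threadId) ?? [];
    this.fast.set(threadId, { turns, expiresAt: this.now() + this.ttlMs });
    return { turns: lastN(turns, limit), fromCache: false };
  }
}

// Keeps only the most recent n turns so prompts stay within the context window.
function lastN(turns: Turn[], n: number): Turn[] {
  return turns.slice(Math.max(0, turns.length - n));
}
```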

Running Your AI Agent: Deployment Environments

Azure offers flexible deployment options depending on your workload and architecture:

| Requirement | Azure Solution |
| --- | --- |
| Web Apps | Azure App Service for CI/CD, load balancing |
| Serverless Compute | Azure Functions and Container Apps |
| Container Control | Azure Kubernetes Service (AKS) for orchestration |
| Communication | Azure Communication Services (voice, SMS, chat) |

Use App Service for typical web apps. Use Functions for event-driven tasks, and Container Apps for AI-specific workloads that benefit from serverless GPU support or dynamic sessions. AKS fits well with enterprise-scale deployments that need full control over containers.

Core Tools: Bringing Your AI Agent to Life With Azure Services

Azure AI Agent Service supports several key tools that enhance your agent’s reasoning and action-taking capabilities.

Key Tools and What They Enable:

1. Function Calling: Lets agents trigger APIs and workflows by invoking pre-defined functions

2. Code Interpreter: Runs Python code in a secure sandbox to process data and create visual outputs

3. File Search: Gives agents access to business files and documentation for more accurate answers

4. Bing Search: Connects agents to live web data for real-time updates and external knowledge grounding

These tools allow agents to not only think but also act intelligently based on context.
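To see how Function Calling turns reasoning into action, it helps to look at the backend side: the model returns a function name plus JSON arguments, and your code runs the matching function and hands the result back. The sketch assumes that call shape in simplified form; the get_order_status function and the registry are hypothetical examples, not part of any Azure API.

```typescript
// Assumed tool-call shape: a call id, a function name, and JSON-encoded arguments.
interface ToolCall {
  id: string;
  name: string;
  arguments: string;
}

interface ToolOutput {
  toolCallId: string;
  output: string;
}

type ToolHandler = (args: Record<string, unknown>) => unknown;

// Hypothetical business function exposed to the agent.
const getOrderStatus: ToolHandler = (args) => ({ orderId: args.orderId, status: "shipped" });

// Only functions registered here can be invoked by the agent.
const registry = new Map<string, ToolHandler>([["get_order_status", getOrderStatus]]);

// Executes each requested call and returns JSON string outputs for the agent.
function runToolCalls(calls: ToolCall[], tools: Map<string, ToolHandler>): ToolOutput[] {
  return calls.map((call) => {
    const handler = tools.get(call.name);
    if (handler === undefined) {
      return { toolCallId: call.id, output: JSON.stringify({ error: `unknown tool: ${call.name}` }) };
    }
    try {
      const args = JSON.parse(call.arguments) as Record<string, unknown>;
      return { toolCallId: call.id, output: JSON.stringify(handler(args)) };
    } catch (err) {
      // Bad JSON or a failing handler is reported back instead of crashing the run.
      return { toolCallId: call.id, output: JSON.stringify({ error: String(err) }) };
    }
  });
}
```

Returning errors as tool output, rather than throwing, gives the model a chance to correct its arguments on the next step.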

Practical Demo: Building AI Agents with JavaScript

During a recent developer session, participants learned how to build and deploy an AI agent using JavaScript and Azure. The architecture included:

- A terminal or frontend (like React) to interact with the agent.

- Node.js with Express or Fastify as a backend to handle API communication.

- Azure SDKs (@azure/ai-projects) to register, configure, and manage the agent.

- AI Foundry to centralize models, tools, and data.

The session walked through agent creation, tool integration, and execution, all with simple JavaScript commands.

Backend Setup: Node.js with AI Foundry Integration

In a typical AI agent app, the backend exposes APIs that forward user messages to the agent. The agent receives each task, reasons over it, and triggers tools as needed, while AI Foundry manages execution, monitoring, and security under the hood. With this setup, developers avoid manual orchestration and focus purely on logic.
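The backend’s side of that flow can be sketched as a loop that checks a run until it finishes, executing tools whenever the agent pauses for them. This is a simplified sketch: the AgentBackend interface and its method names are hypothetical stand-ins rather than the @azure/ai-projects API, and the status values are a reduced version of the run states used by Assistants-style agent APIs.

```typescript
// Simplified run states; real services define additional ones (e.g. cancelled, expired).
type RunStatus = "queued" | "in_progress" | "requires_action" | "completed" | "failed";

interface Run {
  id: string;
  status: RunStatus;
  pendingCalls: string[]; // tool requests awaiting execution (simplified to strings)
}

// Hypothetical client surface; map these onto whatever SDK calls you actually use.
interface AgentBackend {
  getRun(runId: string): Promise<Run>;
  submitToolOutputs(runId: string, outputs: string[]): Promise<void>;
}

// Drives a run to a terminal state, executing tools when the agent asks for them.
async function driveRun(
  backend: AgentBackend,
  runId: string,
  execute: (call: string) => string,
  maxPolls = 50,
): Promise<RunStatus> {
  for (let i = 0; i < maxPolls; i++) {
    const run = await backend.getRun(runId);
    if (run.status === "completed" || run.status === "failed") return run.status;
    if (run.status === "requires_action") {
      // The agent paused so the backend can run tools on its behalf.
      await backend.submitToolOutputs(runId, run.pendingCalls.map(execute));
    }
    // A production backend would wait between polls (for example, with backoff).
  }
  throw new Error(`run ${runId} did not finish after ${maxPolls} polls`);
}
```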

Tools That Make the Agent Smarter

These tools, integrated directly into Azure AI Agent Service, enable rich and secure interactions:

Highlighted Features

- Code Interpreter allows safe execution of dynamic code for analysis and insights.

- Function Calling links agents with APIs and enables action-triggering across systems.

- Azure AI Search enables semantic RAG capabilities directly in your agent.

- Bing Search grounds agents in real-time facts and trends from the web.

Each of these tools can be activated with just a few lines of code, dramatically simplifying development.


Advanced Security, Monitoring, and Quality Control for AI Agents on Azure

No AI application is complete without strong security, safety checks, and system monitoring. Enterprise-grade AI agents must be reliable, trustworthy, and protected against misuse. Azure offers a rich set of tools to ensure this from day one.

1. API Management with GenAI Gateway

This service provides a secure gateway between users and AI models. It manages token usage, monitors traffic, and supports semantic caching. You can route requests to multiple models and apply load balancing. Built-in rate limiting protects against overuse or attacks. GenAI Gateway also integrates smoothly with Logic Apps and Azure Functions for seamless tool execution.
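Token-based rate limiting is easier to reason about with a concrete model. In Azure API Management this is configured as a gateway policy rather than written by hand; the sketch below only illustrates the budgeting logic, assuming a fixed 60-second window per caller key. The class name and keys are hypothetical.

```typescript
// Illustrative fixed-window limiter: each caller key may consume at most
// tokensPerMinute model tokens per 60-second window.
class TokenLimiter {
  private windows = new Map<string, { start: number; used: number }>();
  private tokensPerMinute: number;
  private now: () => number;

  constructor(tokensPerMinute: number, now: () => number = Date.now) {
    this.tokensPerMinute = tokensPerMinute;
    this.now = now;
  }

  // Returns true and records usage if the request fits in the caller's budget.
  tryConsume(key: string, tokens: number): boolean {
    const t = this.now();
    let w = this.windows.get(key);
    if (w === undefined || t - w.start >= 60000) {
      w = { start: t, used: 0 }; // start a fresh window for this caller
      this.windows.set(key, w);
    }
    if (w.used + tokens > this.tokensPerMinute) return false; // a gateway would typically answer HTTP 429
    w.used += tokens;
    return true;
  }
}
```

Keying the budget per application or per user keeps one noisy client from exhausting the shared model quota.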

2. Azure Event Hubs and Service Bus

These services handle communication across components reliably. Event Hubs is ideal for telemetry and event streaming. It allows high-throughput data flows between agents and services. Service Bus supports message queuing with delivery guarantees. It is well-suited for workflows that need order and confirmation before processing continues.
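The delivery guarantees mentioned above come from how a queue treats failures. Service Bus provides these semantics natively (peek-lock receive, complete, abandon, and dead-lettering after a maximum delivery count); the in-memory class below is only a hypothetical model of that behavior to show why a poisoned message cannot block the pipeline forever.

```typescript
// A message plus the number of times it has been delivered.
interface QueuedMessage {
  id: string;
  body: string;
  deliveryCount: number;
}

// In-memory model of peek-lock style processing with a dead-letter list.
class LockingQueue {
  private pending: QueuedMessage[] = [];
  readonly deadLetter: QueuedMessage[] = [];
  private maxDeliveryCount: number;

  constructor(maxDeliveryCount: number) {
    this.maxDeliveryCount = maxDeliveryCount;
  }

  send(id: string, body: string): void {
    this.pending.push({ id, body, deliveryCount: 0 });
  }

  // Delivers the oldest message to handler; returns false when the queue is empty.
  processNext(handler: (body: string) => void): boolean {
    const msg = this.pending.shift();
    if (msg === undefined) return false;
    msg.deliveryCount++;
    try {
      handler(msg.body); // success: equivalent to completing the message
    } catch {
      if (msg.deliveryCount >= this.maxDeliveryCount) this.deadLetter.push(msg);
      else this.pending.unshift(msg); // abandon: put it back for redelivery
    }
    return true;
  }
}
```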

3. Azure Monitor

Azure Monitor offers deep observability into application performance. You can track latency and error rates in near real time. It supports custom metrics, dashboards, and alerts. With built-in integrations, you can monitor AI agents alongside databases, APIs, and storage systems. This helps troubleshoot quickly and maintain high uptime.
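Averages hide slow outliers, so latency alerts are usually set on percentiles. The helper below is a small sketch of the nearest-rank percentile you might compute over recorded agent response times before publishing it as a custom metric; the function name and the sample values are illustrative.

```typescript
// Nearest-rank percentile: the smallest sample such that at least p% of samples are <= it.
function percentile(samplesMs: number[], p: number): number {
  if (samplesMs.length === 0) throw new Error("no samples");
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100); // 1-based rank
  return sorted[Math.min(Math.max(rank, 1), sorted.length) - 1];
}
```

With ten samples where one response took 400 ms and the rest stayed under 200 ms, the median stays low while the 95th percentile surfaces the slow call, which is what an alert should catch.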

4. Azure AI Content Safety

This tool scans both input and output data from your AI agents. It detects harmful content in text and images, such as violence, hate speech, or sensitive material. It supports custom safety categories and thresholds. Prompt Shields are also included to guard against prompt injection attacks. Together, they help prevent misuse or accidental generation of unsafe content.
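Custom thresholds usually come down to comparing per-category severity scores against a policy. The sketch assumes a result shape modeled on Content Safety’s text analysis (a list of harm categories, each with a numeric severity where higher is worse); verify the exact field names for your API version. The threshold values are illustrative policy choices, not service defaults.

```typescript
// Assumed result shape: one entry per harm category with a numeric severity.
interface CategoryResult {
  category: string;
  severity: number;
}

// Illustrative per-category blocking thresholds (your own policy, not defaults).
const thresholds: Record<string, number> = { Hate: 2, SelfHarm: 2, Sexual: 4, Violence: 4 };

// Returns the categories whose severity meets or exceeds the configured threshold.
function blockedCategories(results: CategoryResult[], limits: Record<string, number>): string[] {
  return results
    .filter((r) => r.severity >= (limits[r.category] ?? 2)) // unknown categories use a strict default
    .map((r) => r.category);
}
```

Running the same check on the user’s input and on the agent’s draft answer lets the backend refuse or regenerate before anything unsafe reaches the user.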

5. Microsoft Defender for Cloud

This service offers advanced protection tailored for AI workloads. It detects suspicious behavior, data exfiltration attempts, and poisoned model interactions. Defender integrates with GenAI Gateway and AI Content Safety. It provides real-time alerts, policy enforcement, and threat analytics. You also get protection against identity misuse and data leakage during AI operations.

6. Prompt Flow for Evaluation

Prompt Flow supports continuous testing and performance evaluation of prompts and workflows. Developers can track changes, measure accuracy, and monitor safety responses over time. This tool is essential for refining prompts before and after they reach production and for ensuring the agent behaves as expected across various scenarios.
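The core idea behind batch evaluation is simple: run a fixed set of test inputs through the agent and score the answers, so every prompt change is measured against the same baseline. The sketch below is a minimal harness in that spirit, not Prompt Flow’s API; the agent is any async function, and the keyword check is a deliberately simple stand-in for richer metrics such as groundedness or relevance.

```typescript
// A test input plus phrases a correct answer must contain.
interface EvalCase {
  input: string;
  mustContain: string[];
}

// Runs every case through the agent and reports the pass rate and failing inputs.
async function evaluate(
  agent: (input: string) => Promise<string>,
  cases: EvalCase[],
): Promise<{ passRate: number; failures: string[] }> {
  const failures: string[] = [];
  for (const c of cases) {
    const answer = (await agent(c.input)).toLowerCase();
    const ok = c.mustContain.every((k) => answer.includes(k.toLowerCase()));
    if (!ok) failures.push(c.input);
  }
  const passRate = cases.length === 0 ? 0 : (cases.length - failures.length) / cases.length;
  return { passRate, failures };
}
```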

7. Managed Identity and Secure Access

Azure uses Microsoft Entra managed identities to handle secure connections between services. This means your AI agents can access databases, APIs, and other Azure services without storing credentials in code. It reduces risk and simplifies compliance.

This end-to-end security model ensures that agents are safe, compliant, and enterprise-ready.

Development Tips

- Use GPT-4o for tasks requiring advanced reasoning and image processing.

- Pair embedding models like text-embedding-3 with retrieval tools for effective RAG workflows.

- Pair Azure Cache for Redis with Cosmos DB for high-speed memory and reliable persistence.

- Start with Logic Apps for orchestration, then integrate with Function Calling for custom tools.

- Use AI Content Safety and GenAI Gateway together to enforce quality and protect against unsafe outputs.

These practices help balance capability, performance, and cost.

Quick Reference Guide for Azure AI Agent Components

| Component | Purpose |
| --- | --- |
| Azure AI Foundry | Central hub for models, tools, and controls |
| Function Calling | Enables real-world task execution |
| Code Interpreter | Runs dynamic scripts safely |
| AI Search + Embeddings | Powers semantic RAG and document insights |
| GenAI Gateway | Ensures safe and optimized API management |

Conclusion

Azure AI Agent Service gives developers the freedom to focus on what matters, creating smart agents that solve real-world problems. From model selection to memory design and secure deployment, Azure handles the hard parts. Developers can build, test, and scale without reinventing the wheel. With JavaScript or Python, from the terminal or UI, AI agents are now within reach.

Whether you’re developing a customer support tool or automating internal workflows, this platform helps bring AI-first apps to life quickly, securely, and reliably. But turning that vision into a reality still requires the right technical guidance and development expertise. That’s where SoluLab comes in: as a trusted AI agent development company, it specializes in building secure, scalable, and high-performing solutions on platforms like Azure.

Contact SoluLab today to get started with your custom AI agent development on Azure.

FAQs

1. What is Azure AI Agent Service?

Azure AI Agent Service is a fully managed platform for building intelligent AI agents. It handles orchestration, model integration, memory, and tool usage, so developers can focus on logic instead of infrastructure or scaling issues.

2. How are AI agents different from chatbots?

AI agents go beyond simple conversations. They reason over tasks, use tools, fetch external data, and perform actions, making them autonomous systems rather than just responsive bots.

3. What are the core tools used in Azure AI agents?

Key tools include Function Calling, Code Interpreter, File Search, and Bing Search. These tools help agents take action, analyze data, and access live or stored information during execution.

4. What other ways are there to build AI agents?

Besides Azure, developers use open-source frameworks like LangChain, AutoGen, or Semantic Kernel. These require more manual setup but offer flexibility. Azure simplifies this with enterprise-grade features and full-stack integrations.

5. How much does it cost to build an AI agent on Azure?

Costs vary by model usage, storage, compute, and tools. Azure offers pay-as-you-go pricing. Lighter apps using smaller models cost less, while complex agents with GPUs and storage may need larger budgets.
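For the model portion of the bill, a back-of-envelope estimate is just tokens times price. In the sketch below the per-1,000-token prices are parameters you would look up on the Azure pricing page for your model and region; no real prices are assumed, and storage, compute, and tool charges are deliberately left out.

```typescript
// Expected traffic and average tokens per request.
interface UsageProfile {
  requestsPerDay: number;
  inputTokens: number;
  outputTokens: number;
}

// Prices per 1,000 tokens, supplied by you from the current pricing page.
interface Pricing {
  inputPer1K: number;
  outputPer1K: number;
}

// Estimated model-token cost over the given number of days.
function monthlyModelCost(u: UsageProfile, p: Pricing, days = 30): number {
  const perRequest = (u.inputTokens / 1000) * p.inputPer1K + (u.outputTokens / 1000) * p.outputPer1K;
  return perRequest * u.requestsPerDay * days;
}
```

For instance, 1,000 requests a day with 2,000 input and 500 output tokens, at hypothetical prices of 0.001 and 0.002 per 1K tokens, costs 0.003 per request and about 90 over 30 days.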

6. Can I build an AI agent using JavaScript?

Yes. Developers can use JavaScript or TypeScript with Node.js and Azure SDKs. The backend communicates with Azure AI Agent Service, allowing seamless agent setup, tool usage, and secure interactions.