In 2026, artificial intelligence isn’t just a trend; it’s a business necessity. Companies in industries such as healthcare, fintech, logistics, and retail are using AI-powered apps to streamline operations, reduce costs, and stay competitive.

According to Statista, the global AI software market is projected to hit $126 billion this year, while McKinsey notes that organizations adopting AI at scale can boost productivity by up to 40%.

With this rapid adoption, a growing number of business leaders now ask, “How much does it cost to develop an AI app?”

This blog gives you clear, actionable insights into every cost factor, whether you’re planning a simple chatbot or a full-scale AI personal assistant app. We’ll break down the real AI app development cost in 2026.

Why Invest in AI App Development in 2026?

AI is a growth driver in 2026: according to Gartner, more than 80% of companies are expected to use AI solutions in some form. From reducing manual work to making faster decisions, businesses are turning to AI implementation services and AI integration services to stay ahead.

But AI apps today are much more than just chatbots. Thanks to generative AI development services and falling AI personal assistant app development costs, companies can now build smart apps that understand language, predict user behavior, and automate operations, all in real time.

Whether you’re in healthcare, finance, retail, or logistics, investing in AI app development helps your business improve efficiency, boost customer satisfaction, and cut costs.

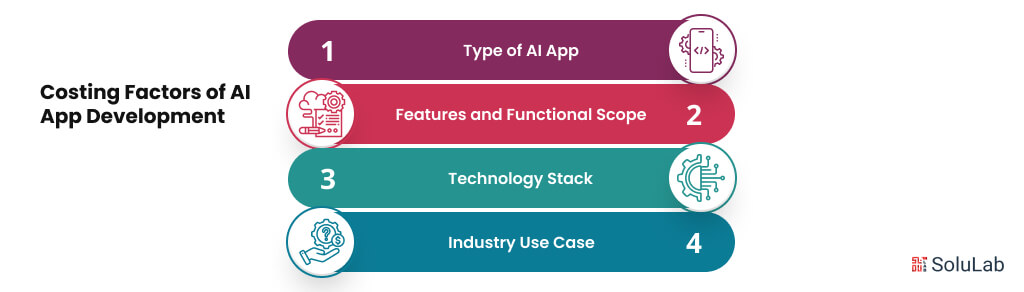

Key Factors and Features That Affect the Cost of AI App Development

When you’re planning to build an AI-powered app, knowing what drives the cost is essential. As a B2B company, you’re likely focused on performance, compliance, and long-term ROI. Below are the main things that shape the total AI app development cost:

1. Type of AI App

The kind of app you want to build plays a big role in its cost.

- Basic AI apps (like rule-based systems or recommendation engines) are cheaper and faster to build.

- Moderate AI apps use more advanced techniques such as Natural Language Processing (NLP), voice or image recognition, or basic predictions.

- Advanced AI apps involve adaptive systems, decision automation, or intelligent agents. These often require help from an expert AI agent development company.
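
To make the "basic" tier concrete, here is a minimal sketch of a rule-based system: a keyword router for a support bot. The intent names and keywords are hypothetical examples, not taken from any real product.

```python
# Hypothetical keyword rules for a support bot; intents and keywords are illustrative only.
RULES = {
    "billing": ("invoice", "refund", "payment"),
    "shipping": ("delivery", "tracking", "shipment"),
}


def route(message: str) -> str:
    """Return the first intent whose keywords appear in the message."""
    text = message.lower()
    for intent, keywords in RULES.items():
        if any(word in text for word in keywords):
            return intent
    return "handoff_to_human"  # no rule matched, so escalate


print(route("Where is my delivery?"))
```

There is no model to train here, which is why apps in this tier tend to be cheaper and faster to build. The trade-off is that every new phrasing needs a new rule.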

2. Features and Functional Scope

The number and complexity of features directly influence your AI app’s cost. Common AI app features include:

- AI-powered chatbot and virtual agents

- Voice or facial recognition

- Behavioral analysis and user personalization

- Integration with IoT or even blockchain systems

More features mean more time, testing, and budget.

3. Technology Stack

The tools and platforms you use matter a lot.

- Using ready-made models and platforms (such as OpenAI’s models or Google Vertex AI) reduces costs.

- Building your own models from scratch requires more budget.

- Running on cloud services like AWS or Google Cloud adds cost unless optimized well.

- Partnering with an experienced team that offers MLOps consulting services can help manage infrastructure and deployment costs smartly.
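
If you go with a ready-made model billed per token, a rough monthly estimate is simple arithmetic. The sketch below assumes a flat price per 1,000 tokens. The function name, traffic figures, and price are hypothetical placeholders, so substitute your provider’s published rates.

```python
def monthly_api_cost(requests_per_day: int, tokens_per_request: int,
                     usd_per_1k_tokens: float, days: int = 30) -> float:
    """Estimate the monthly spend on a pay-per-token model API."""
    total_tokens = requests_per_day * tokens_per_request * days
    return round(total_tokens / 1000 * usd_per_1k_tokens, 2)


# Placeholder inputs: 2,000 requests a day, 1,500 tokens each, $0.002 per 1K tokens.
print(monthly_api_cost(2000, 1500, 0.002))
```

Running the same function with your expected traffic at launch and at scale shows how quickly usage-based costs grow, and when optimization starts to pay off.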

4. Industry Use Case

Different industries have different needs, and that affects pricing, too.

- Sectors like finance and healthcare often need compliance, extra security, and data privacy, which increases the AI development cost.

- Other sectors like retail, logistics, or education are generally faster to build for.

Compliance, security, and privacy requirements raise the overall AI development cost but are necessary for safe deployment.

AI App Development Cost Breakdown by Category

If you’re planning to build an AI-powered app in 2026, here’s a simple look at how much it might cost based on the type of solution you choose.

| Type of AI App | Estimated Cost Range (USD) | Ideal For |
| --- | --- | --- |
| Basic AI App | $15,000 – $30,000 | Simple apps with limited AI logic |
| NLP-based Chatbot | $25,000 – $50,000 | Customer service, lead generation |
| AI Personal Assistant App | $35,000 – $60,000 | Virtual assistants for tasks or scheduling |
| Predictive Analytics App | $60,000 – $90,000 | Forecasting, demand planning, trend analysis |
| Custom Generative AI Application | $80,000 – $150,000+ | Content generation, Gen-AI chat, simulations |

These ranges depend on factors like:

- Project complexity

- Platform (iOS, Android, Web)

- Number of third-party integrations

- Advanced AI app features like Natural Language Processing (NLP), Predictive Analytics, or Generative AI
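
As a quick way to use the ranges above, the sketch below stores the table as a lookup and lists which app types have a starting estimate within a given budget. The function name and the sample budget are illustrative assumptions. The figures come straight from the table.

```python
from typing import Dict, List, Tuple

# (low, high) estimates in USD, copied from the table above.
COST_RANGES: Dict[str, Tuple[int, int]] = {
    "Basic AI App": (15_000, 30_000),
    "NLP-based Chatbot": (25_000, 50_000),
    "AI Personal Assistant App": (35_000, 60_000),
    "Predictive Analytics App": (60_000, 90_000),
    "Custom Generative AI Application": (80_000, 150_000),  # table lists "$150,000+"
}


def options_within(budget: int) -> List[str]:
    """Return app types whose low-end estimate is at or below the budget."""
    return [name for name, (low, _high) in COST_RANGES.items() if low <= budget]


print(options_within(40_000))
```

A match only means the low end of the range is reachable. Complexity, platforms, and integrations can still push a project toward the upper figure.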

For enterprises, a high-quality AI application solution is about more than features; it also has to deliver scale, security, and future-proofing. Choosing the right AI development company or partner can make a big difference in both cost and performance.

If you’re unsure where to start or how to estimate the cost for your project, it’s best to hire AI developers who specialize in enterprise-grade AI solutions.

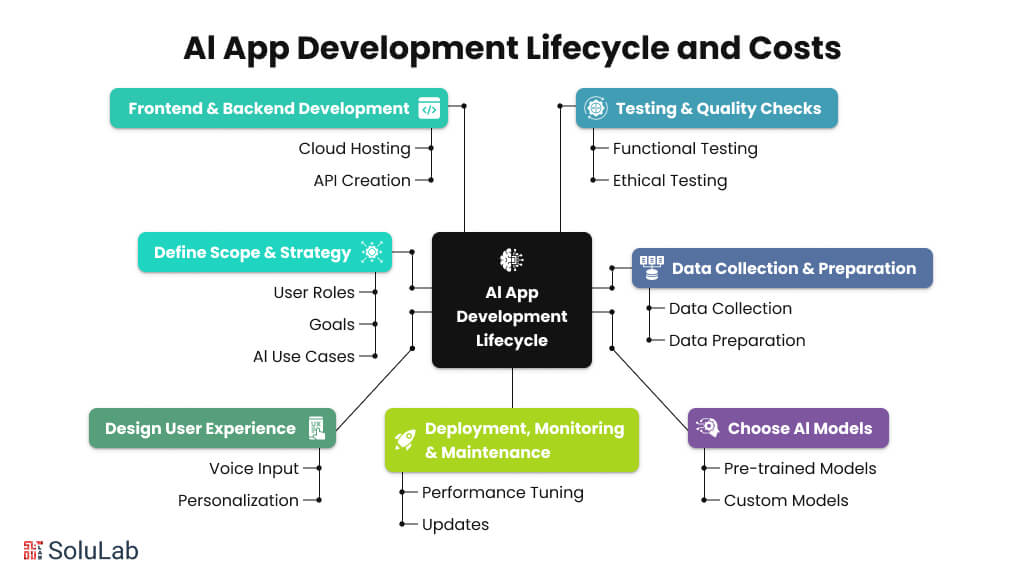

AI App Development Lifecycle and Associated Costs

To understand the total AI app development cost clearly, it’s important to break it down step by step. Each phase in the development lifecycle impacts your budget, and knowing where your money goes helps you plan smarter.

Here’s a breakdown of the key phases:

1. Define Scope & Strategy

Start by identifying user roles, goals, and the core AI use cases and applications. This ensures your app solves a real business problem and avoids unnecessary features.

2. Data Collection & Preparation

The heart of any artificial intelligence app is clean, labeled, structured data. This stage may require help from AI implementation services or in-house teams to collect and prepare the right training data.
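
A minimal sketch of what "clean, labeled, structured" can mean in practice: drop records with missing text or unknown labels, then remove duplicates. The field names (`text`, `label`) and the label set are hypothetical, so adapt them to your own data.

```python
def prepare(records, allowed_labels=("positive", "negative")):
    """Keep records with non-empty text and a known label, dropping duplicates."""
    seen = set()
    clean = []
    for rec in records:
        text = (rec.get("text") or "").strip()
        label = rec.get("label")
        if not text or label not in allowed_labels:
            continue  # unusable for training: empty text or missing/unknown label
        key = (text.lower(), label)
        if key in seen:
            continue  # same text and label already kept
        seen.add(key)
        clean.append({"text": text, "label": label})
    return clean


raw = [
    {"text": " Great app ", "label": "positive"},
    {"text": "great app", "label": "positive"},   # duplicate after normalizing
    {"text": "Crashes on login", "label": None},  # unlabeled
    {"text": "Too slow", "label": "negative"},
]
print(len(prepare(raw)))
```

Counting how many records survive this kind of filter early on gives a realistic picture of how much labeling work still needs to be budgeted.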

3. Choose the Right AI Models

Decide between using pre-trained models (like GPT or BERT) and building custom ones. This impacts speed, accuracy, and long-term cost. Custom training raises the AI development cost, but it can bring better ROI.

4. Design the User Experience

Great AI apps feel natural to use. UX design focuses on intuitive flows based on how users think, not just how the tech works. At this point, AI app features like voice input, personalization, or recommendations are mapped.

5. Frontend & Backend Development

This is where your mobile app development company or internal team builds the actual app. They’ll connect the AI engine, create APIs, and host the app in a secure cloud environment. You’ll also need to handle security and data compliance. For companies seeking scalability and cost efficiency, nearshore backend development can provide the technical expertise needed to manage integrations, optimize performance, and ensure compliance with data protection standards.

6. Testing & Quality Checks

Apps go through functional and ethical testing to ensure they work and don’t create bias or risk. This helps reduce risks during deployment and can even lower long-term AI mobile app development costs.
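
One simple bias check, sketched below, compares how often the model returns a positive outcome for each user group. This is only one of several possible fairness measures, and the group labels and sample data are hypothetical.

```python
from collections import defaultdict


def positive_rate_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values())


# Hypothetical test set: 1 = approved, 0 = declined, for two user groups.
preds = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rate_gap(preds, groups))
```

A large gap does not prove the model is unfair, but it is a cheap signal that the results deserve a closer look before launch.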

7. Deployment, Monitoring & Maintenance

Once live, the app must be monitored, fine-tuned, and updated regularly. Model performance can drift as real-world data changes, so performance tuning is ongoing. Many companies choose to partner with a trusted AI development company to handle updates and scale confidently.
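
Monitoring can start very simply. The sketch below flags a model for review when its recent accuracy falls noticeably below the level measured at launch. The function name, the 5-point tolerance, and the sample figures are assumptions for illustration.

```python
def needs_review(recent_outcomes, baseline_accuracy, tolerance=0.05):
    """Return True when recent accuracy drops more than `tolerance` below baseline.

    recent_outcomes: iterable of booleans, True when a prediction was correct.
    """
    outcomes = list(recent_outcomes)
    if not outcomes:
        return False  # nothing to judge yet
    accuracy = sum(outcomes) / len(outcomes)
    return accuracy < baseline_accuracy - tolerance


# Hypothetical week: 8 of 10 spot-checked predictions were correct, against a 92% baseline.
print(needs_review([True] * 8 + [False] * 2, baseline_accuracy=0.92))
```

A check like this can run on a schedule, so retraining or tuning work is triggered by evidence rather than by a fixed calendar.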

At any stage, you can hire AI developers to speed up the process, reduce risk, and ensure high-quality output.

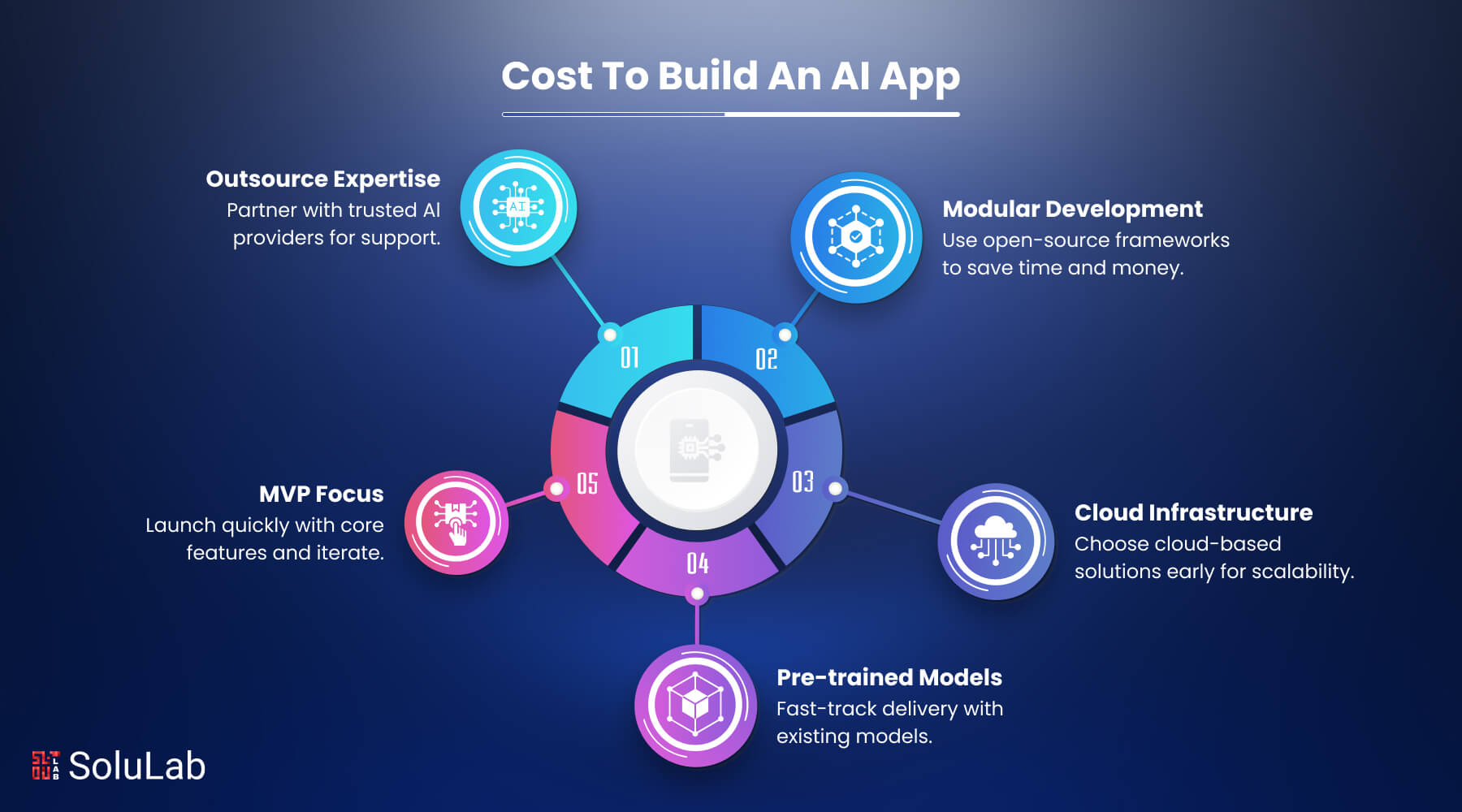

Strategies to Reduce AI App Development Costs

Are you concerned that the cost of your AI development might exceed your budget?

You’re not alone. Many businesses face the same challenge. But with the right approach, you can build a powerful AI app without overspending. Here’s how:

- Use modular development and open-source AI and ML frameworks, which saves both time and money.

- Pick cloud-based infrastructure early and use MLOps consulting services to automate workflows.

- Choose pre-trained models to fast-track delivery for most AI use cases and applications.

- Focus on core MVP features first. Launch fast, then improve over time.

- Outsource to a trusted AI application solutions provider with proven expertise.

Cutting costs doesn’t mean cutting value. It means making smart, scalable decisions that get your AI app to market faster and more efficiently.

Conclusion

By now, you have a clear idea of what affects the AI app development cost. You’ve seen how features, app complexity, and industry use cases all play a role. Whether you’re building a Generative AI-powered chatbot, an AI personal assistant app, or a smart prediction tool, choosing the right mobile app development company is key to your success.

At SoluLab, we specialize in helping enterprises plan and build custom artificial intelligence apps that are scalable, efficient, and built for real business impact. Our AI integration services are designed to support enterprise-grade apps from start to finish.

Contact us today to discuss your unique business idea, and we’ll power it with AI!

FAQs

1. How much does AI personal assistant app development cost today?

Building a personal assistant AI app, such as a smart scheduling tool or voice bot, costs between $50,000 and $150,000. The cost depends on how advanced your assistant is: simple apps are cheaper, but AI agents with NLP and voice capabilities need more development and testing.

2. Why does AI development cost vary so much across industries?

The AI development cost depends heavily on industry-specific use cases. For example, building AI for healthcare or finance often involves strict regulations and complex data, which means more time, security, and cost. In contrast, retail or HR apps may cost less due to simpler workflows.

3. Which artificial intelligence apps are the most cost-effective to build?

Apps like chatbots, content recommenders, and image classifiers are the most budget-friendly artificial intelligence apps. These use off-the-shelf models and are faster to build. More advanced tools like fraud detection or diagnostic engines require deeper AI expertise and bigger budgets.

4. How can I hire AI developers and manage the overall AI project cost?

To hire AI developers, work with an experienced AI development company that offers end-to-end support. Hiring a dedicated team ensures your project is scoped correctly, deadlines are met, and you’re not wasting budget on avoidable delays or poor architecture choices.