Let me be honest with you, most AI projects fail inside companies, far more than people think. And it’s not because the models are bad. It’s because the system around the model is broken.

A major collaborative study with NANDA and MIT in 2025 showed that 95% of generative AI pilots never reach production. That means only 5 out of 100 AI projects create any real business value.

And here’s the truth that many companies don’t hear enough: these failures have almost nothing to do with the model. MIT clearly stated that the 95% failure rate comes from flawed enterprise integration, not model performance. This is why enterprise AI consulting services, strong LLM security, and proper governance frameworks matter.

In this article, we will explain to you how to avoid becoming part of the 95% and how to turn AI from a hype expense into a real business asset. Let’s begin!

Key Takeaways

- 95% AI pilots don’t fail because the model was weak, but because security, governance, and integration were never thought through properly.

- Teams that build security, risk checks, and audits from day one move faster later and avoid shutdowns, rework, and public damage.

- With the right strategy and LLM security in place, AI stops feeling like a gamble and starts showing real business returns.

Common Risks & Threats in LLM Security for Enterprises Nobody Talks About

Before we talk about preventing AI projects from failing, we need to understand what actually breaks these systems. Most people only talk about technical attacks like prompt injection or data poisoning, but in real enterprises, failures happen because security, compliance, and governance were never built into the system from day one.

Here’s the truth no one talks about:

1. Invisible Data Leakage

This is the main problem we see in enterprise AI. Your team builds an AI chatbot connected to your CRM, ERP, or internal documents. It looks perfect in demo, but once real users start asking questions, the LLM begins revealing data it should never reveal.

This happens because your LLM security was not set up with strict boundaries. This is why you need a full LLM security assessment before launch. Without strong AI governance solutions, your smart AI becomes a data-leaking machine.

2. Prompt Injection Attacks

Prompt injection is now one of the biggest threats regulators are tracking. An attacker or even a curious employee can tell the model to ignore previous instructions and suddenly:

- Safety rules break

- Private data leaks

- API actions trigger

- Approval flows are bypassed

And this is scary, because it bypasses all normal security and compromises thousands of users’ data.

3. Unsafe Data Sources

Most enterprise LLMs connect to documents, APIs, websites, and RAG systems for decision making. Some of these sources are not under your control. Even attackers can hide malicious instructions inside a document. When your RAG pulls that file, the hidden text hijacks the model.

This already happened when Perplexity’s Comet AI browser was vulnerable to indirect prompt injection, allowing attackers to steal private data from normal-looking webpages.

This is why enterprises need strong AI risk management services to secure not just your AI but everything around it.

4. Compliance & Regulation Traps

If you operate in Europe, you can’t avoid compliance. You need HIPAA, SOC 2, GDPR, and industry-specific AI rules. But the real problem is that LLMs break old compliance systems because they store training data inside the model weights. Once sensitive data is trained into a model, you can’t simply delete it.

This is why companies need structured AI governance solutions before building anything. Just last year, the Italian government fined OpenAI €15 million for GDPR issues and unsafe content for minors. Imagine that happening to your brand just because your AI wasn’t governed properly.

5. The Governance Void

This is the silent killer as you launch a successful pilot, and everyone loves it. Then 10 departments want to use it in 10 different ways, but there’s no owner, approval flow, data access policy, monitoring, or audit trail.

This leads to chaos, which leads to a major incident, or the project gets shut down. Strong governance and AI implementation best practices keep LLM Security stable in production

How LLM Security Prevents AI Project Fails in Enterprise Environments?

Most teams treat LLM Security like an extra step. But in real enterprise AI, security is a core architecture. Think of how companies build normal enterprise systems: You don’t add login at the end or add encryption later, and you design the whole system with security from day one.

LLMs should be the same, but most companies still build first and secure later, which is risky and expensive and here’s the difference:

- Bad approach: Build the LLM → show a demo → panic → add security.

- Good approach: Design the security framework first → build everything inside it.

When you follow the good approach, AI security becomes an enabler. It lets you innovate fast without breaking compliance, data safety, or trust. This is also where strong and strategic enterprise AI development services matter. A real partner doesn’t just help you build features; they help you build secure, compliant, enterprise-ready features from day one.

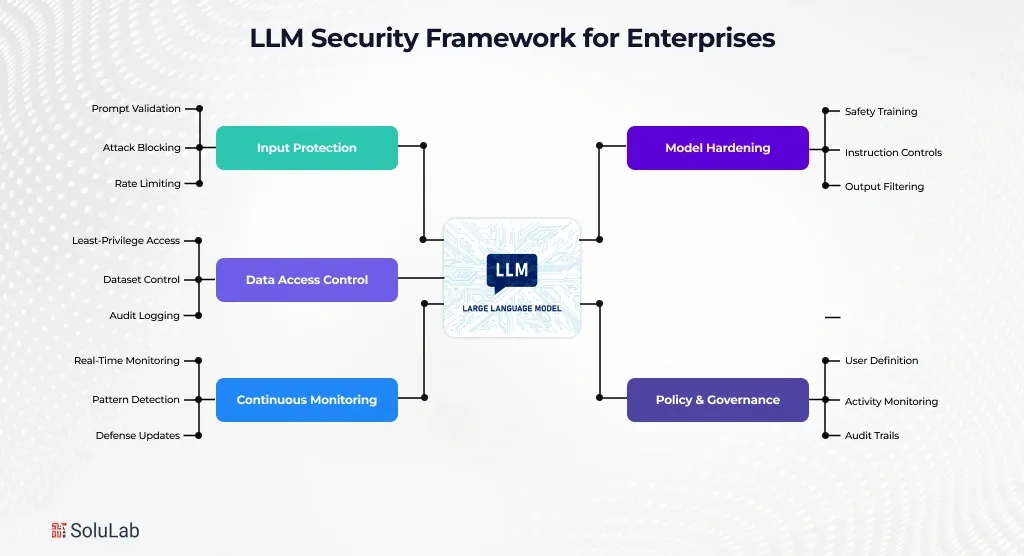

The Five Layers of LLM Security for Enterprises You Can’t Ignore

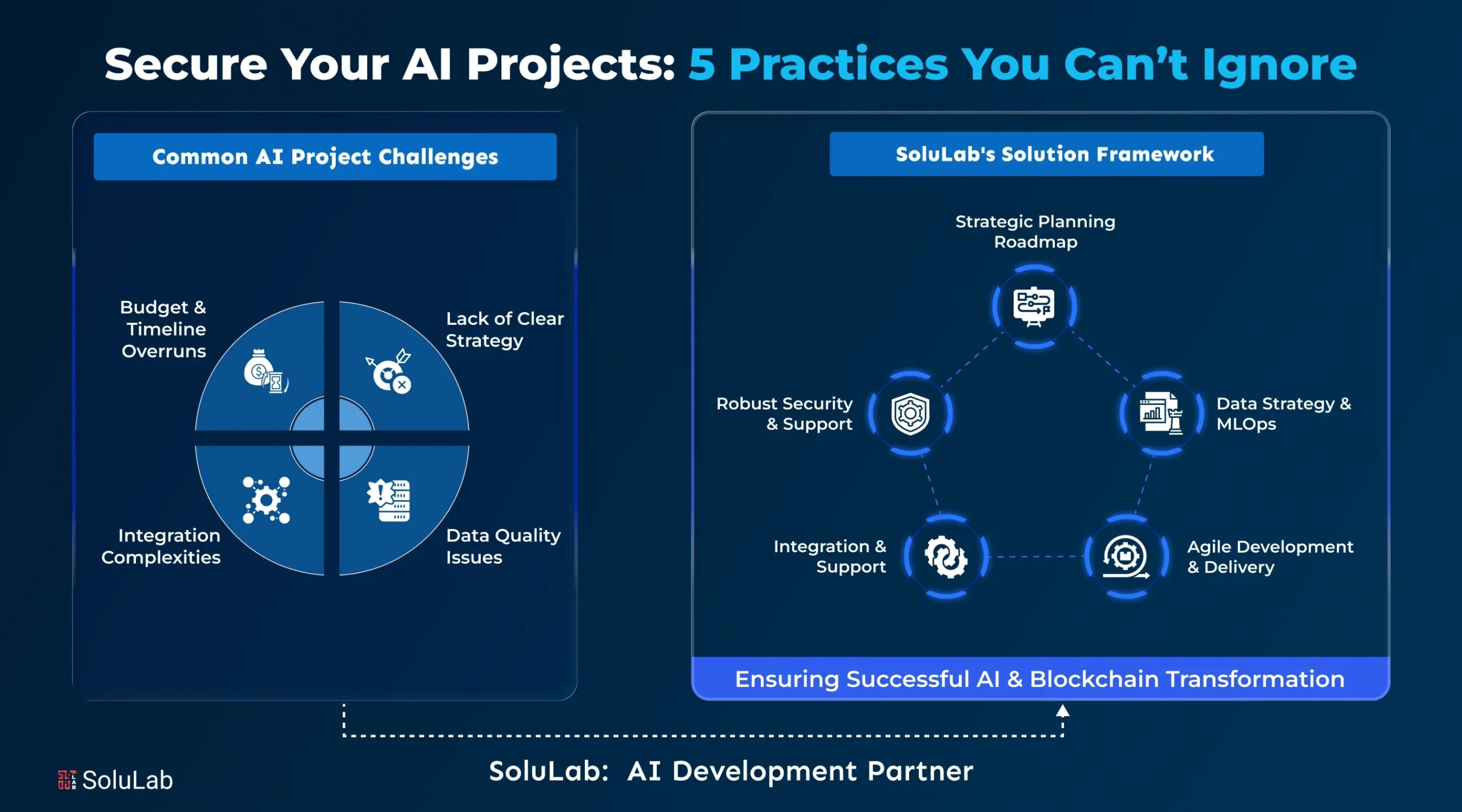

A strong LLM Security for Enterprises framework is built on five layers. Each layer protects a different part of the AI system, and together they create real enterprise-grade safety.

Layer 1: Input Protection

This is where all user prompts enter the system. If this layer is weak, everything else becomes super risky.

- Clean and validate every prompt before sending it to the model to have better output

- Block prompt-injection attacks like jailbreaks and malicious instructions

- Use rate limiting to stop spam, bots, and overload attacks

If attackers break the input layer, then they can force your LLM to reveal private data or behave unpredictably in front of users.

Layer 2: Model Hardening

This layer protects the model itself. Here is how it does-

- Fine-tune the model using safety training and enterprise-grade rules

- Add strict instruction-following controls so the model stays aligned and provides better output

- Use output filtering to stop the model from generating harmful, biased, or sensitive content

A hardened model reduces risk and gives you consistent and compliant responses every time.

Layer 3: Data Access Control

Your LLM should never have open access to all company data.

- Use least-privilege access, so the model only reads what it truly needs

- Control and limit which datasets, APIs, and documents it can pull from

- Log and audit every single data request for security and compliance to keep it safe.

This stops data leaks, unauthorized access, and accidental exposure of internal or personal information to attackers.

Layer 4: Policy & Governance

- This is where enterprise rules, compliance, and accountability live.

- Define who can use the system, and under what conditions

- Monitor activity and flag unusual or risky behavior

- Keep full audit trails for legal, compliance, and internal reviews for auditors

Enterprises need traceability, and Governance ensures that you always know who did what, when, and why.

Layer 5: Continuous Monitoring

Attackers evolve every day, so your AI system must evolve with them.

- Monitor model behavior and performance in real time

- Detect harmful patterns, strange outputs, or security risks

- Update defenses and patch vulnerabilities as new attack methods appear in Realtime

This layer keeps your system safe after deployment, which is where most companies actually get attacked.

This layered approach is what separates strong, safe enterprise AI from teams that only fix issues after a breach. And if one layer is missing, attackers will find it.

The Real Reasons Enterprise AI Projects Fail: LLM Security Gaps

Most AI development agencies do not deliver true LLM Security for Enterprises. And it’s not because they don’t care, it’s because they aren’t built for it. Here’s the real reason why-

Reason 1: Security Slows Down Their Sales Cycle

Most agencies want fast wins. A simple chatbot development typically takes 2–3 months, but incorporating proper AI security and governance solutions adds 1–2 extra months and increases the overall cost. So, they deploy quickly, show a fine-to-go demo, collect the payment, and since security doesn’t appear in a demo, they skip it.

Reason 2: Security Requires Skills Most Dev Teams Don’t Have

Developers are great at building features, but LLM security services require something else entirely, like threat modeling, compliance, security architecture, policy frameworks, and hiring people who understand this is hard and expensive.

So many agencies quietly pass the responsibility to you and say that we built the system, and security is the client’s problem.

Reason 3: Security Brings Liability They Don’t Want

Here’s what most teams will never say: If they claim full LLM Security for Enterprises and something breaks, leaks, or goes wrong, they’re liable. It’s safer for them to stop at– we delivered the product and avoid anything that looks like an LLM security audit or assessment.

How Market Leaders Use AI Governance Solutions to Secure Enterprise LLMs?

From these 2 cases you will learn how top companies use LLM security and enterprise-grade protections to avoid data leaks, and improve performance. This helps business owners understand the right way to build safe and scalable AI systems:

1. Slack AI

Slack works in enterprise communication, and their main challenge was adding AI features without breaking strict compliance standards like FedRAMP and HIPAA, while also making sure no customer data was ever put at risk.

What Slack Did?

- Data stays inside Slack’s AWS VPC with no external access

- No training on customer data, uses RAG only

- Closed AWS Escrow VPC for 3rd-party LLMs

- Auto monitoring for hallucinations and quality

- Full compliance: SOC 2, HIPAA, FedRAMP Moderate

Results in 6 Months

- +90% productivity

- 0 data privacy issues

- 100% audit pass

- FedRAMP maintained

Data isolation is greater than risky fine-tuning. Slack uses pre-trained models with RAG for maximum safety.

2. PepsiCo

PepsiCo wanted a scalable and fully secure RAG LLM system to improve customer interaction analysis without risking data leaks. Their main goal was to build an AI setup that protects sensitive product and customer data while still delivering fast insights across teams.

Security Framework

- Real-time SIEM monitoring to track risks instantly

- Regular penetration testing to check for hidden vulnerabilities

- Incident response plan for fast action during threats

- Role-based data access (RBAC) so only the right people can view sensitive info

- Encrypted data processing end-to-end for complete protection

Results

- Smooth, large-scale customer interaction analysis

- Zero security incidents

- Fully protected AI and RAG workflows

- Trusted by internal teams due to strong compliance

A setup like this shows how secure RAG systems can help big brands run AI safely, avoid data leaks, and get faster insights, something every growing enterprise needs today.

How SoluLab Handles LLM Security for Enterprises?

Phase 1: AI Readiness & Risk Mapping

We start by figuring out whether AI even makes sense for you right now. As most AI project failures come from rushing, we run a practical AI readiness assessment, look at your AI security posture, and talk to the people running real systems. From that, we map risks, fixes, costs, and timelines, which gives you a clear go or no-go decision and helps you avoid early AI fails.

Phase 2: AI Strategy & Governance Design

Once readiness is clear, we align leadership and teams, be it you partner with us or hire AI developer for your project. We help pick the right use cases, but also design simple AI governance solutions, who owns what, how data and models are used, and what rules actually matter. This creates a usable AI strategy and removes confusion that usually causes enterprise AI adoption challenges later.

Phase 3: Secure Architecture Design

With direction set, we design the system using LLM Security for Enterprises’ best practices. We map data flows, define integrations, and place security controls where they actually work. This result into is a clear architecture, which reduces delays, rework, or misalignment during the development phase.

Phase 4: Implementation with Built-In Security

When we start building, security isn’t something we park for later. It goes in from day one, as part of how the system works. That means validation, filtering, access controls, logging, monitoring, and SIEM integration, which are built into the core flow. This matters because retrofitting security later is one of the fastest ways AI projects fail once they hit production.

Phase 5: LLM Security Testing & Audit

Before anything goes live, we test it properly. We run a full LLM security assessment, followed by a structured LLM security audit, red-team testing, and compliance checks. This phase exists because assumptions break in real environments. The result is verified proof that your system is secure, along with documentation that holds up with regulators, partners, and enterprise clients, which becomes critical once AI moves beyond pilots.

Phase 6: Governance, Monitoring & Continuous Improvement

Once the system is live, the job isn’t done, and it never really is. Models drift, data changes, and risk profiles shift over time, which is where most teams get caught off guard. We train your people, set up monitoring dashboards and alerts, and continue with ongoing AI audit services. This way, some problems show up early, instead of turning into incidents no one saw coming. Over time, this keeps your AI systems secure and scalable.

Conclusion

Building AI the right way is about discipline. Most enterprises see AI fail because they rush in, but the ones that get it right slow down where it matters. They think through the strategy, treat LLM security as part of the core system, run audits early, and put governance in place before things scale. We follow proven implementation practices and keep risk in check as models evolve. That’s what turns AI from a gamble into something that actually delivers ROI and drives growth.

At SoluLab, a top LLM development company, that’s exactly how we work with teams. We help enterprises think clearly about AI before they build, then guide them through development, security, and governance so they don’t repeat the same mistakes others do through out enterprise AI consulting services. Whether it’s tightening risk, securing LLMs, or rolling out AI at scale, we focus on making AI work safely, efficiently, and in a way that actually makes business sense.

Want to get an AI readiness checklist to avoid AI failure in your organization? Contact us now!

FAQs

A lot less than a breach or fine. LLM security services usually cost 15-25% of your project’s cost. For a $500K project, they might have to spend $75-125K, far less than a single incident that could cost $5-50M. Security is insurance, but it can be expensive if you skip it.

Yes, every pilot eventually scales into production. Without proper governance structure, you face AI adoption challenges as more teams have to adapt it. Building governance from day 1 can make scaling smooth and safe

A good partner like SoluLab explains AI security, shows LLM security audit experience, and has a method for governance solutions. They usually plan readiness assessment services properly and prevent project failures with proven practices.

They treat AI like a feature instead of infrastructure. Without proper AI governance solutions, companies are going to fail. Proper planning with consulting services can prevent costly mistakes from happening.

Before writing any code. Your Security plan should begin with AI strategy consulting, readiness assessment, and risk management to prevent AI project failures.

You can, but it’s usually messy and expensive. Fixing LLM security after launch often means reworking architectures, retraining teams, and pausing deployments. Teams that handle security, governance, and risk early avoid downtime, that create new problems.