As the boundaries of artificial intelligence continue to expand, the ability to tailor language models to suit particular industries or specialized areas of knowledge has become increasingly important. Fine-tune GPT models offer a remarkable opportunity to elevate the quality and relevance of the generated text in niche domains. Whether you’re working in finance, healthcare, law, or any other specialized field, customizing language models can significantly enhance their performance, ensuring they generate contextually appropriate and accurate content.

This process involves training the GPT model on domain-specific data, allowing it to learn the intricacies and nuances of the particular subject matter. By following the steps outlined in this blog, you’ll gain the knowledge and skills needed to fine-tune GPT models for your chosen niche, unlocking their full potential and empowering your work. In this blog, we will guide you through the process of ChatGPT fine-tuning models, enabling you to leverage their immense potential and create powerful language models that are finely attuned to your specific domain.

Read Also: What is GPT?

What are the Advantages of the ChatGPT Fine-tuning analytics model?

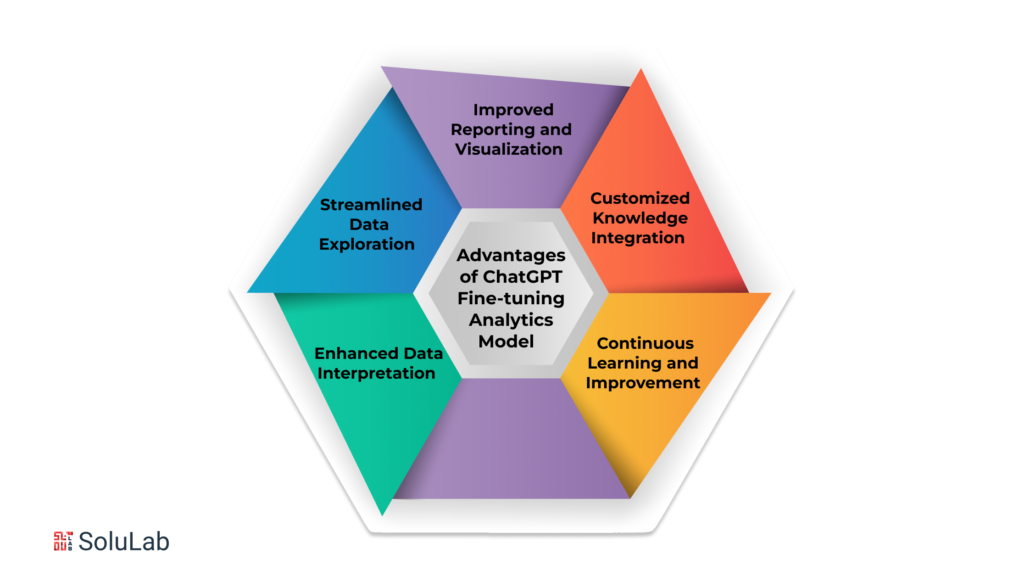

Fine-tune GPT model specifically for analytics is a game-changer in the world of data analysis and insights. By customizing these language models to understand and generate text in the context of analytics, professionals in the field can unlock a host of benefits. Let’s explore some of the advantages of ChatGPT fine-tuning models for analytics:

-

Enhanced Data Interpretation

Fine-tune GPT models can grasp the intricacies of data analysis terminologies, statistical concepts, and domain-specific jargon. This allows them to provide more accurate and insightful interpretations of complex data sets, helping analysts extract valuable information and draw meaningful conclusions.

Whether it’s deciphering patterns, identifying trends, or explaining statistical relationships, a fine-tuned ChatGPT model can become an indispensable assistant in the analytics workflow.

-

Streamlined Data Exploration

Analyzing vast amounts of data often involves exploring different variables, dimensions, and relationships. Fine-tune GPT model can be trained to understand specific queries related to data exploration, enabling analysts to obtain quick and relevant insights.

By asking questions or providing prompts, analysts can engage in a natural language conversation with the model, receiving instant responses that aid in data exploration, hypothesis testing, and decision-making.

-

Improved Reporting and Visualization

Generating comprehensive reports and creating visualizations that effectively communicate data-driven insights can be time-consuming. However, a fine-tuned ChatGPT model can assist in automating these tasks.

By describing analysis results, summarizing key findings, and suggesting appropriate visualizations, the model can accelerate the reporting process, freeing up time for analysts to focus on higher-level tasks and strategic analysis.

Read Our Blog: Top 10 ChatGPT Development Companies

-

Customized Knowledge Integration

Fine-tune GPT model for analytics allows the incorporation of domain-specific knowledge into the language model’s understanding. This means that the model can be trained on data from specific industries or business domains, enabling it to provide tailored insights and recommendations.

Whether it’s finance, marketing, healthcare, or any other analytics-driven field, a fine-tuned ChatGPT model can leverage the relevant expertise to deliver more accurate and contextually appropriate responses.

-

Continuous Learning and Improvement

Fine-tune GPT models can be updated and refined over time as new data becomes available or the analytics domain evolves. This iterative process ensures that the model stays updated with the latest trends and insights, continuously improving its performance.

By ChatGPT fine-tuning regularly, analysts can maintain a cutting-edge language model that adapts to changing data landscapes and supports accurate decision-making.

How to Fine-tune GPT Models for Specific Domains?

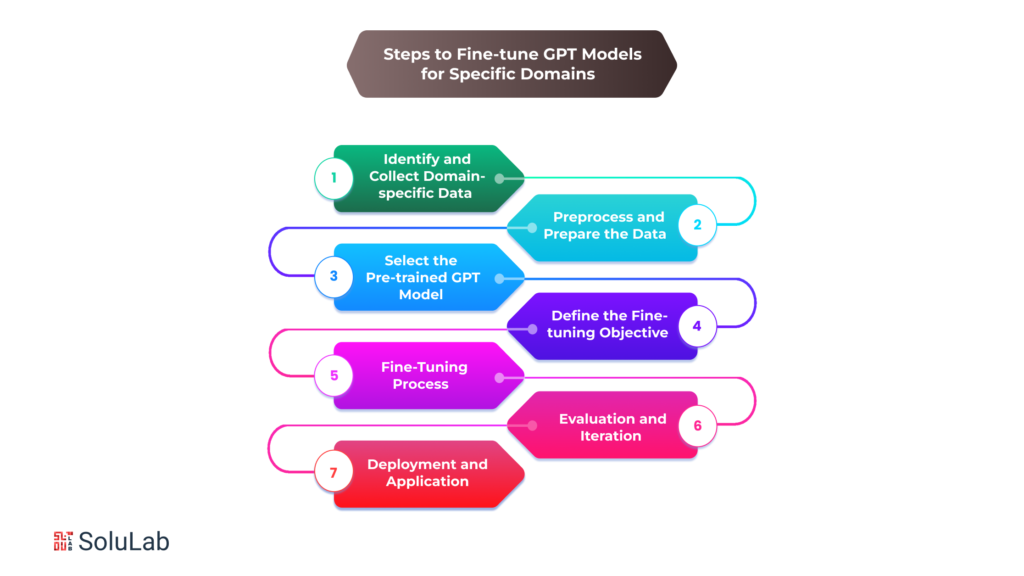

Fine-tune GPT model for specific domains is an invaluable technique that allows you to tailor the power of these language models to your specific needs. By training these models on domain-specific data, you can enhance their ability to generate contextually accurate and relevant content. In this article, we will walk you through the step-by-step process of fine-tuning the GPT model to ensure they perform optimally in your chosen domain.

1. Identify and Collect Domain-specific Data

The first step in fine tune GPT model is to gather a dataset that is specific to your target domain. This dataset should include text samples, documents, or any other relevant sources of information that capture the unique language and context of your domain. It is essential to have a diverse and representative dataset that encompasses the range of topics and scenarios encountered in your domain.

2. Preprocess and Prepare the Data

Once you have collected the domain-specific data, it’s crucial to preprocess and clean it before feeding it into the fine-tuning process. This involves removing any irrelevant or noisy data, correcting inconsistencies, and standardizing the format of the text. Additionally, you may need to annotate the data with labels or tags to assist the model in understanding the specific tasks or intents relevant to your domain.

3. Select the Pre-trained GPT Model

The next step is to choose an appropriate pre-trained GPT model as the starting point for fine-tuning. Several pre-trained models are available, such as GPT-3, GPT-2, or even the more recent GPT-4. Consider the size of the model, its performance on general language tasks, and its compatibility with the fine-tuning process. Selecting the right base model is crucial for achieving the desired results in your domain.

4. Define the Fine-tuning Objective

Before initiating the fine-tuning process, you need to define the specific objective or task you want the model to excel in your domain. This could be text generation, sentiment analysis, question answering, or any other specific task relevant to your use case. By clearly defining the objective, you can guide the fine-tuning process to optimize the model’s performance for that particular task.

Read Our Blog: How Does ChatGPT Help Custom Software Development?

5. Fine-tuning Process

The fine-tuning process involves training the selected pre-trained model on your domain-specific dataset. It’s important to strike a balance between underfitting and overfitting the model. Underfitting occurs when the model fails to capture the nuances of your domain while overfitting results in the model memorizing the training data and struggling with generalization.

During fine-tuning, you’ll typically use techniques such as gradient-based optimization, adjusting learning rates, and employing regularization strategies to achieve optimal results. Experiment with different hyperparameters, batch sizes, and training epochs to find the right balance for your specific domain.

6. Evaluation and Iteration

After fine-tuning the model, it’s crucial to evaluate its performance on a separate validation dataset or through other evaluation metrics specific to your domain. Analyze how well the model performs on the intended task, whether it captures the nuances of the domain-specific language, and whether it generates accurate and contextually appropriate outputs.

Based on the evaluation results, you may need to iterate and refine the fine-tuning process. This could involve tweaking hyperparameters, gathering additional data, or exploring alternative pre-trained models. The iterative process allows you to continually improve the model’s performance and align it more closely with the nuances and requirements of your domain.

7. Deployment and Application

Once you are satisfied with the fine-tuned model’s performance, it’s time to deploy and integrate it into your production systems or applications. Ensure that the infrastructure can handle the computational requirements of the model and that it is well-integrated into your workflow. Monitor the model’s performance and gather feedback from users to identify areas for further improvement or adaptation.

How to Choose the Appropriate Fine-tune GPT-3 Model Variant for Fine-tuning?

Choosing the appropriate fine-tune GPT-3 model variant is a crucial step in the process of customizing language models for specific domains. The GPT-3 model family offers several variants with different capabilities and sizes, each suited to different use cases and computational resources. To ensure the best results from fine-tuning, selecting the right model variant is essential. Here are some factors to consider when making this decision:

-

Model Size and Computational Resources

GPT-3 models come in various sizes, ranging from a few million to hundreds of billions of parameters. The size of the model affects both its computational requirements and its ability to capture complex relationships in the data. Smaller models may be more suitable for limited computational resources, such as CPUs or low-memory environments. In comparison, larger models can handle more extensive and diverse datasets, providing more nuanced insights.

-

Fine-tuning Objective

Consider the specific objective of fine-tuning. Are you aiming to generate coherent and contextually appropriate responses or extract detailed information from the text? For simpler tasks, a smaller GPT-3 variant may suffice, while more complex objectives may require a larger model with more parameters to capture intricate nuances and improve performance.

-

Domain Expertise and Vocabulary

Some GPT-3 variants are trained on specific domains, such as legal or medical texts, and are more adept at understanding and generating text in those domains. If your fine-tuning project focuses on a particular domain, selecting a variant that aligns with that domain can provide a head start in capturing relevant knowledge and understanding the specialized vocabulary.

-

Latency and Response Time

The size of the fine-tuned model affects the inference time required for generating responses. Larger models typically have longer response times due to their increased computational requirements. Consider the latency and response time constraints of your application or system, as this can impact the user experience and overall performance.

-

Data Availability and Task Complexity

Assess the availability and size of your domain-specific dataset. Larger models generally require more training data to generalize well. If you have a limited dataset, a smaller GPT-3 variant might be more appropriate to prevent overfitting. Conversely, if you have a substantial amount of diverse data, a larger variant can help capture a wider range of patterns and nuances.

-

Experimentation and Evaluation

It’s often beneficial to experiment with multiple GPT-3 variants to evaluate their performance on your specific task or domain. Test different models and compare their results in terms of text quality, coherence, and relevance to your domain. This empirical evaluation can provide valuable insights and help you make an informed decision.

How to Evaluate the Performance and Quality of the Fine-tuned Model?

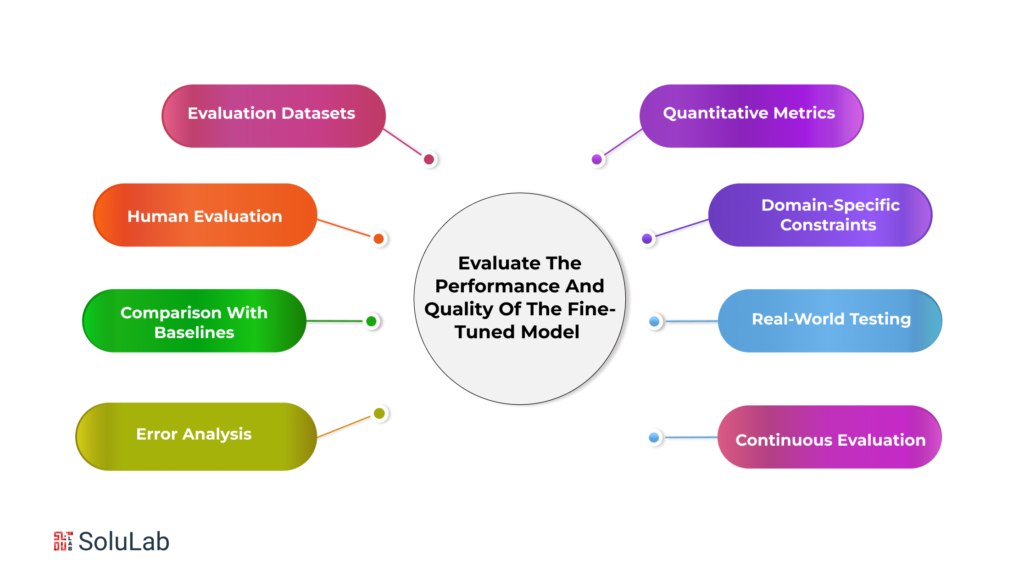

Evaluating the performance and quality of a fine-tuned model is a crucial step in assessing its effectiveness and determining its readiness for deployment. Here are some strategies and metrics to consider when evaluating the performance and quality of your fine-tuned model:

-

Evaluation Datasets

Prepare separate evaluation datasets that are distinct from the training data. These datasets should cover a wide range of scenarios and use cases within your domain. Ensure that the evaluation data represent the same distribution and characteristics as the real-world data the model will encounter during deployment.

-

Quantitative Metrics

Utilize quantitative evaluation metrics to measure the performance of your fine-tuned model. Common metrics include accuracy, precision, recall, F1 score, perplexity, or any domain-specific metrics relevant to your task. These metrics provide objective measures of the model’s performance and can help identify areas that require improvement.

-

Human Evaluation

Incorporate human evaluation to assess the quality and coherence of the generated text. Develop a standardized evaluation protocol and involve human evaluators who are knowledgeable in your domain. Provide them with specific criteria to evaluate the responses generated by the model, such as relevance, fluency, and coherence. This qualitative assessment adds valuable insights into the subjective aspects of the model’s performance.

-

Domain-specific Constraints

Consider any specific constraints or requirements in your domain and evaluate how well the fine-tuned model adheres to those constraints. For example, in legal or medical domains, compliance with regulations and ethical guidelines may be essential. Ensure the fine-tuned model generates outputs that align with these constraints and do not produce biased or sensitive content.

-

Comparison with Baselines

Establish baselines by comparing the performance of your fine-tuned model with existing models or methods used in your domain. This comparison provides a benchmark and helps assess the improvement achieved through fine-tuning. Use appropriate statistical tests to determine if the performance difference is statistically significant.

-

Real-world Testing

Conduct real-world testing by integrating the fine-tuned model into relevant applications or workflows. Gather feedback from users or domain experts who interact with the model in practical scenarios. This feedback can provide valuable insights into the model’s effectiveness, usability, and performance in real-world settings.

-

Error Analysis

Perform a thorough error analysis to understand the weaknesses and limitations of the fine-tuned model. Identify common errors or failure cases and examine the patterns or causes behind them. This analysis helps guide further iterations of fine-tuning and improvement efforts.

-

Continuous Evaluation

Fine-tuned models should be continuously evaluated and monitored even after deployment. Collect user feedback, track performance metrics, and stay updated with the evolving needs and challenges of your domain. This allows you to make iterative improvements and ensure that the model remains effective over time.

Conclusion

Fine-tuning GPT models for specific niche domains offers a remarkable opportunity to create powerful language models finely attuned to the intricacies of specialized industries or areas of knowledge. The process involves training the GPT model on domain-specific data, allowing it to learn the nuances and context required for generating accurate and relevant content.

To embark on this fine-tuning journey, start by identifying and selecting relevant data sources specific to your domain. This could include domain-specific datasets, research papers, online forums, or even subject matter experts who can provide valuable insights.

Once you have gathered the necessary data, the next step is to configure the model and define the fine-tuning objectives. Choosing the appropriate GPT model variant is essential, considering factors such as model size, computational resources, and the complexity of the task at hand. Configuration involves setting up the model architecture and hyperparameters to suit the specific domain. Training techniques, such as gradual unfreezing or learning rate scheduling, can further enhance the model’s performance.

SoluLab prides itself on having an exceptional team of experienced and skilled professionals dedicated to developing customized ChatGPT clones tailored to meet the unique business requirements of its clients. As a leading ChatGPT applications development company, SoluLab continuously enhances its expertise and innovates its services with the latest technologies, aiming to empower businesses with advanced AI chatbot solutions. With a focus on seamless integration, development, fine-tuning, and maintenance of custom AI solutions, SoluLab’s talented team leverages its expertise in ML, NLP, and Deep Learning to create captivating OpenAI experiences. Clients can experience the difference by hiring SoluLab’s top ChatGPT developers for their AI development needs.

In addition to ChatGPT, SoluLab’s Generative AI development services offer powerful models and solutions inspired by ChatGPT, DALL-E, and Midjurney. Their team of generative AI experts, well-versed in a wide range of AI technologies, enables businesses to leverage these tools and produce custom, high-quality content that sets them apart from competitors. If you’re seeking state-of-the-art generative AI development services tailored specifically to your company’s requirements, SoluLab is the right place to explore. Contact SoluLab today and discover how their team can help boost your company’s technical capabilities through advanced AI solutions.

FAQs

1. What is fine-tuning, and why is it important for GPT models in niche domains?

Fine-tuning is the process of customizing pre-trained language models, such as GPT models, to specific domains or industries. It allows models to learn domain-specific knowledge and generate contextually appropriate text. Fine-tuning is crucial in niche domains as it enhances the model’s understanding, relevance, and accuracy within a specific industry, making it a valuable tool for professionals, researchers, and enthusiasts.

2. How do I select relevant data sources for fine-tuning my GPT model?

To select relevant data sources, start by defining your domain and identifying specific topics or areas of knowledge within it. Explore existing domain-specific datasets, publications, online forums, and communities related to your niche.

3. How do I choose the appropriate GPT model variant for fine-tuning?

Choosing the right GPT model variant depends on factors like computational resources, fine-tuning objectives, domain expertise, vocabulary requirements, latency considerations, data availability, and task complexity. Assess the model’s size, its compatibility with available resources, its fine-tuning performance, and its domain-specific capabilities.

4. What are the key steps in the fine-tuning process for GPT models?

Collect relevant data, preprocess it by cleaning and formatting, configure the model architecture, select appropriate hyperparameters, train the model on the domain-specific dataset, evaluate its performance using quantitative metrics and human evaluation, and refine the model based on the findings.