In today’s digital age, data security is a business necessity. With the increasing prevalence of cyber threats and stringent regulatory requirements, organizations must employ robust strategies to protect sensitive information. Among the available data protection methods, encryption and tokenization stand out as two key techniques. Despite their shared goal of safeguarding data, they operate in distinct ways and serve different purposes.

Understanding the differences between data tokenization and encryption is crucial for implementing data security measures effectively. In this blog, we delve into the concepts of tokenization and encryption, explaining how they work, their advantages, and best practices. By grasping the fundamental differences between these methods, businesses can make informed decisions to fortify their data defenses and navigate compliance requirements confidently.

What is Encryption?

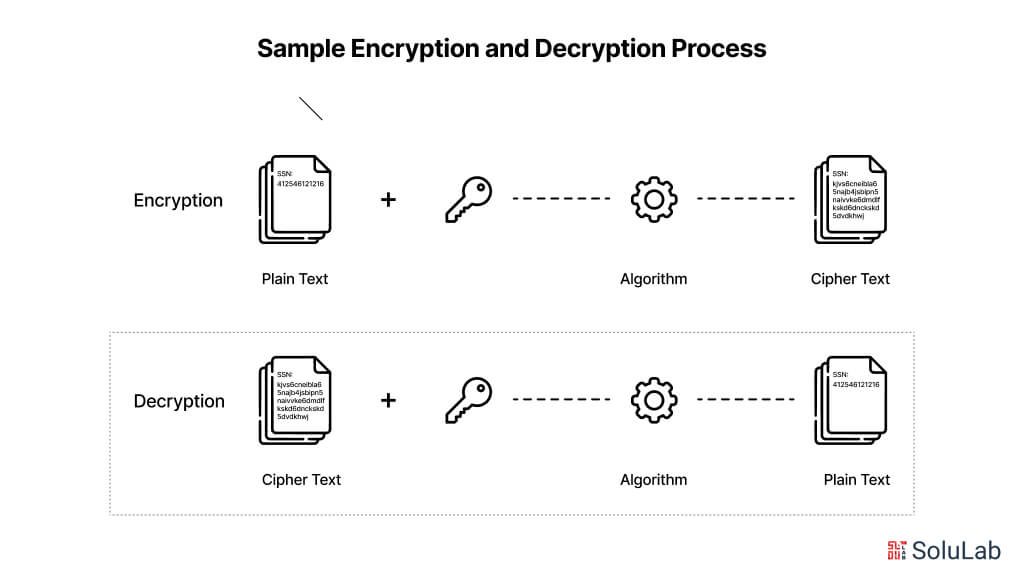

Encryption is a method of securing data by converting it into an encoded form that can only be deciphered by authorized parties. In essence, encryption scrambles plain, readable data (known as plaintext) into an unreadable format (known as ciphertext) using an algorithm and a cryptographic key. This ensures that even if someone gains unauthorized access to the encrypted data, they cannot read it without the necessary decryption key.

The encryption process typically involves applying a mathematical algorithm to the plaintext along with a cryptographic key. The algorithm manipulates the plaintext based on the key’s instructions, transforming it into ciphertext. The resulting ciphertext appears as a seemingly random sequence of characters, making it unintelligible to anyone who does not possess the decryption key.

Encryption plays a vital role in data security, offering confidentiality and privacy for sensitive information during transmission and storage. It is utilized across various sectors, including finance, healthcare, government, and telecommunications, to protect data from unauthorized access, interception, and tampering.

Types of Encryption

There are two primary types of encryption:

1. Symmetric Encryption: Symmetric encryption uses the same key to encrypt and decrypt data, so the parties involved in the communication must exchange this key securely. Examples of symmetric algorithms include the Advanced Encryption Standard (AES), the Data Encryption Standard (DES), and Triple DES (3DES). AES is the current standard, while DES and 3DES are legacy algorithms that are no longer recommended for new systems.

2. Asymmetric Encryption: Also known as public-key encryption, asymmetric encryption employs two keys: a public key for encryption and a private key for decryption. The private key is kept secret, while the public key can be shared freely. RSA (Rivest-Shamir-Adleman) and ECC (Elliptic Curve Cryptography) are two examples of asymmetric encryption techniques.
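To make the public/private key split concrete, here is a minimal sketch of textbook RSA with deliberately tiny numbers. The primes, the exponent, and the function names are assumptions chosen for illustration. This is not secure and is not how production systems use RSA, which rely on vetted libraries, much larger keys, and padding schemes.

```python
from typing import Tuple

# Textbook RSA with tiny primes: illustration only, NOT secure.
p, q = 61, 53                # two small primes (assumed for the demo)
n = p * q                    # modulus, part of both keys
phi = (p - 1) * (q - 1)      # Euler's totient of n
e = 17                       # public exponent, coprime with phi
d = pow(e, -1, phi)          # private exponent: modular inverse of e (Python 3.8+)

public_key = (e, n)          # can be shared freely
private_key = (d, n)         # must stay secret


def rsa_encrypt(message: int, key: Tuple[int, int]) -> int:
    """Encrypt an integer smaller than n with the public key."""
    exponent, modulus = key
    return pow(message, exponent, modulus)


def rsa_decrypt(ciphertext: int, key: Tuple[int, int]) -> int:
    """Decrypt with the private key, reversing rsa_encrypt."""
    exponent, modulus = key
    return pow(ciphertext, exponent, modulus)


message = 65
ciphertext = rsa_encrypt(message, public_key)
recovered = rsa_decrypt(ciphertext, private_key)
```

Anyone holding `public_key` can produce `ciphertext`, but only the holder of `private_key` can turn it back into `message`.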

How Does Encryption Work?

Encryption works by transforming plain, readable data (plaintext) into an unreadable format (ciphertext) using an algorithm and a cryptographic key. This ensures that private data stays confidential even if it is intercepted by unauthorized parties. Let’s delve into the specifics of how encryption operates:

Encryption Process

- Algorithm Selection: Encryption begins by selecting a suitable encryption algorithm, which dictates how the plaintext will be transformed into ciphertext. Common encryption algorithms include AES (Advanced Encryption Standard), RSA (Rivest-Shamir-Adleman), and ECC (Elliptic Curve Cryptography).

- Key Generation: A cryptographic key is generated or selected to be used in conjunction with the encryption algorithm. The key serves as the input to the algorithm, influencing the transformation process. In symmetric encryption, the same key is used for both encryption and decryption, while in asymmetric encryption, a pair of keys (public and private) is employed.

- Encryption: The encryption algorithm takes the plaintext and the encryption key as inputs and performs a series of mathematical operations on the plaintext, scrambling it into ciphertext. The resulting ciphertext appears as a random sequence of characters, obscuring the original data.

- Output: Once encrypted, the ciphertext is generated and can be transmitted or stored securely. Without the corresponding decryption key, the ciphertext is indecipherable and unintelligible to unauthorized parties.

Decryption Process

- Key Retrieval: To decrypt the ciphertext and recover the original plaintext, the recipient must possess the appropriate decryption key. In symmetric encryption, this key is the same as the one used for encryption, while in asymmetric encryption, the private key is used for decryption.

- Decryption: The recipient applies the decryption key and the decryption algorithm to the ciphertext, reversing the encryption process. The algorithm reverses the transformations applied during encryption, converting the ciphertext back into plaintext.

- Output: The decrypted plaintext is obtained, revealing the original, readable data. This plaintext can then be processed, displayed, or utilized as intended by the recipient.
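The encryption and decryption steps above can be sketched as a round trip with a single shared key. The example below is a toy symmetric stream cipher built only from Python's standard library (an HMAC-SHA256 keystream XORed with the data). It is an assumption-laden teaching aid rather than a recommended design: real systems should use a vetted algorithm such as AES from a maintained cryptography library. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from the key and nonce (HMAC-SHA256 in counter mode)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        out.extend(block)
        counter += 1
    return bytes(out[:length])


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Scramble plaintext into ciphertext; the random nonce is prepended to the output."""
    nonce = secrets.token_bytes(16)  # fresh for every message
    stream = keystream(key, nonce, len(plaintext))
    body = bytes(p ^ s for p, s in zip(plaintext, stream))
    return nonce + body


def decrypt(key: bytes, ciphertext: bytes) -> bytes:
    """Reverse encrypt(): regenerate the same keystream and XOR it away."""
    nonce, body = ciphertext[:16], ciphertext[16:]
    stream = keystream(key, nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


key = secrets.token_bytes(32)                          # key generation
secret_message = b"4111 1111 1111 1111"                # sample plaintext
ciphertext = encrypt(key, secret_message)              # encryption
plaintext = decrypt(key, ciphertext)                   # decryption with the right key
garbage = decrypt(secrets.token_bytes(32), ciphertext) # decryption with a wrong key
```

With the correct key the original bytes come back. With any other key the output is unrelated noise, which is the property the decryption steps rely on.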

Encryption operates on the principle of confidentiality, ensuring that only authorized parties with access to the decryption key can retrieve and understand the original data. By using robust encryption techniques and secure key management practices, organizations can protect sensitive information from unauthorized access and interception. When authenticated encryption or a message authentication code is used, they can also detect tampering, thereby safeguarding both data privacy and integrity.

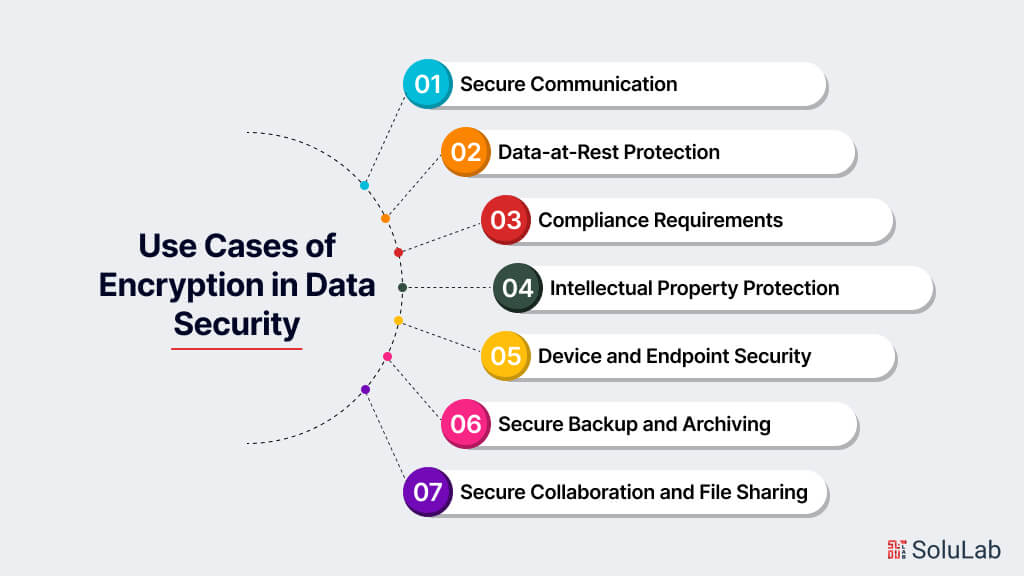

Use Cases of Encryption in Data Security

Encryption plays a crucial role in data security across various industries and applications. Here are some common use cases of encryption:

1. Secure Communication

Encryption ensures the confidentiality of sensitive information transmitted over networks, such as emails, instant messages, and online transactions. Secure protocols like SSL/TLS utilize encryption to establish secure connections between clients and servers, preventing eavesdropping and data interception. Virtual Private Networks (VPNs) use encryption to create secure tunnels over public networks, enabling secure remote access and communication.

2. Data-at-Rest Protection

Encryption safeguards data stored on devices, databases, and cloud storage against unauthorized access, theft, or breaches. Full-disk encryption encrypts entire storage devices, such as hard drives or solid-state drives (SSDs), ensuring that all data on the device remains protected. File-level encryption selectively encrypts individual files or directories, allowing granular control over data protection.

3. Compliance Requirements

Encryption is required or strongly encouraged by various compliance standards and regulations, such as the Health Insurance Portability and Accountability Act (HIPAA), the Payment Card Industry Data Security Standard (PCI DSS), and the General Data Protection Regulation (GDPR). Compliance with these regulations often requires the implementation of encryption measures to protect sensitive information, including protected health information (PHI), financial data, and personally identifiable information (PII).

4. Intellectual Property Protection

Encryption safeguards intellectual property and trade secrets stored in digital formats from unauthorized access, disclosure, or theft. Industries such as technology, pharmaceuticals, and media rely on encryption to protect proprietary information, research data, and valuable assets.

5. Device and Endpoint Security

Encryption secures data stored on mobile devices, laptops, and other endpoints, preventing unauthorized access in case of loss or theft. Mobile device management (MDM) solutions often include encryption features to protect corporate data on employee-owned devices.

6. Secure Backup and Archiving

Encryption ensures the confidentiality and integrity of data backups and archives stored locally or in the cloud. Backup solutions often incorporate encryption to protect sensitive data during transmission and storage, reducing the risk of data breaches or unauthorized access.

7. Secure Collaboration and File Sharing

Encryption enables secure collaboration and file sharing among individuals or organizations, ensuring that shared data remains protected from unauthorized access. Encrypted file-sharing services and collaboration platforms encrypt data both in transit and at rest, maintaining confidentiality and privacy.
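As a small illustration of the secure communication use case, the sketch below configures a TLS client with Python's standard `ssl` module. The function names and the choice of TLS 1.2 as the minimum version are assumptions for this example, and the connection helper is defined but not called because it needs network access.

```python
import socket
import ssl
from typing import Optional


def make_tls_context() -> ssl.SSLContext:
    """Build a client context that verifies certificates and hostnames."""
    context = ssl.create_default_context()
    # Assumed policy for this sketch: refuse anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def fetch_peer_tls_version(host: str, port: int = 443) -> Optional[str]:
    """Open an encrypted connection and report the negotiated TLS version."""
    context = make_tls_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            return tls_sock.version()


context = make_tls_context()
```

Everything sent through the wrapped socket is encrypted in transit, and the default context rejects servers whose certificate does not match the requested hostname.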

What is Tokenization?

Tokenization is a data security technique that substitutes sensitive data with non-sensitive placeholder values, called tokens. Unlike encryption, which transforms data into an unreadable format, tokenization replaces the original data with a token, which is typically a randomly generated string of characters. The token has no intrinsic meaning or value and cannot be mathematically reversed to reveal the original data. Tokenization is widely used in payment processing, healthcare, and other industries to protect sensitive information while retaining usability.

Types of Tokenization

Four common types of tokenization are:

- Credit Card Tokenization: In credit card tokenization, sensitive credit card numbers are replaced with tokenized values, allowing merchants and payment processors to store and process transactions securely without exposing cardholder data. Tokens are generated and managed by a tokenization service or platform, which securely maps tokens to their corresponding credit card numbers in a token vault. Tokenized credit card data can be used for recurring payments, fraud detection, and other transactional purposes without requiring access to the original credit card numbers.

- Data Tokenization: Data tokenization extends beyond credit card numbers to protect other types of sensitive data, such as social security numbers, bank account numbers, and personally identifiable information (PII). Like credit card tokenization, data tokenization replaces sensitive data with tokens, ensuring that the original data remains protected from unauthorized access or exposure. Data tokenization solutions may offer customization options to tokenize specific data elements based on organization-specific requirements and compliance standards.

- Email Tokenization: Email tokenization replaces email addresses with unique tokens, enabling organizations to communicate with customers and users without exposing their email addresses. Email tokens are generated and managed securely to ensure that each token corresponds to a unique email address. Email tokenization helps protect user privacy and mitigate the risk of email-related spam, phishing, and data breaches.

- Tokenization for Authentication: Tokenization is also used for authentication purposes, where tokens serve as temporary credentials or access tokens. Access tokens are used to authenticate users and grant access to secured resources or services, such as APIs, web applications, and cloud services. These tokens are typically time-bound and limited in scope, reducing the risk of unauthorized access or misuse.
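The last item, time-bound and scoped access tokens, can be sketched with a signed token that carries its own expiry. This is a simplified illustration in the spirit of signed tokens. The field names (`sub`, `scope`, `exp`), the five-minute lifetime, and the function names are assumptions, and a production system would normally use an established standard and library instead.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time

SIGNING_KEY = secrets.token_bytes(32)  # hypothetical server-side secret


def issue_token(user_id: str, scope: str, ttl_seconds: int = 300) -> str:
    """Create a token that names the user, the allowed scope, and an expiry time."""
    claims = {"sub": user_id, "scope": scope, "exp": int(time.time()) + ttl_seconds}
    payload = json.dumps(claims).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    return base64.urlsafe_b64encode(payload).decode() + "." + base64.urlsafe_b64encode(signature).decode()


def verify_token(token: str, required_scope: str):
    """Return the claims if the token is authentic, unexpired, and in scope; otherwise None."""
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:
        return None  # malformed token
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None  # forged or altered token
    claims = json.loads(payload)
    if claims["exp"] < time.time() or claims["scope"] != required_scope:
        return None  # expired or used outside its scope
    return claims


token = issue_token("alice", "read:reports")
claims = verify_token(token, "read:reports")
```

Because the expiry and scope are covered by the signature, a client cannot extend the lifetime or widen the scope without invalidating the token.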

How Does Tokenization Work?

Tokenization is a data security strategy in which sensitive data is replaced with non-sensitive substitute values called tokens. Here’s how tokenization works:

1. Data Collection

Initially, sensitive data, such as credit card numbers, social security numbers, or other personally identifiable information (PII), is collected from users or systems.

2. Tokenization Process

The sensitive data undergoes a tokenization process where it is replaced with randomly generated tokens. Tokenization can be performed using tokenization software or services, which manage the mapping between original data and tokens securely.

3. Token Generation

Tokens are typically alphanumeric strings generated using cryptographic methods. These tokens have no intrinsic meaning or value and are randomly generated for each instance of sensitive data.

4. Token Mapping

The tokenized data and its corresponding original data are securely stored in a token vault or database. This mapping allows authorized users to retrieve the original data associated with a token when necessary.

5. Tokenized Data Usage

Tokenized data is used in place of the original sensitive data for various purposes, such as payment processing, identity verification, or data storage. Tokens can be transmitted and stored without revealing the underlying sensitive information, minimizing the risk of data exposure or theft.

6. Token Retrieval

Authorized users can retrieve the original data associated with a token from the token vault when needed for legitimate purposes, such as transaction processing or customer verification. Access to the token vault is tightly controlled to ensure that only authorized personnel can access sensitive data.
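The six steps above can be sketched as a tiny in-memory token vault. The class and method names are hypothetical, and the dictionary storage, the `tok_` prefix, the boolean authorization flag, and the choice to reuse one token per distinct value are all assumptions made for illustration. A real vault is a hardened, access-controlled service.

```python
import secrets
from typing import Dict


class TokenVault:
    """In-memory stand-in for a token vault."""

    def __init__(self) -> None:
        self._token_to_value: Dict[str, str] = {}
        self._value_to_token: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        """Replace a sensitive value with a random token and record the mapping."""
        existing = self._value_to_token.get(value)
        if existing is not None:
            return existing  # assumption: one stable token per distinct value
        token = "tok_" + secrets.token_urlsafe(16)
        while token in self._token_to_value:  # regenerate on the rare collision
            token = "tok_" + secrets.token_urlsafe(16)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str, caller_is_authorized: bool) -> str:
        """Look up the original value; only authorized callers may do this."""
        if not caller_is_authorized:
            raise PermissionError("caller may not read original data")
        return self._token_to_value[token]


vault = TokenVault()
token = vault.tokenize("123-45-6789")      # steps 1-4: collect, tokenize, map
original = vault.detokenize(token, caller_is_authorized=True)  # step 6: retrieval
```

The token is pure randomness, so nothing about the original value can be computed from it. The only way back is the lookup inside the vault.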

Use Cases of Tokenization in Data Security

Tokenization finds extensive use in securing sensitive data by substituting it with non-sensitive tokens. Its applications span across industries such as finance, healthcare, and e-commerce, ensuring data privacy and compliance with regulatory standards.

1. Payment Processing

Tokenization is widely used in the payment industry to secure credit card transactions. Credit card numbers are tokenized to prevent exposure of cardholder data during payment authorization, reducing the risk of fraud and data breaches.

2. Healthcare Data Protection

In the healthcare sector, tokenization is employed to protect electronic health records (EHRs) and other sensitive patient information. Patient identifiers, such as social security numbers and medical record numbers, are tokenized to comply with healthcare regulations (e.g., HIPAA) and safeguard patient privacy.

3. Data Storage and Cloud Services

Tokenization helps secure data stored in databases, cloud storage, and other repositories. Sensitive data, such as personally identifiable information (PII) and financial records, can be tokenized to mitigate the risk of unauthorized access or data breaches.

4. Identity and Access Management

Tokenization is used in identity and access management (IAM) systems to authenticate users and manage access to secured resources. Access tokens are generated to grant temporary access to applications, APIs, and online services, reducing the reliance on traditional passwords and enhancing security.

5. Retail and E-commerce

Tokenization is employed in retail and e-commerce environments to secure customer payment information and streamline checkout processes. Tokenized payment data allows merchants to process transactions securely without storing sensitive credit card information, improving customer trust and compliance with payment card industry standards (e.g., PCI DSS).
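For payment data specifically, tokens are often generated to look like the card number they replace so that existing systems keep working. The sketch below is a hypothetical illustration of that idea: keeping the last four digits and randomizing the rest is an assumption of this example, not a described standard, and the resulting token would still need to be stored in a vault alongside the real number.

```python
import secrets


def card_token(pan: str) -> str:
    """Return an all-digit token of the same length that keeps only the last four digits."""
    digits = [ch for ch in pan if ch.isdigit()]
    if len(digits) < 5:
        raise ValueError("expected a card number with more than four digits")
    last_four = "".join(digits[-4:])
    # Every other position is replaced with an unrelated random digit.
    random_part = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
    return random_part + last_four


token = card_token("4111 1111 1111 1111")
```

A receipt or support screen can show the familiar last four digits while the merchant never stores the real card number.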

Key Differences Between Tokenization and Encryption

Before diving into the detailed comparisons, it’s essential to understand the key distinctions between tokenization and encryption. While both techniques aim to safeguard sensitive data, they differ in reversibility, performance, scalability, and regulatory compliance implications. Let’s explore these differences in detail:

A. Purpose and Functionality

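Tokenization and encryption serve distinct purposes in data security. Tokenization substitutes sensitive data with non-sensitive tokens that have no mathematical relationship to the original values. Tokens can be generated to keep the original format (for example, a 16-digit token for a 16-digit card number), so existing systems continue to work. Encryption, on the other hand, transforms data into unreadable ciphertext using an algorithm and a key. Standard ciphertext generally does not preserve the length or character set of the original, but a key holder can always decrypt it. In short, tokenization focuses on data substitution, while encryption focuses on data transformation.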

B. Reversibility

One fundamental difference lies in reversibility. A token cannot be mathematically reversed to obtain the original data: because tokens are randomly generated, even knowledge of the tokenization method and the token values does not reveal the data they stand for. The original value can be recovered only by looking it up in the token vault, which is why access to the vault is tightly controlled. Conversely, encryption is reversible by design, allowing encrypted data to be decrypted back into its original form using the decryption key. This reversible nature of encryption facilitates secure transmission and storage of data while ensuring that authorized parties can access and interpret the information.

C. Performance and Scalability

Performance and scalability considerations also distinguish tokenization from encryption. Encryption can be computationally intensive, especially when dealing with large volumes of data, which may impact system performance. Tokenization substitutes values rather than performing cryptographic transformations on them, so it typically requires fewer computational resources per value, although each tokenization or retrieval involves a lookup in the token vault. Tokenization is often regarded as the more scalable option for environments with high data volumes, provided the vault is sized to handle the generation, storage, and retrieval of large numbers of tokens. Encryption systems may face scalability challenges of their own, particularly around key management.

D. Regulatory Compliance Implications

Regulatory compliance requirements play a crucial role in determining the choice between tokenization and encryption. While regulations such as GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act) name encryption as an expected or recommended safeguard for sensitive data, tokenization and hashing may offer alternative methods for achieving compliance. Understanding the regulatory landscape and the specific requirements for each technique is essential for organizations to ensure compliance with data protection standards. Depending on the regulatory environment and industry-specific regulations, organizations may choose to implement tokenization, encryption, or a combination of both to meet compliance obligations and protect sensitive information effectively.

Pros and Cons of Encryption

Pros:

- Confidentiality: Encryption ensures that sensitive data remains confidential and unreadable to unauthorized parties, providing a high level of security.

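- Data Integrity: When combined with authentication, for example an authenticated encryption mode such as AES-GCM or a message authentication code (MAC), encrypted data is protected from undetected tampering or unauthorized modification throughout transmission and storage. Encryption on its own provides confidentiality rather than integrity.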

- Regulatory Compliance: Encryption helps organizations comply with data protection regulations such as GDPR, HIPAA, and PCI DSS by safeguarding sensitive information from unauthorized access.

- Flexibility: Encryption can be applied to various types of data and communication channels, offering a versatile solution for securing information across different platforms and environments.
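Tamper detection in practice usually comes from a message authentication code (MAC) or an authenticated encryption mode applied alongside the cipher. The sketch below shows the MAC half with Python's standard `hmac` module. The function names are hypothetical, and the random bytes merely stand in for ciphertext produced elsewhere.

```python
import hashlib
import hmac
import secrets

mac_key = secrets.token_bytes(32)  # assumed to be separate from the encryption key


def make_tag(data: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag that is stored or sent alongside the data."""
    return hmac.new(mac_key, data, hashlib.sha256).digest()


def is_untampered(data: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(make_tag(data), received_tag)


ciphertext = secrets.token_bytes(32)   # stand-in for real encrypted data
tag = make_tag(ciphertext)

# Flip a single bit to simulate modification in transit or at rest.
tampered = bytes([ciphertext[0] ^ 0x01]) + ciphertext[1:]
```

The receiver checks the tag before decrypting, so a modified ciphertext is rejected instead of being silently turned into corrupted plaintext.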

Cons:

- Performance Overhead: Encryption can introduce computational overhead and latency, particularly in high-volume environments, impacting system performance and responsiveness.

- Key Management Complexity: Effective encryption requires robust key management practices to securely generate, distribute, and store encryption keys, which can be complex and resource-intensive.

- Data Recovery Challenges: If encryption keys are lost or compromised, data recovery becomes challenging or impossible, potentially leading to permanent data loss.

- Potential Vulnerabilities: Despite its benefits, encryption may be vulnerable to cryptographic attacks or implementation flaws, requiring ongoing monitoring and updates to maintain security.

Pros and Cons of Tokenization

Pros:

- Data Security: Tokenization protects sensitive data by replacing it with non-sensitive tokens, reducing the risk of data breaches and unauthorized access.

- Non-Reversibility: Tokens cannot be mathematically reversed to obtain the original data. The original can be recovered only through the token vault, which enhances data security and privacy.

- Compliance Simplification: Tokenization simplifies regulatory compliance efforts by minimizing the scope of sensitive data subject to compliance requirements, reducing the risk of non-compliance penalties.

- Scalability: Tokenization systems can easily handle large volumes of data without significant performance degradation, making them suitable for scalable applications and environments.

Cons:

- Usability Challenges: Tokenization may introduce usability challenges, especially if the original data needs to be retrieved or processed in its original form, requiring additional token-to-data mapping mechanisms.

- Dependency on Tokenization Systems: Organizations become reliant on tokenization systems and providers, making it essential to ensure the availability, reliability, and security of these systems.

- Potential for Tokenization Errors: Incorrect tokenization mappings or errors in token generation can lead to data integrity issues and misinterpretation of tokenized data.

- Implementation Complexity: Implementing tokenization systems and integrating them with existing infrastructure may require significant effort and resources, particularly in complex environments with diverse data sources and applications.

Conclusion

In conclusion, understanding the nuanced differences between tokenization and encryption is essential for organizations seeking to fortify their data security measures. While both techniques offer robust solutions for protecting sensitive information, they differ in purpose, reversibility, performance, and regulatory implications. Tokenization provides a pragmatic approach by substituting sensitive data with non-sensitive tokens, ensuring data security without compromising usability. Encryption, on the other hand, offers a reversible method of transforming data into an unreadable format, providing confidentiality to anyone who does not hold the decryption key. By recognizing the strengths and limitations of each approach, organizations can make informed decisions tailored to their specific security requirements, compliance obligations, and operational needs.

At SoluLab, we empower businesses to enhance their data security posture through tailored tokenization solutions. As a leading tokenization development company, we specialize in designing and implementing robust tokenization systems that safeguard sensitive information across various industries and use cases. Our team of experts uses advanced technologies and industry best practices to deliver scalable, reliable, and compliant tokenization solutions that meet the unique security needs of our clients. Whether you’re looking to protect payment data, healthcare records, or other sensitive information, SoluLab is your trusted partner in achieving data security excellence. Contact us today to learn how our tokenization services can elevate your data protection strategy and drive business success.

FAQs

1. What is the main difference between tokenization and encryption?

The primary difference lies in their approach to data security. Tokenization replaces sensitive data with non-sensitive tokens that map back to the original only through a secured token vault, preserving usability while protecting the data. Encryption transforms data into unreadable ciphertext that can be restored only with the decryption key.

2. Which method, tokenization or encryption, is more secure?

Both tokenization and encryption offer robust security measures. Tokenization provides non-reversible protection by substituting data with tokens, making it highly secure. Encryption, while reversible, offers strong confidentiality through complex algorithms and keys. The choice between the two depends on the specific security requirements and use cases of the organization.

3. Are tokenization and encryption mutually exclusive?

No, tokenization and encryption can be used together to enhance data security. For instance, sensitive data can be encrypted first to ensure confidentiality and then tokenized for additional protection. This hybrid approach combines the strengths of both techniques to create a multi-layered security strategy.

4. How does tokenization impact compliance with data protection regulations?

Tokenization simplifies compliance efforts by reducing the scope of sensitive data subject to regulatory requirements. By replacing sensitive information with tokens, organizations can minimize the risk of non-compliance penalties while still maintaining data security and privacy.

5. What are the key considerations for choosing between tokenization and encryption?

When deciding between tokenization and encryption, organizations should consider factors such as data sensitivity, reversibility requirements, performance implications, and regulatory compliance obligations. Understanding the specific security needs and operational requirements will help organizations make informed decisions tailored to their unique circumstances.