The arrival of the digital age has fundamentally changed how we interact with and use information. As this connected world continues to evolve, a new shift is emerging that could reshape the foundations of the internet ecosystem.

AI in Web3 is a blend of artificial intelligence and Web3’s decentralized philosophy. This combination is more than a passing technology fad: it pairs the capabilities of artificial intelligence with the open, trust-based ideals of Web3.

It reinvents the relationship between data, trust, and innovation, marking the next phase of digital progress. Let’s explore the specifics of this synergy in this blog and understand the role of artificial intelligence in Web3 development!

What is Web3?

Web3, or the decentralized and open version of the World Wide Web, is revolutionizing our online experiences. In contrast to the conventional Web2, which is dominated by middlemen and centralized platforms, Web3 seeks to empower people, advance privacy, and facilitate peer-to-peer commerce. It is based on blockchain technology, which offers security, immutability, and transparency.

Web3 includes new technologies such as cryptocurrency, smart contracts, and decentralized applications (dapps). Decentralization, user ownership of data, and the removal of intermediaries are among its fundamental tenets. Web3 facilitates direct user engagement with decentralized networks and allows users to share in the value created by these networks, creating a more democratic and inclusive digital environment.

What is AI?

Artificial Intelligence, or AI for short, is the branch of computer science that focuses on building machines that can perform tasks that normally require human intelligence. AI systems are designed to examine data, draw conclusions from it, and act or make decisions in response.

These machines are capable of simulating human cognitive functions such as language comprehension, image recognition, problem-solving, and creativity. AI comprises several subfields, such as robotics, computer vision, natural language processing, and machine learning. Its uses span a wide range of sectors, from healthcare and banking to transportation and entertainment, and improve human productivity.

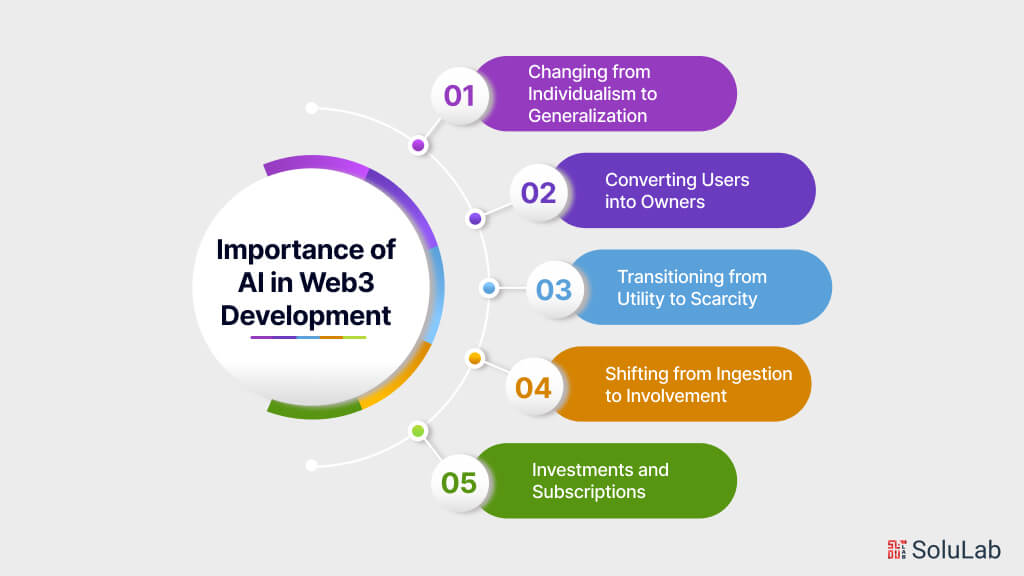

Why is AI Important in Web3 Development?

Future Web3 and AI platforms are expected to use artificial intelligence to provide decentralized intelligence, giving users more efficiency, security, and privacy. The integration of AI in Web3 is changing digital interactions, transactions, and innovation through the use of smart contracts and decentralized apps.

1. Changing from Generalization to Individualization

For the last ten years, big tech has used centralized AI models to gather insights and extract value from consumers. We are developing capabilities in the fields of AI and Web3 to benefit everyone, not just a wealthy select few. In this model, every personal AI is trained on its creator’s own experiences, interests, and expertise.

2. Converting Users into Owners

A small number of private firms control and profit from most content, which leaves content creators frequently underappreciated and unnoticed. With Web3, creators are in full control of their digital assets, AI models, and data. Some businesses are contributing to the development of blockchain platforms that give creators complete control over and access to their data, enabling them to share or reuse it as they see fit.

3. Transitioning from Scarcity to Utility

Tokens alone cannot provide ownership or incentives that remain viable over the long term. They must have real value and give their holders something concrete. Using your creativity and intelligence, your personal AI produces and unlocks additional value from the material you create. With access and involvement made possible by social tokens, this personal AI creates opportunities for partnerships and encourages value creation inside your community.

4. Shifting from Ingestion to Involvement

Present-day platforms are built for mass consumption, creating a one-way relationship between content producers and viewers. With personal AIs and new ways to exchange value via social tokens, Web3 creators and their communities can have their own platforms. We are building a new collaborative network architecture that changes the relationship between value production and consumption by moving power away from platforms and toward individuals.

5. Investments and Subscriptions

The goal of content creators has always been to gradually grow a sizable subscriber base in the hopes of making money from it. However, only a small percentage of creators make a good living, which hurts both creators and subscribers. Thanks to a new creator economy driven by Web3 and future AI platforms, communities can now invest in both the creators they love and the personal AIs that improve their lives. Creators now have the chance to build profitable enterprises centered on their ideas, which benefits both them and the communities they serve.

How Can Web3 Make Use of AI?

The growing popularity of Artificial Intelligence (AI) has the potential to significantly affect several sectors, including the growth of Web3. AI and Web3 will play a significant part in creating the future decentralized web by improving user experiences and providing creative solutions.

- AI has a lot to offer Web3 when it comes to data analysis and decision-making. AI algorithms can help process and evaluate the large amounts of data created on the blockchain, giving users information that supports well-informed decisions.

- By using AI to drive predictive analytics, users can identify trends, patterns, and possible risks. This allows for more efficient resource allocation and investment strategies in decentralized Web3 ecosystems.

- AI can support Web3 network security. Machine learning algorithms can recognize and help stop fraudulent activity, find weaknesses, and help strengthen existing security measures, all of which contributes to the overall security and reliability of decentralized systems.
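As a rough illustration of the predictive analytics mentioned above, the following Python sketch fits a straight-line trend to a series of daily transaction counts and extrapolates one day ahead. The function names and the numbers are hypothetical, and a least-squares line is only a stand-in for whatever forecasting model a real project would choose.

```python
from typing import Sequence, Tuple


def fit_linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Least-squares line through the points (0, v0), (1, v1), ...

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    return slope, mean_y - slope * mean_x


def forecast(values: Sequence[float], steps_ahead: int = 1) -> float:
    """Extrapolate the fitted trend `steps_ahead` steps past the last point."""
    slope, intercept = fit_linear_trend(values)
    return intercept + slope * (len(values) - 1 + steps_ahead)


# Made-up daily transaction counts for a single contract.
daily_tx = [100, 110, 120, 130, 140]
print(forecast(daily_tx))  # 150.0
```

In practice the input series would come from an indexer or node query, and a simple trend line like this is mainly useful as a baseline to compare richer models against.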

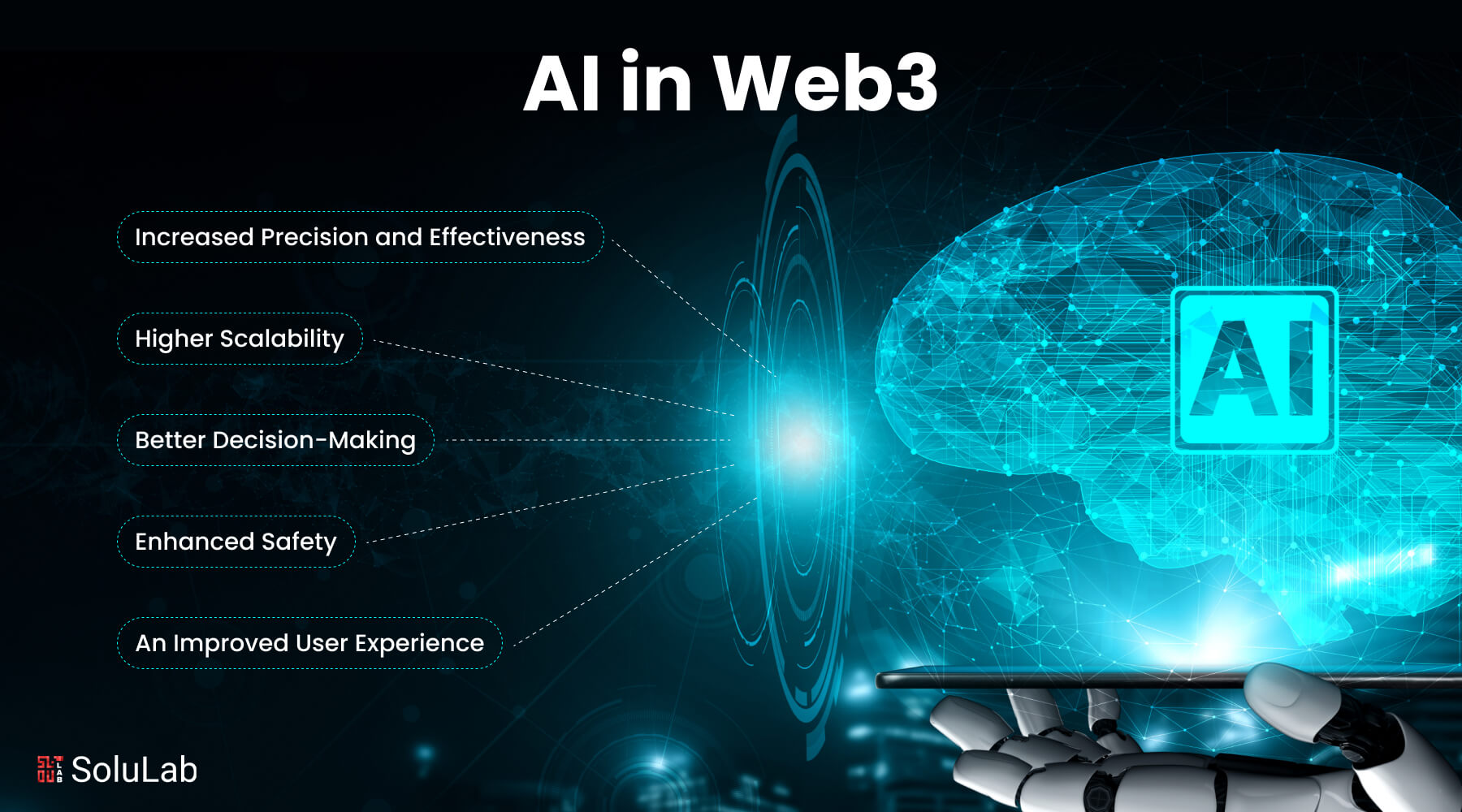

The Benefits of Using AI in Web3

Integrating artificial intelligence (AI) into Web3 applications has several benefits. Among these benefits are the following:

1. Increased Precision and Effectiveness

In Web3 applications, AI automation replaces manual procedures, increasing productivity and accuracy. The resulting reduction in errors improves the application’s overall quality.

2. An Improved User Experience

Artificial intelligence makes Web3 apps more useful by providing users with tailored and relevant results. This makes the application friendlier to use and improves the overall user experience.

3. Higher Scalability

Because AI can automate processes, AI-enabled applications can scale more easily than ones that depend on manual work. This scalability can save firms money and time as they grow their operations.

4. Better Decision-Making

AI surfaces insights that make better-informed decisions possible. This includes recognizing patterns, forecasting future results, and understanding consumer behavior.

5. Enhanced Safety

Keeping Web3 apps secure is crucial. Integrating AI adds an extra security layer that helps defend against threats such as data leaks and cyberattacks.

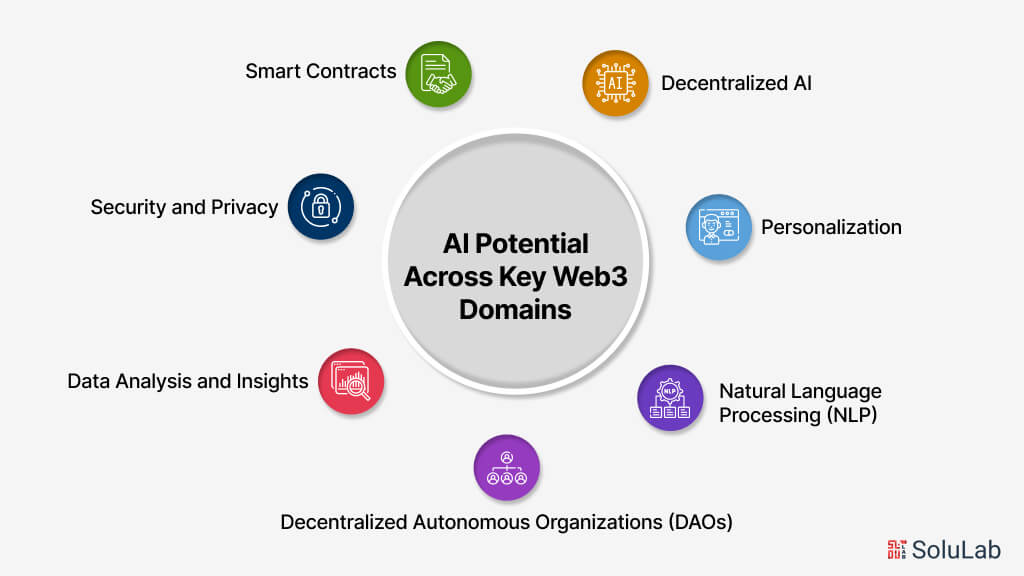

Key Domains in Web3 Where AI Has Potential

The advancement of Web3 and the realization of a more decentralized, safe, and user-focused Internet are both greatly helped by AI. We may anticipate seeing increasingly intelligent, effective, and customized digital experiences as a result of the integration of AI capabilities into different Web3 domains.

Here are several key domains in Web3 where AI can make a big difference:

Smart Contracts

- AI integration for intelligent decision-making: By incorporating AI capabilities into smart contracts, they can go beyond simple execution of predefined rules. AI can analyze data from various sources, such as market trends or user behavior, to make informed decisions within the contract. This could include dynamic pricing based on market conditions or personalized terms based on individual user preferences.

- Automation of complex workflows: AI-powered smart contracts can automate intricate processes involving multiple parties and conditional actions. For instance, in supply chain management, smart contracts could automatically trigger actions such as ordering raw materials or scheduling shipments based on real-time data analysis.

- Optimization and refinement: AI techniques like reinforcement learning can be used to iteratively test and refine smart contract code, ideally before deployment, since deployed contracts are typically hard to change. By identifying inefficiencies or vulnerabilities and suggesting improvements, AI can lead to more robust and efficient contracts.
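To make the dynamic-pricing idea above more concrete, here is a minimal Python sketch. It assumes that a model running off-chain supplies a demand estimate and that the contract only accepts prices inside fixed bounds. `PricingRule`, `dynamic_price`, and all numbers are hypothetical illustrations and do not correspond to any particular smart contract platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PricingRule:
    base_price: float   # price when demand equals supply
    sensitivity: float  # how strongly the price reacts to an imbalance
    min_price: float    # floor the contract would enforce
    max_price: float    # ceiling the contract would enforce


def dynamic_price(rule: PricingRule, demand: float, supply: float) -> float:
    """Scale the base price by the relative demand/supply imbalance, then clamp."""
    if supply <= 0:
        # No supply at all: fall back to the ceiling instead of dividing by zero.
        return rule.max_price
    imbalance = (demand - supply) / supply
    raw = rule.base_price * (1 + rule.sensitivity * imbalance)
    return max(rule.min_price, min(rule.max_price, raw))


rule = PricingRule(base_price=10.0, sensitivity=0.5, min_price=5.0, max_price=20.0)
print(dynamic_price(rule, demand=150, supply=100))   # 12.5
print(dynamic_price(rule, demand=1000, supply=100))  # 20.0 (clamped to the ceiling)
```

Keeping the hard bounds separate from the model-driven estimate is a deliberate choice in this sketch: even if the model output is wrong or manipulated, the price cannot leave the range that the parties agreed to.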

Decentralized Autonomous Organizations (DAOs)

- Enhanced governance and decision-making: AI can automate and streamline decision-making processes within DAOs by analyzing data such as proposals, member preferences, and historical outcomes. This can help identify relevant proposals, predict their impact, and prioritize them for consideration, making the governance process more efficient and transparent.

- Improved transparency and adaptability: AI-driven insights can provide clear justifications for decisions, fostering trust and accountability within DAOs. Additionally, AI can enable DAOs to respond more effectively to changing conditions or emerging challenges by identifying and adapting to shifts in the environment or user behavior.

- Resource allocation optimization: AI can help DAOs manage and allocate resources such as funds or computing power more effectively by analyzing data on project performance, needs, and priorities. This ensures that resources are allocated to maximize impact and effectiveness, driving the success of DAO initiatives.
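The resource allocation idea above can be sketched in a few lines of Python. The example assumes that some scoring model has already assigned each proposal a non-negative impact score; the function `allocate_budget`, the proposal names, and the scores are all hypothetical, and proportional splitting is just one simple policy a DAO might vote to adopt.

```python
from typing import Dict


def allocate_budget(scores: Dict[str, float], budget: float) -> Dict[str, float]:
    """Split `budget` across proposals in proportion to their positive scores."""
    positive = {name: s for name, s in scores.items() if s > 0}
    total = sum(positive.values())
    if total == 0:
        # Nothing scored above zero, so nothing is funded.
        return {name: 0.0 for name in scores}
    return {name: budget * positive.get(name, 0.0) / total for name in scores}


# Made-up impact scores, e.g. produced by a model that ranks proposals.
scores = {"audit": 3.0, "grants": 1.0, "marketing": 0.0}
print(allocate_budget(scores, budget=1000.0))
# {'audit': 750.0, 'grants': 250.0, 'marketing': 0.0}
```

Because the allocation rule is deterministic and easy to inspect, members can verify how a given set of scores led to a given split, which supports the transparency goal described above.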

Decentralized AI

- Distributed model training: AI models can be trained on distributed data sets while maintaining data privacy through techniques like federated learning. This allows for the development of AI systems without centralizing sensitive data, enhancing privacy and security.

- Collaborative model development: Secure multi-party computation and homomorphic encryption enable multiple parties to collaborate on AI model development without sharing raw data. This fosters collaboration while protecting sensitive information.

- Incentive mechanisms: Decentralized AI systems can incentivize data sharing, model training, and resource utilization through token rewards, encouraging participation and contribution from network participants.
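The aggregation step at the heart of federated learning can be sketched as follows. Each participant trains locally and shares only its model weights and the number of samples it trained on; the coordinator combines them with a sample-weighted average (often called federated averaging). The function name and the example values are hypothetical, and local training, secure aggregation, and token rewards are left out of this sketch.

```python
from typing import List, Sequence, Tuple


def federated_average(
    updates: Sequence[Tuple[Sequence[float], int]]
) -> List[float]:
    """Combine (weights, sample_count) pairs into one weight vector.

    Raw training data never appears here: only model weights are shared.
    """
    if not updates:
        raise ValueError("no updates to aggregate")
    total = sum(count for _, count in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for weights, count in updates:
        if len(weights) != dim:
            raise ValueError("all weight vectors must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * count / total
    return averaged


# Two hypothetical participants with 100 and 300 local samples.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
print(federated_average(updates))  # [2.5, 3.5]
```

Weighting by sample count means that a participant with more data has proportionally more influence on the shared model, which is the usual default but not the only possible choice.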

Personalization

- AI-driven recommendations: By analyzing user data, AI algorithms can generate personalized recommendations for content, products, or services. This enhances user engagement and satisfaction by delivering relevant and tailored experiences.

- Context-aware communication: Natural language processing (NLP) techniques enable Web3 applications to understand and respond to user queries or commands in natural language. This improves user interactions and facilitates more intuitive interfaces.

- Automated content generation: AI can automate the creation of personalized content such as news articles or product recommendations, reducing the need for manual curation and enhancing scalability.
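A very small version of the recommendation idea above is shown below. It assumes that each user and each item has already been turned into a numeric interest vector (here with three made-up dimensions for DeFi, gaming, and NFTs) and ranks items by cosine similarity to the user. All names and vectors are hypothetical; production systems typically learn such vectors from interaction data.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(
    user: Sequence[float], items: Dict[str, List[float]], top_k: int = 2
) -> List[str]:
    """Return the names of the `top_k` items most similar to the user vector."""
    ranked = sorted(items, key=lambda name: cosine(user, items[name]), reverse=True)
    return ranked[:top_k]


# Interest dimensions (assumed for illustration): [DeFi, gaming, NFTs]
user = [1.0, 0.0, 0.5]
items = {
    "lending_dapp": [1.0, 0.0, 0.0],
    "nft_market": [0.0, 0.0, 1.0],
    "game": [0.0, 1.0, 0.0],
    "defi_nft_index": [1.0, 0.0, 1.0],
}
print(recommend(user, items))  # ['defi_nft_index', 'lending_dapp']
```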

Natural Language Processing (NLP)

- Seamless communication: NLP enables Web3 applications to interpret and respond to user queries or commands in natural language, making interactions more intuitive and user-friendly.

- Contextual understanding: AI-powered NLP can analyze the context and sentiment behind user-generated content, allowing for more personalized and relevant interactions.

- Automated content generation: NLP techniques can automate the generation of human-readable content, reducing the burden of manual content creation and curation.
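To show what turning a natural-language command into a structured request might look like, here is a deliberately simple Python sketch. It uses a regular expression as a stand-in for a real language model, so it only understands one phrasing pattern. The function `parse_transfer` and the shape of the returned dictionary are assumptions made for illustration.

```python
import re
from typing import Dict, Optional

# Matches phrases such as "send 0.5 ETH to alice.eth" or "Please transfer 10 dai to bob".
_TRANSFER = re.compile(
    r"^\s*(?:please\s+)?(?:send|transfer)\s+"
    r"(?P<amount>\d+(?:\.\d+)?)\s+"
    r"(?P<token>[A-Za-z]{2,10})\s+to\s+"
    r"(?P<recipient>\S+)\s*$",
    re.IGNORECASE,
)


def parse_transfer(text: str) -> Optional[Dict[str, object]]:
    """Return a structured transfer intent, or None if the text does not match."""
    match = _TRANSFER.match(text)
    if match is None:
        return None
    return {
        "action": "transfer",
        "amount": float(match.group("amount")),
        "token": match.group("token").upper(),
        "recipient": match.group("recipient"),
    }


print(parse_transfer("Please send 0.5 eth to alice.eth"))
# {'action': 'transfer', 'amount': 0.5, 'token': 'ETH', 'recipient': 'alice.eth'}
print(parse_transfer("What is my balance?"))  # None
```

Whatever produces the structured intent, a wallet or dapp would still be expected to show it to the user for confirmation before signing anything.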

Data Analysis and Insights

- Uncovering patterns and trends: AI-driven data analysis can uncover valuable insights from decentralized data sets, informing the development and optimization of Web3 applications and services.

- Optimization and innovation: Actionable insights generated by AI can drive optimization and innovation within Web3, identifying opportunities for improvement or new market trends.

- Enhancing security: AI-powered threat detection can proactively identify and address potential vulnerabilities or malicious activities, enhancing the security and trustworthiness of Web3 platforms and applications.
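As one simple example of the threat detection described above, the sketch below flags transactions whose amounts are far from the typical value, using the modified z-score based on the median absolute deviation. This is a classical statistical technique rather than a trained model, and the function name, threshold, and sample amounts are illustrative assumptions.

```python
import statistics
from typing import List, Sequence


def flag_outliers(amounts: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return the indices of amounts whose modified z-score exceeds `threshold`."""
    if not amounts:
        return []
    median = statistics.median(amounts)
    deviations = [abs(a - median) for a in amounts]
    mad = statistics.median(deviations)
    if mad == 0:
        # At least half of the values are identical, so the score is undefined.
        return []
    return [
        i
        for i, dev in enumerate(deviations)
        if 0.6745 * dev / mad > threshold
    ]


# Made-up transfer amounts; the sixth one is far larger than the rest.
amounts = [1.0, 1.2, 0.9, 1.1, 1.0, 250.0, 1.3]
print(flag_outliers(amounts))  # [5]
```

The median-based score is used here instead of the mean and standard deviation because a single very large transfer would otherwise inflate the baseline it is being compared against.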

Security and Privacy

- AI-powered threat detection: AI can monitor and analyze data to detect and prevent cyber threats, ensuring the integrity and security of Web3 platforms.

- Robust authentication methods: AI techniques like biometric recognition and behavioral analysis can enhance authentication processes, making them more secure and resistant to fraud.

- Advanced encryption and anonymization: AI-driven encryption and anonymization techniques protect user data, ensuring privacy and confidentiality in decentralized environments.

Why Does Web3 Adopt ML Technologies Top-Down?

The adoption of machine learning in Web3 follows a top-down approach, mainly because of the intricate nature of the underlying infrastructure and the expertise needed to integrate ML solutions with decentralized systems. Under this approach, ML technologies are first developed and implemented by experts and organizations with a deep understanding of Web3, and only later adopted more broadly by users.

1. Complex Technical Integration: Integrating ML technologies into Web3 platforms demands a comprehensive understanding of both decentralized infrastructure and ML algorithms. The intricate nature of underlying systems like blockchain, smart contracts, and decentralized applications necessitates expertise for the seamless integration of ML solutions.

2. Prioritizing Security and Privacy: Web3 emphasizes secure and privacy-preserving solutions. Careful integration of ML technologies is crucial to uphold these principles. Top-down adoption allows experts and organizations with a nuanced understanding of security and privacy concerns to design and implement ML solutions that align with Web3’s core values.

3. Emphasis on Standardization and Interoperability: Effective adoption of ML technologies across Web3 platforms requires standardization and interoperability. Top-down adoption facilitates the development of common frameworks, protocols, and standards, fostering easier integration of ML solutions into the Web3 ecosystem and reducing fragmentation.

4. Addressing Scalability and Performance Challenges: Implementing ML technologies within Web3 necessitates tackling challenges related to scalability and performance inherent to decentralized systems. Top-down adoption ensures that ML solutions are designed and optimized to overcome these challenges, resulting in more efficient and scalable implementations.

5. Facilitating Ecosystem Growth and Maturity: As the Web3 ecosystem continues to evolve, a top-down approach allows for the gradual adoption of ML technologies in alignment with the ecosystem’s growth and needs. This approach ensures that ML technologies are introduced in a manner that supports the maturation and expansion of the Web3 community.

What Challenges Does AI Face?

In order for artificial intelligence to be widely used and optimized, a number of issues must be resolved. Among the principal challenges are:

1. Ethical Issues: Robust rules and regulations are necessary because the combination of AI and Web3 raises ethical concerns about privacy, bias, accountability, and possible job displacement.

2. Availability and Quality of Data: Data is a major component of AI systems, and the representativeness, quality, and accessibility of the data can affect the fairness and accuracy of AI results.

3. Transparency and Interpretability: Because AI models are complex, it can be difficult to understand and explain how AI-driven Web3 systems reach their decisions.

4. Security Risks: Adversarial manipulation and attacks on AI and Web3 systems can endanger data integrity and system operation.

5. Insufficient Domain Expertise: Deep domain knowledge is generally necessary for developing successful AI solutions, although this expertise may be scarce in some fields.

Conclusion

The convergence of Web3 with AI has enormous potential to change many different fields and industries. By combining the strengths of both technologies, we can build AI systems that are more transparent, ethical, and effective, and therefore more beneficial to society.

Blockchain and decentralized networks are examples of Web3 technologies that have the ability to address some of the most critical issues confronting the artificial intelligence industry, from data management to computing and algorithm creation. More cooperation, openness, and incentives are made possible by these technologies, which eventually enhance AI models, data quality, and resource allocation.

SoluLab is a leading AI development company that provides complete solutions to address the intricate problems associated with decentralized technology. By drawing on our combined knowledge of blockchain technology and artificial intelligence, we enable companies to fully utilize AI in Web3 ecosystems. SoluLab provides creative solutions that are customized to meet the unique needs of our clients, whether the goal is to optimize smart contracts through AI-driven decision-making, improve decentralized autonomous organizations (DAOs) with AI-powered governance, or use decentralized AI for machine learning that protects privacy. By prioritizing ethics, transparency, and security, we make sure that our AI-driven Web3 solutions surpass industry norms rather than just meeting them. Reach out to us today to start on the path to AI-powered Web3 development success.

FAQs

1. What is the significance of integrating AI in Web3 development?

Integrating AI in Web3 development brings numerous benefits, including enhanced efficiency, intelligent decision-making, and personalized user experiences. AI can optimize smart contracts, automate complex processes in decentralized organizations, and provide valuable insights from decentralized data sets, ultimately driving innovation and growth in Web3 ecosystems.

2. How does AI address privacy concerns in Web3 development?

AI offers solutions to privacy concerns in Web3 by enabling privacy-preserving techniques such as federated learning and secure multi-party computation. These approaches allow AI models to be trained on distributed data sets without compromising individual data privacy, ensuring that sensitive information remains secure in decentralized environments.

3. What are the challenges of implementing AI in Web3 development?

Challenges of implementing AI in Web3 development include ethical considerations, data quality and availability, interpretability of AI algorithms, security risks, and the need for domain expertise. Overcoming these challenges requires robust frameworks, regulations, and collaboration among stakeholders to ensure responsible and effective integration of AI in Web3.

4. How can AI enhance governance in decentralized autonomous organizations (DAOs)?

AI can enhance governance in DAOs by automating decision-making processes, analyzing proposals and member preferences, and improving transparency and accountability. AI-driven insights enable more efficient and informed decision-making within DAOs, fostering trust among members and stakeholders while promoting adaptability to changing conditions.

5. What role does SoluLab play in AI-powered Web3 development?

SoluLab plays a pivotal role in AI-powered Web3 development by offering comprehensive solutions tailored to the specific needs of businesses. From optimizing smart contracts to enhancing decentralized organizations with AI-driven governance, SoluLab empowers clients to harness the full potential of AI within Web3 ecosystems. With a focus on ethics, transparency, and security, SoluLab ensures that AI-powered Web3 solutions meet and exceed industry standards, driving innovation and success in decentralized technologies.