Artificial intelligence has evolved from being a luxury to a necessity in today’s competitive market. A thorough grasp of the AI technology stack is not just advantageous but required for developing innovative approaches capable of transforming corporate processes. AI has changed how we engage with technology, and the AI tech stack is essential to this shift.

This article goes over each aspect of the AI tech stack, including the parts that allow generative models to produce new data similar to the dataset on which they were trained.

We’ll look at the many components of the AI tech stack and how they interact to develop novel AI solutions. So, let’s get started!

AI Tech Stack Layers

The tech stack for AI is a structural arrangement made up of interconnected layers, each of which plays an important role in ensuring the system’s efficiency and efficacy. Unlike a monolithic design, in which each component is closely connected and entangled, the AI stack’s layered structure promotes flexibility, scalability, and ease of troubleshooting. The fundamental elements of this architecture consist of APIs, machine learning algorithms, user interfaces, processing, storage, and data input. These layers serve as the core foundations that underpin an AI system’s complex network of algorithms, data pipelines, and application interfaces. Let’s go further into what these layers are all about!

1. Application Layer

The Application Layer is the embodiment of the user experience, containing anything from web apps to REST APIs that control data flow across client-side and server-side contexts. This layer is responsible for critical processes such as gathering inputs through GUIs, displaying visualizations on dashboards, and giving insights based on data via API endpoints. React for frontend and Django for backend are frequently used, each picked for its own benefits in activities such as validation of data, user authentication, and API request routing. The Application Layer acts as an entry point, routing user inquiries to the underlying machine-learning models while adhering to strict security measures to ensure data integrity.

2. Model Layer

The Model Layer serves as the engine room for decision-making and data processing. TensorFlow and PyTorch are specialized libraries that provide a diverse toolbox for a variety of machine learning operations including natural language processing, computer vision, and predictive analytics. This area includes feature engineering, model training, and hyperparameter optimization. Various machine learning techniques, ranging from regression models to complex neural networks, are evaluated using performance measures such as accuracy, recall, and F1-score. This layer serves as an intermediary, receiving data from the Application Layer, performing computation-intensive tasks, and then returning the insights to be presented or acted upon.
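To make the evaluation measures mentioned above concrete, here is a minimal sketch that computes accuracy, precision, recall, and F1-score for a binary classifier. It is written in plain Python so that it runs without extra packages; the function name `classification_metrics` and the sample labels are illustrative assumptions, and in a real project a library such as scikit-learn would normally be used instead.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    # Guard every division so empty or one-sided inputs return 0.0.
    accuracy = correct / len(pairs) if pairs else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical ground-truth labels and model predictions.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Precision is included because F1 is the harmonic mean of precision and recall, so it cannot be computed from recall alone.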

3. Infrastructure Layer

The Infrastructure Layer plays an essential role in both model training and inference. This layer allocates and manages computing resources like CPUs, GPUs, and TPUs. Scalability, low latency, and fault tolerance are built in at this level with orchestration technologies such as Kubernetes for container management. On the cloud side, services such as AWS’s EC2 instances and Azure’s AI-specific accelerators may be used to handle the intensive computing. This infrastructure is more than a passive recipient of requests; it is an evolving system designed to allocate resources wisely. Load balancing, data storage solutions, and network latency are all tuned to fit the demands of the layers above, so that limits in processing capability do not become a bottleneck.

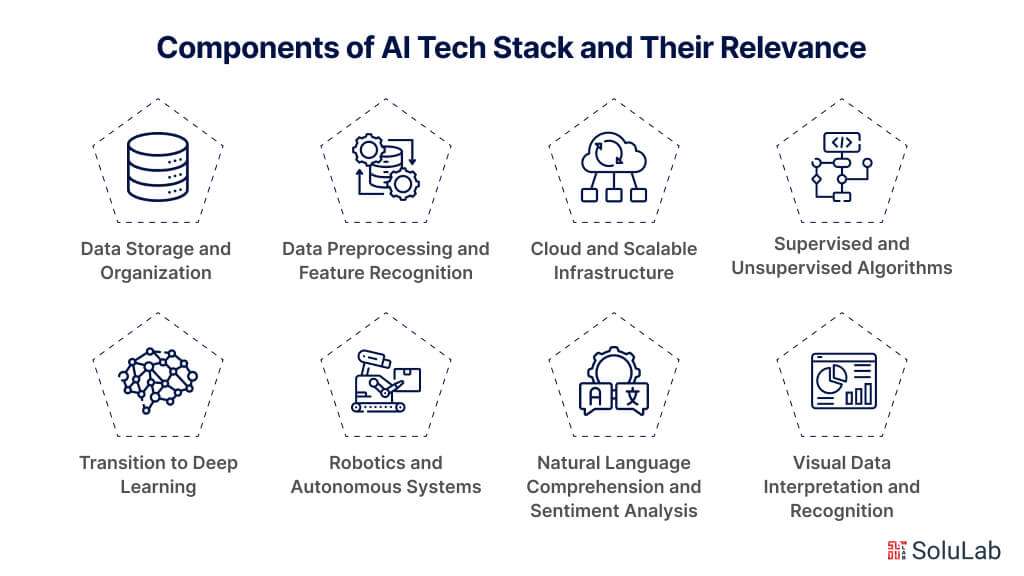

Components of AI Tech Stack and Their Relevance

The architecture of artificial intelligence (AI) solutions consists of numerous modules, each focused on separate tasks yet cohesively interconnected for overall operation. From data input to ultimate application, this complex stack of technologies is crucial to developing AI capabilities. The Gen AI tech stack is made up of the following components:

1. Data Storage and Organization

The first stage in AI processing is to store data securely and efficiently. Storage solutions like SQL databases for structured data and NoSQL databases for unstructured data are critical. For large-scale data, Big Data solutions such as Hadoop’s HDFS and Spark’s in-memory processing are required. The choice of storage directly affects how quickly information can be retrieved, which is critical for real-time data analysis and machine learning.

2. Data Preprocessing and Feature Recognition

Following storage comes the tedious work of data preparation and feature recognition. Normalization, missing value management, and outlier identification are all part of preprocessing, which is done in Python with packages like Scikit-learn and Pandas. Feature recognition is critical for decreasing dimensionality and is carried out utilizing methods such as Principal Component Analysis (PCA) or Feature Importance Ranking. These cleaned and reduced characteristics are used as input for machine learning algorithms, resulting in higher efficiency and precision.
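The preprocessing steps named above (missing value management, outlier identification, and normalization) can be sketched in a few lines. This is a minimal illustration using only Python’s standard library; the function names, the median-imputation choice, the 1.5 × IQR outlier rule, and the sample values are all assumptions made for the example. In practice, Pandas and Scikit-learn provide equivalent, more complete tools.

```python
from statistics import median, quantiles


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def iqr_outlier_flags(values, k=1.5):
    """Flag values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v < low or v > high for v in values]


def min_max_normalize(values):
    """Rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical raw column with one missing value and one extreme value.
raw = [10, 12, None, 13, 12, 95, 11]
filled = impute_median(raw)
flags = iqr_outlier_flags(filled)
kept = [v for v, is_outlier in zip(filled, flags) if not is_outlier]
scaled = min_max_normalize(kept)
print(filled, flags, scaled, sep="\n")
```

The median is used for imputation here because, unlike the mean, it is not pulled toward the extreme value that the next step removes.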

3. Supervised and Unsupervised Algorithms

Once the preprocessed data is available, machine learning methods are applied. Support Vector Machines (SVMs) for classification, Random Forest for ensemble learning, and k-means for clustering all serve distinct functions in data modeling. The algorithm chosen directly affects computing efficiency and predictive accuracy, so it must suit the problem at hand.
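As an example of the unsupervised side, the following is a minimal sketch of k-means clustering (Lloyd’s algorithm) in plain Python. The `kmeans` function, the fixed starting centroids, and the sample points are assumptions chosen to keep the example deterministic; production code would typically rely on a library implementation with a smarter initialization.

```python
import math


def nearest(point, centroids):
    """Index of the centroid closest to the point (Euclidean distance)."""
    return min(range(len(centroids)),
               key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points and recomputing centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            clusters[nearest(p, centroids)].append(p)

        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                # Mean of each coordinate across the cluster's points.
                new_centroids.append(
                    tuple(sum(c) / len(cluster) for c in zip(*cluster)))
            else:
                new_centroids.append(old)  # keep an empty cluster in place

        if new_centroids == centroids:
            break  # assignments are stable
        centroids = new_centroids

    labels = [nearest(p, centroids) for p in points]
    return centroids, labels


# Hypothetical 2-D points forming two visible groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, labels = kmeans(points, [(1.0, 1.0), (8.0, 8.0)])
print(centroids, labels)
```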

4. Transition to Deep Learning

Traditional machine-learning techniques may fall short as computing problems grow more complex. This calls for deep learning frameworks like TensorFlow, PyTorch, or Keras. These frameworks facilitate the design and training of complex neural network architectures such as Convolutional Neural Networks (CNNs) for image recognition and Recurrent Neural Networks (RNNs) for sequential data processing.

5. Natural Language Comprehension and Sentiment Analysis

When it comes to processing human language, Natural Language Processing (NLP) libraries including NLTK and spaCy form the basis. Transformer-based models, such as GPT-4 or BERT, provide deeper comprehension and context recognition for more complex applications, such as sentiment analysis. These NLP tools and models are often added to the AI stack after the deep learning components for applications that need natural language interaction.

6. Visual Data Interpretation and Recognition

In the area of visual data, computer vision technologies like OpenCV are essential. CNNs may be used in advanced applications such as facial recognition and object identification. These computer vision components frequently work together with machine learning techniques to facilitate multi-modal analysis of information.

7. Robotics and Autonomous Systems

Sensor fusion methods are used in physical applications such as robotics and autonomous systems, alongside Simultaneous Localization and Mapping (SLAM) and decision-making algorithms such as Monte Carlo Tree Search (MCTS). These features work in tandem with the machine learning and computer vision components to power the AI’s capacity to interact with its environment.

8. Cloud and Scalable Infrastructure

The complete AI technology stack is frequently run on a cloud-based infrastructure such as AWS, Google Cloud, or Azure. These platforms offer scalable, on-demand computational resources for data storage, processing, and algorithmic execution. The cloud infrastructure serves as an enabler, allowing all of the aforementioned components to operate together smoothly.

AI Tech Stack: A Must for AI Success

The importance of a carefully chosen technological stack in building strong AI systems cannot be overstated. Machine learning frameworks, programming languages, cloud services, and data manipulation utilities all play important roles. The following is a detailed breakdown of these important components.

Machine Learning Frameworks

The construction of AI models needs capable machine-learning frameworks for training and inference. TensorFlow, PyTorch, and Keras are more than just libraries; they are ecosystems that provide a plethora of tools and Application Programming Interfaces (APIs) for developing, optimizing, and validating machine learning models. They also provide a variety of pre-configured models for jobs ranging from natural language processing to computer vision. Such frameworks serve as the foundation of the technological stack, making it possible to tune models for specific metrics like accuracy, recall, and F1 score.

Programming Languages

The choice of programming language affects the balance between user accessibility and model efficiency. Python is often used in machine learning due to its readability and extensive package repositories. While Python is the most popular, R and Julia are also widely used, notably for statistical analysis and high-performance computing work.

Cloud Resources

Generative AI models require significant computational and storage resources. The inclusion of cloud services into the technological stack provides these models with the necessary power. Services like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure provide configurable resources including virtual machines, as well as specific machine learning platforms. The cloud infrastructure’s inherent scalability means that AI systems can adapt to changing workloads without losing performance or causing outages.

Data Manipulation Utilities

Raw data is rarely ready for immediate model training; preprocessing techniques such as normalization, encoding, and imputation are frequently required. Utilities such as Apache Spark and Apache Hadoop provide data processing capabilities that can handle large datasets efficiently. Paired with data visualization tools, they also support exploratory data analysis by helping uncover hidden trends or anomalies within the data.

By systematically choosing and combining these components into a coherent technological stack, one may create not just a functioning, but also an optimal AI system. The resulting system will have increased precision, scalability, and dependability, all of which are required for the fast growth and implementation of AI applications.

The combination of these carefully picked resources creates a technological stack that is not only comprehensive but also important in obtaining the greatest levels of performance in AI systems.

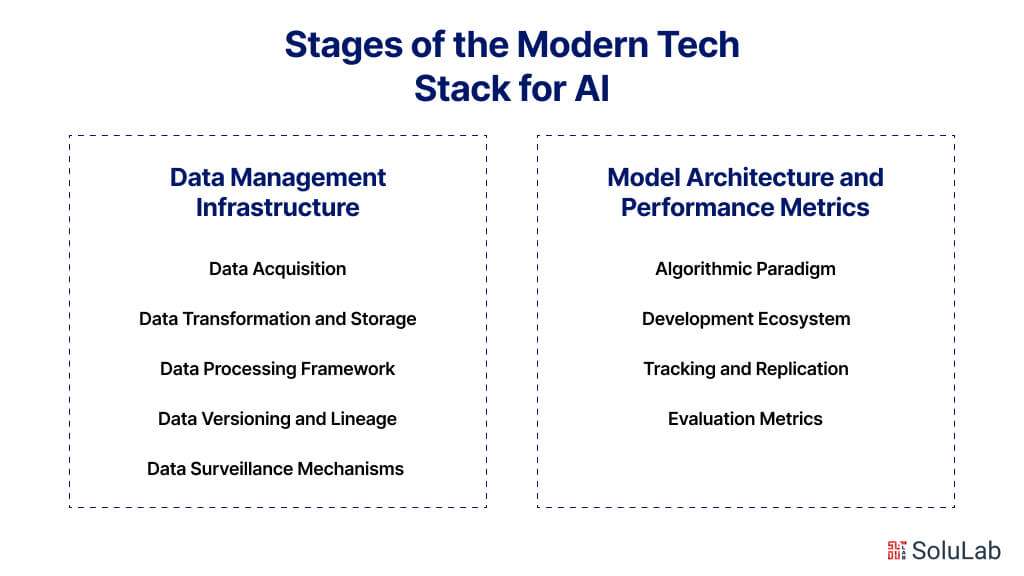

Stages of the Modern Tech Stack for AI

A methodical approach is essential for efficiently building, deploying, and scaling AI systems. This framework serves as the foundation for AI applications, providing a tiered approach to addressing the various issues associated with AI development. It is often separated into phases, each handling a certain element of the AI life cycle, which includes data management, data transformation, and machine learning, among others. Let’s look at each phase to see how important each layer is, as well as the tools and approaches used.

Phase 1: Data Management Infrastructure

Data is the driving force behind machine learning algorithms, analytics, and, eventually, decision-making. Therefore, the first section of our AI tech stack focuses on Data Management Infrastructure, a critical component for gathering, refining, and making data usable. This phase is separated into multiple stages, each focused on a different component of data handling, such as data acquisition, transformation, storage, and data processing frameworks. We will break down each stage to offer a thorough grasp of its mechanics, tools, and relevance.

Stage 1: Data Acquisition

1.1 Data Aggregation

Data collection is a complicated interplay between internal tools and external applications. These various sources combine to provide an actionable dataset for future operations.

1.2 Data Annotation

The collected data goes through a labeling procedure, which is required for supervised machine learning. Software solutions such as V7 Labs and ImgLab have gradually automated this arduous job. Nonetheless, manual verification is still necessary because algorithms have limits in identifying edge cases.

1.3 Synthetic Data Generation

Despite the large amounts of available data, gaps persist, particularly in narrow use cases. As a result, tools like TensorFlow and OpenCV have been used to generate synthetic image data. Libraries like SymPy and Pydbgen are used for symbolic expressions and categorical data. Additional tools, such as Hazy and Datomize, provide integration with other platforms.
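As a small illustration of synthetic tabular data, the sketch below generates fake user records with a seeded random generator so that the output is reproducible. It uses only Python’s standard library rather than any of the tools named above, and the field names (`user_id`, `plan`, `monthly_sessions`), category weights, and distribution parameters are hypothetical.

```python
import random


def synthetic_records(n, seed=0):
    """Generate n fake user records from simple, assumed distributions."""
    rng = random.Random(seed)  # private generator keeps runs reproducible
    plans = ["free", "basic", "premium"]
    weights = [0.6, 0.3, 0.1]
    records = []
    for user_id in range(n):
        records.append({
            "user_id": user_id,
            # Categorical field sampled with fixed class weights.
            "plan": rng.choices(plans, weights=weights, k=1)[0],
            # Numeric field drawn from a normal distribution, floored at 0.
            "monthly_sessions": max(0, round(rng.gauss(20, 5))),
        })
    return records


records = synthetic_records(100, seed=42)
print(records[:3])
```

Real synthetic-data tools go further by fitting these distributions to the original dataset, which this sketch does not attempt.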

Stage 2: Data Transformation and Storage

2.1 Data Transformation Mechanisms

ETL (extract, transform, load) and ELT (extract, load, transform) are two competing approaches to data transformation. ETL emphasizes data refinement by briefly staging data for processing prior to final storage. In contrast, ELT prioritizes practicality, loading the data first and transforming it afterward. Reverse ETL has arisen as a method for synchronizing data storage with end-user interfaces such as CRMs and ERPs, democratizing data across applications.
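The difference in ordering between ETL and ELT can be sketched as follows. This is a toy illustration in plain Python: the in-memory `store` dictionary stands in for a real warehouse, and the `transform`, `run_etl`, and `run_elt` functions and the sample records are assumptions made for the example.

```python
def transform(record):
    """Clean one raw record: tidy the name, cast the amount to float."""
    return {"name": record["name"].strip().title(),
            "amount": float(record["amount"])}


def run_etl(source):
    """ETL: transform in a staging step, then load only refined rows."""
    store = {"raw": [], "clean": []}
    staged = [transform(r) for r in source]
    store["clean"].extend(staged)
    return store


def run_elt(source):
    """ELT: load raw rows first, then transform inside the store."""
    store = {"raw": [], "clean": []}
    store["raw"].extend(source)
    store["clean"] = [transform(r) for r in store["raw"]]
    return store


# Hypothetical source rows with messy formatting.
source = [{"name": "  alice ", "amount": "12.50"},
          {"name": "BOB", "amount": "7"}]
etl_store = run_etl(source)
elt_store = run_elt(source)
print(etl_store)
print(elt_store)
```

Both paths end with the same clean rows; the practical difference is that the ELT store also keeps the raw data, so it can be transformed again later in a different way.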

2.2 Storage Modalities

Data storage techniques vary, with each serving a distinct purpose. Data lakes are excellent at keeping unstructured data, while data warehouses are designed to store highly processed, structured data. An assortment of cloud-based systems, such as Google Cloud Platform and Azure Cloud, provide extensive storage capabilities.

Stage 3: Data Processing Framework

3.1 Analytical Operations

Following acquisition, the data must be processed into a consumable format. This data processing step makes extensive use of libraries such as NumPy and pandas. Apache Spark is an effective tool for managing large amounts of data quickly.

3.2 Feature Handling

Feature stores like Iguazio, Tecton, and Feast are critical components of effective feature management, considerably improving the robustness of feature pipelines among machine-learning systems.

Stage 4: Data Versioning and Lineage

Versioning is crucial for managing data and ensuring repeatability in a dynamic context. DVC is a technology-agnostic tool that smoothly integrates with a variety of storage formats. On the lineage front, systems such as Pachyderm allow data versioning and an extensive representation of data lineage, resulting in a cohesive data story.
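One way to picture versioning and lineage together is to derive a version identifier from a dataset’s content and to record which version each new one was derived from. The sketch below is a simplified illustration of that idea in plain Python; it is not DVC’s or Pachyderm’s API, and the `dataset_version` function, `LineageLog` class, and sample rows are hypothetical.

```python
import hashlib
import json


def dataset_version(rows):
    """Derive a deterministic version id from the dataset's content."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


class LineageLog:
    """Records which dataset version each version was derived from."""

    def __init__(self):
        self.entries = {}

    def record(self, rows, parent=None, step=""):
        version = dataset_version(rows)
        self.entries[version] = {"parent": parent, "step": step}
        return version

    def trace(self, version):
        """Walk back from a version to the original dataset."""
        chain = []
        while version is not None:
            chain.append(version)
            version = self.entries[version]["parent"]
        return chain


# Hypothetical raw data and a cleaned copy with the missing row dropped.
raw = [{"id": 1, "value": 3.2}, {"id": 2, "value": None}]
cleaned = [row for row in raw if row["value"] is not None]

log = LineageLog()
v_raw = log.record(raw, step="ingest")
v_clean = log.record(cleaned, parent=v_raw, step="drop missing values")
print(log.trace(v_clean))
```

Because the identifier depends only on content, rerunning the same pipeline on the same data yields the same version, which is what makes results repeatable.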

Stage 5: Data Surveillance Mechanisms

Censius and other automated monitoring tools help preserve data quality by spotting inconsistencies such as missing values, type clashes, and statistical deviations. Supplementary monitoring technologies, such as Fiddler and Grafana, provide similar functions and go further by also measuring data traffic volumes.
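The three kinds of inconsistency mentioned above (missing values, type clashes, and statistical deviations) can each be checked with very little code. The following is a minimal sketch in plain Python and does not reflect how Censius, Fiddler, or Grafana work internally; the function names, the schema, and the sample data are assumptions.

```python
from statistics import mean, stdev


def validate_batch(rows, schema):
    """Return (row_index, column, problem) for each missing or mistyped value."""
    issues = []
    for index, row in enumerate(rows):
        for column, expected_type in schema.items():
            value = row.get(column)
            if value is None:
                issues.append((index, column, "missing"))
            elif not isinstance(value, expected_type):
                issues.append((index, column, "type mismatch"))
    return issues


def mean_shift(reference, current):
    """Distance between the two means, in reference standard deviations."""
    spread = stdev(reference)
    if spread == 0:
        return 0.0
    return abs(mean(current) - mean(reference)) / spread


# Hypothetical schema and incoming batch.
schema = {"age": int, "country": str}
batch = [
    {"age": 34, "country": "DE"},
    {"age": None, "country": "FR"},   # missing value
    {"age": "41", "country": "US"},   # string where an int is expected
]
issues = validate_batch(batch, schema)

# Hypothetical training-time ages versus ages seen in production.
reference_ages = [30, 32, 34, 36, 38]
current_ages = [50, 52, 54]
shift = mean_shift(reference_ages, current_ages)
print(issues, round(shift, 2))
```

A monitoring job might raise an alert when `issues` is non-empty or when the shift exceeds a chosen threshold, such as three standard deviations.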

Phase 2: Model Architecture and Performance Metrics

Modeling in the field of machine learning and AI is an ongoing procedure that involves repeated development and assessment. It starts after the data has been collected, properly stored, examined, and converted into usable features. Model creation should be approached through the lens of computational constraints, operational conditions, and data security governance rather than merely algorithmic choices.

2.1 Algorithmic Paradigm

Machine learning libraries include TensorFlow, PyTorch, scikit-learn, and MXNet, each having its own selling point: computational speed, versatility, a gentle learning curve, or strong community support. Once a library meets the project criteria, one may begin the normal operations of model selection, parameter tuning, and iterative experimentation.

2.2 Development Ecosystem

The Integrated Development Environment (IDE) facilitates AI and software development. It streamlines the programming workflow by integrating crucial components such as code editors, compilation procedures, debugging tools, and more. PyCharm stands out for its simplicity of handling dependencies and code linking, which ensures the project stays stable even when switching between engineers or teams.

Visual Studio Code (VS Code) is another dependable code editor that works across operating systems and connects with external tools such as PyLint and Node.js. Other environments, such as Jupyter and Spyder, are mostly used during the prototyping phase. MATLAB, a longtime academic favorite, is increasingly gaining traction in business applications for end-to-end code compliance.

2.3 Tracking and Replication

The machine learning technology stack is fundamentally experimental, requiring repeated testing across data subsets, feature engineering choices, and resource allocations to find the best model. The ability to reproduce experiments is critical for retracing the research path and for moving a model into production.

Tools such as MLflow, Neptune, and Weights & Biases make it easier to track experiments rigorously. At the same time, Layer provides a single platform for handling all project metadata. This supports a collaborative environment that can adapt to scale, which is an important consideration for firms looking to launch strong, collaborative machine learning initiatives.
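At its core, experiment tracking means recording the parameters and results of every run so that the best one can be found and reproduced later. The sketch below shows that idea with a tiny in-memory log in plain Python. It does not use or imitate the API of any tool named above; the `ExperimentLog` class, parameter names, and metric values are hypothetical.

```python
import json


class ExperimentLog:
    """Keeps the parameters and metrics of each training run."""

    def __init__(self):
        self.runs = []

    def log_run(self, params, metrics):
        run = {"run_id": len(self.runs) + 1,
               "params": dict(params),
               "metrics": dict(metrics)}
        self.runs.append(run)
        return run["run_id"]

    def best_run(self, metric, higher_is_better=True):
        """Return the run with the best value for the given metric."""
        chooser = max if higher_is_better else min
        return chooser(self.runs, key=lambda run: run["metrics"][metric])

    def to_json(self):
        """Serialize all runs so they can be stored or shared."""
        return json.dumps(self.runs, indent=2, sort_keys=True)


# Hypothetical runs with made-up hyperparameters and scores.
log = ExperimentLog()
log.log_run({"learning_rate": 0.1, "depth": 3}, {"f1": 0.71})
log.log_run({"learning_rate": 0.01, "depth": 5}, {"f1": 0.78})
log.log_run({"learning_rate": 0.001, "depth": 5}, {"f1": 0.74})

best = log.best_run("f1")
print(best["run_id"], best["params"])
```

Dedicated tracking tools add what this sketch leaves out, such as persistent storage, artifact versioning, and dashboards shared across a team.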

2.4 Evaluation Metrics

In machine learning, performance evaluation entails making complex comparisons across many trial outcomes and data categories. Automated technologies like Comet, Evidently AI, and Censius are quite useful in this situation. These tools automate monitoring, allowing data scientists to concentrate on essential goals rather than laborious performance tracking.

These systems offer standard and configurable metric assessments for basic and sophisticated use cases. Linking performance problems to other issues, such as data quality degradation or model drift, is critical for root cause analysis.

Future Trends in the AI Tech Stack

As technological innovation accelerates, bringing in an era of unparalleled possibilities, the future of the Gen AI tech stack appears just as dynamic and transformational. Emerging trends in artificial intelligence are set to transform how we design, create, and deploy AI-powered products, altering sectors and redefining what is possible. From decentralized learning methodologies such as federated learning to the democratization of AI via open-source projects, the environment is rapidly changing, bringing both difficulties and possibilities for organizations and engineers. In this section, we cover some of the most interesting trends positioned to transform the AI tech stack in the coming years, providing insights into the advancements that will drive the next generation of AI-powered innovation.

Emerging Technologies Shaping the Future of AI

- Federated Learning: Federated Learning allows models to be trained across multiple decentralized edge devices without the need to share raw data. This privacy-preserving approach is gaining traction in scenarios where data privacy is paramount, such as healthcare and finance.

- GPT (Generative Pre-trained Transformer) Models: OpenAI’s GPT models have substantially changed the field of natural language processing (NLP). Future advancements may include more sophisticated models capable of understanding context and generating more coherent and contextually relevant responses.

- AutoML (Automated Machine Learning): AutoML platforms are democratizing AI by automating the process of model selection, hyperparameter tuning, and feature engineering. As these platforms become more sophisticated, they will enable non-experts to leverage AI more effectively.
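To make the federated learning item in the list above more concrete, here is a minimal sketch of a simplified form of federated averaging. Each client trains on its own private data, and only the resulting weight and sample count are sent to the server, which combines them into a weighted average. The one-parameter model `y = w * x`, the client datasets, the learning rate, and the function names are all assumptions chosen to keep the example small.

```python
def local_train(w, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private data."""
    for _ in range(epochs):
        # Gradient of the mean squared error for the model y = w * x.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w, clients):
    """Clients train locally; the server averages weights by sample count."""
    updates = [(local_train(global_w, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


# Hypothetical private datasets, all following y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]

w = 0.0
for _ in range(20):
    w = federated_round(w, clients)
print(round(w, 4))
```

Note that the raw `(x, y)` pairs never leave the `local_train` call; the server only ever sees weights, which is the privacy property described in the list.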

Potential Advancements and Innovations

- Interoperability and Standardization: As the AI ecosystem continues to expand, there is a growing need for interoperability and standardization across different components of the Gen AI tech stack. Efforts towards standardizing APIs, data formats, and model architectures will facilitate seamless integration and collaboration.

- Ethical AI and Responsible AI Practices: With increasing concerns around bias, fairness, and transparency in AI systems, there will be a greater emphasis on developing and implementing ethical AI frameworks and responsible AI practices. This includes techniques for bias detection and mitigation, as well as tools for interpreting and explaining AI decisions.

- AI-driven DevOps (AIOps): AI will play an increasingly central role in DevOps processes, with AI-powered tools and techniques automating tasks such as code deployment, monitoring, and troubleshooting. This convergence of AI and DevOps, often referred to as AIOps, will streamline development workflows and improve system reliability.

- Edge AI and Federated Learning: The proliferation of IoT devices and the need for real-time processing will drive the adoption of Edge AI, where AI models are deployed directly on edge devices. Federated Learning will play a crucial role in this context, enabling collaborative learning across distributed edge devices while preserving data privacy.

- Open AI Tech Stack: The concept of an open AI tech stack, comprising open-source tools and platforms, will continue to gain traction. This democratizes access to AI technologies, fosters collaboration and innovation, and accelerates the development and deployment of AI solutions across various domains.

By embracing these emerging technologies and advancements, organizations can stay ahead of the curve and leverage the full potential of the AI tech stack to drive innovation and achieve business objectives.

Conclusion

To summarize, traversing the AI tech stack is like beginning a voyage across wide-open territory of creativity and opportunity. From data collection to model deployment and beyond, each tier of the stack brings unique problems and possibilities, influencing the future of AI-powered solutions across sectors. As we look ahead at developing trends and breakthroughs, it’s apparent that the growth of the AI tech stack will continue to drive significant change, allowing organizations to reach new levels of efficiency, intelligence, and value creation.

If you want to start your AI journey or need expert guidance to navigate the intricacies of the AI tech stack, SoluLab is here to help. As a top AI development company, we provide a team of experienced individuals with knowledge in all aspects of AI technology and processes. Whether you want to hire AI developers to create unique solutions or need strategic advice to connect your AI activities with your business goals, SoluLab is your reliable partner every step of the way. Contact us today to see how we can help you make the most of AI and move your company into the future.

FAQs

1. What is the AI tech stack, and why is it important?

The AI tech stack refers to the collection of technologies, tools, and frameworks used to develop, deploy, and maintain artificial intelligence solutions. It encompasses various layers, including data acquisition, model building, deployment, and monitoring. Understanding the AI tech stack is crucial as it provides a structured framework for building AI applications, streamlining development processes, and ensuring the efficient utilization of resources.

2. What are some common challenges faced when implementing the AI tech stack?

Implementing the AI tech stack can present challenges such as data quality issues, selecting the right algorithms and models, managing scalability and performance, and ensuring ethical and responsible AI practices. Additionally, integrating disparate components of the stack and staying abreast of rapidly evolving technologies can pose further hurdles.

3. How can federated learning revolutionize AI development?

Federated learning enables model training across decentralized edge devices while preserving data privacy. By allowing models to learn from distributed data sources without centralizing sensitive information, federated learning addresses privacy concerns and enables more robust and scalable AI solutions, particularly in sectors like healthcare and finance.

4. What role does SoluLab play in AI development?

SoluLab is a leading AI development company offering comprehensive services to businesses seeking to leverage AI technologies. Our team of skilled AI developers specializes in building custom AI solutions tailored to your specific needs. Whether you require AI consulting, development, deployment, or maintenance services, SoluLab provides end-to-end solutions to help you harness the power of AI and drive business growth.

5. How can I hire AI developers from SoluLab?

Hiring AI developers from SoluLab is a straightforward process. Simply reach out to us through our website or contact us directly to discuss your project requirements. Our team will work closely with you to understand your objectives, assess your needs, and recommend the best-suited AI experts for your project. With SoluLab, you gain access to top-tier talent with deep expertise in AI technologies, ensuring the successful execution of your AI initiatives.