Generative AI, or generative artificial intelligence, is like a clever digital artist. It can produce text, images, and even new ideas on its own. Think of it as a robotic artist that writes, draws, or creates new things after learning from vast amounts of existing data. Why does generative AI matter for cybersecurity? Until recently, many cyber threats were like puzzles with familiar solutions. With the advent of generative AI, the challenge has grown: cybercriminals now have smarter weapons too, which leads to more potent and advanced attacks.

The goal of cybersecurity is to keep our digital world safe, and generative AI gives us both a weapon and a shield. On the one hand, it helps cyber defenders by providing new defenses against online intrusions. On the other hand, there is a catch: cybercriminals can also use generative AI to make their attacks stealthier and more potent.

In this blog, we explore the role of generative AI in cybersecurity, along with the benefits and risks associated with it. So, let's get started!

Understanding the Impact of Generative AI

Generative AI is a field of machine learning in which models are trained to produce new data that resembles the features and patterns of their training data. The technology opens up countless opportunities for advances in problem-solving, creativity, and content production. According to McKinsey, generative AI has the potential to add trillions of dollars per year to the world economy.

But because generative AI relies on large amounts of data, businesses need to be careful about data security and privacy. Large language models (LLMs) and other generative AI models raise privacy problems because of how they work: they can memorize and associate information from their training data. LLMs can memorize large volumes of that data, including sensitive information that might later be exploited or leaked.

Role of Generative AI in Cybersecurity

What role does generative AI play in cybersecurity, then? Attackers can put it to several uses, including:

- Creating Phishing Emails: Cybercriminals can use GenAI to generate realistic phishing emails that trick recipients into clicking dangerous links or divulging personal information.

- Making Fake Websites: Malicious actors can use generative AI to produce fake websites that look real. These sites can trick users into downloading malicious files or divulging personal information.

- Creating Malicious Code: Malicious actors can use generative AI to write code that exploits security holes in computer systems.

Using generative AI in cybersecurity therefore has both drawbacks and benefits. On the downside, it can be used to craft complex attacks that are difficult to counter.

On the upside, generative AI can also power fresh approaches to security that improve attack detection and prevention.

How Generative AI Works

Generative AI grows out of machine learning (ML), a subset of AI. ML uses algorithms that improve automatically by identifying patterns in massive volumes of data. Deep learning, one of ML's many branches, uses layered algorithms called neural networks that are loosely modeled on how neurons in the human brain work. This gives systems the ability to learn and make decisions on their own.

Transformers are a particular kind of neural network architecture used in deep learning. Instead of reading input one element at a time, a transformer uses stacked layers of artificial neurons, built around a mechanism called self-attention, to process the whole input in parallel, which makes training very efficient. The Generative Pre-trained Transformer (GPT) family is one of the best-known examples.

In a nutshell, generative AI involves the following steps:

- The model is trained on an extremely large dataset.

- The model learns the fundamental structures and patterns in that data.

- The model then generates new data that replicates the structures and patterns it has learned.
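The three steps above can be illustrated with a deliberately tiny Python sketch. It swaps in a word-level bigram model, a far simpler technique than the transformer models described above, so treat it only as an analogy: "training" counts which word follows which, and "generation" samples new sentences from those learned transitions. The function names and the toy corpus are hypothetical.

```python
import random
from collections import defaultdict
from typing import Optional

START, END = "<s>", "</s>"  # markers for the beginning and end of a sentence


def train_bigram(corpus: list[str]) -> dict[str, list[str]]:
    """Steps 1-2: scan the training data and record which word follows which."""
    transitions = defaultdict(list)
    for sentence in corpus:
        words = [START] + sentence.split() + [END]
        for current, following in zip(words, words[1:]):
            transitions[current].append(following)
    return dict(transitions)


def generate(transitions: dict[str, list[str]], max_words: int = 20,
             seed: Optional[int] = None) -> str:
    """Step 3: sample new text that follows the learned word-to-word patterns."""
    rng = random.Random(seed)
    word, output = START, []
    for _ in range(max_words):
        # Frequent successors appear more often in the list, so they are picked more often.
        word = rng.choice(transitions[word])
        if word == END:
            break
        output.append(word)
    return " ".join(output)


corpus = [
    "the analyst reviewed the alert",
    "the analyst escalated the incident",
    "the model flagged the alert",
]
model = train_bigram(corpus)
print(generate(model, seed=1))
```

Running it prints a new sentence stitched together from word pairs seen during training. A real LLM learns far richer patterns, but it also generates text by repeatedly sampling the next token from what it learned.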

Benefits and Drawbacks of Generative AI in Cybersecurity

Generative AI offers major benefits in cybersecurity and can help solve many of the problems that cybersecurity experts face today.

- Efficiency: GenAI can make cyber threat detection and response more effective. As an AI-native system learns, it can help security analysts quickly find the information they need to make decisions. This speeds up analysts' workflows, frees them to focus on other projects, and increases their team's output.

- Comprehensive Analysis and Summarization: GenAI can help teams examine data from various modules or sources, letting them quickly and accurately complete laborious, time-consuming analysis that was previously done by hand. GenAI can also produce natural-language summaries of incidents and threat assessments, multiplying team output.

- Proactive Threat Detection: The transition from reactive to proactive cybersecurity is arguably the biggest benefit of GenAI. GenAI enables teams to take preventative measures before a breach happens by warning them about possible risks based on learned patterns.

Even though AI-based cybersecurity has many applications, it is vital to consider the challenges that come with it. Like any other technology, it needs to be handled carefully to reduce risks and potential abuse.

- High Processing Resources: Training GenAI models requires a significant amount of processing power and storage. This can be a barrier for smaller businesses.

- Threat of Attackers Using AI: Open-source models and low-cost, cloud-based services are making GenAI models and related tools increasingly accessible. Just as corporations can use GenAI for cybersecurity, cybercriminals can use it to create complex attacks that are good at evading cybersecurity defenses. Through an expanding ecosystem of GPT-based tools, GenAI is lowering the barrier for new threat actors to launch highly sophisticated attacks.

- Ethical Issues: Ongoing discussions raise ethical questions about data control and privacy, particularly regarding the kinds of data used to train AI models.

How Does Generative AI Enhance Cybersecurity?

Let's examine how GenAI helps security teams protect their enterprises more precisely, effectively, and productively.

1. Supporting Understaffed Security Teams

AI is being used to improve security outcomes and support security staff. The majority of IT leaders (93%) are either exploring or already using AI and ML to improve their security capabilities. These AI adopters have already seen performance gains in reducing false positives and noise, identifying zero-day attacks and threats, and prioritizing Tier 1 threats. Given these early signs of success, more than half of managers (52%) believe that generative AI will help businesses allocate people, resources, capacity, or skills more effectively.

2. Real-time Threat Detection

One of the most popular applications of generative AI today is threat detection. By using it to sift through event alerts more effectively, eliminate false positives, and spot trends and anomalies quickly, organizations can greatly accelerate their ability to discover new threat vectors.
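The "spot anomalies quickly" part of that workflow can be sketched with a simple statistical baseline in Python. This is not generative AI; it is a z-score check of the kind a detection pipeline might run before an AI model or an analyst triages the results. The function name, the 3-standard-deviation threshold, and the failed-login counts are all hypothetical.

```python
import statistics


def flag_anomalies(history: list[int], recent: list[int], threshold: float = 3.0) -> list[int]:
    """Return the indices of values in `recent` that deviate from the historical
    baseline by more than `threshold` standard deviations (a z-score test)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # sample standard deviation; needs >= 2 points
    if stdev == 0:
        # A perfectly flat baseline: treat any change at all as unusual.
        return [i for i, value in enumerate(recent) if value != mean]
    return [i for i, value in enumerate(recent) if abs(value - mean) / stdev > threshold]


# Hypothetical hourly failed-login counts for one host.
baseline = [12, 15, 11, 14, 13, 12, 16, 14]
latest = [13, 15, 97, 12]
print(flag_anomalies(baseline, latest))  # [2]
```

Only the spike of 97 failed logins is flagged; ordinary hourly variation stays below the threshold, which is how this kind of filtering cuts down on false positives.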

3. Improving the Quality of Threat Intelligence

Generative AI also improves threat intelligence. In the past, analysts had to examine enormous volumes of data with complicated query languages, manual procedures, and reverse engineering to understand risks. Now they can use generative AI models that automatically look for threats in code and network traffic and provide insights that help analysts understand how malicious scripts and other threats behave.

4. Automating Security Patching

Generative AI can automate patch analysis and application. It can apply or recommend suitable fixes using natural language processing (NLP) pattern matching or a machine learning approach called the k-nearest neighbors (KNN) algorithm. Neural networks can also be used to scan codebases for vulnerabilities.
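The KNN approach mentioned above can be sketched in a few lines of Python. This minimal version assumes each past finding is stored with the fix that resolved it, represents findings as bags of words, ranks them by cosine similarity, and lets the k nearest neighbors vote on a fix. The data, the function names, and the bag-of-words representation are illustrative assumptions; real tools would likely use richer features such as CVE identifiers, package versions, or learned embeddings.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    """Represent a finding as a bag of lowercase words."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 = nothing shared)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend_fix(finding: str, history: list[tuple[str, str]], k: int = 3) -> str:
    """Suggest a fix by majority vote among the k most similar past findings."""
    query = vectorize(finding)
    ranked = sorted(history, key=lambda item: cosine(query, vectorize(item[0])), reverse=True)
    votes = Counter(fix for _, fix in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical history of (finding, fix that resolved it) pairs.
past_findings = [
    ("openssl outdated version vulnerable to buffer overflow", "upgrade openssl package"),
    ("openssl library version out of date", "upgrade openssl package"),
    ("ssh server allows password authentication", "disable ssh password login"),
    ("ssh root login permitted", "disable ssh root login"),
    ("openssl heartbeat extension enabled old version", "upgrade openssl package"),
]
print(recommend_fix("outdated openssl version detected on web server", past_findings))
# upgrade openssl package
```

Voting among several neighbors, rather than copying the single closest match, makes the recommendation less sensitive to one oddly worded past finding.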

5. Improving Incident Response

Incident response is an area where generative AI has proven especially useful in cybersecurity. Security analysts can shorten incident response times by using generative AI to generate response plans based on techniques that worked in previous incidents. As events unfold, GenAI can keep learning from them and adjust these response plans accordingly. Organizations can also use generative AI to automate the creation of incident response reports.

Examples of Generative AI in Cybersecurity

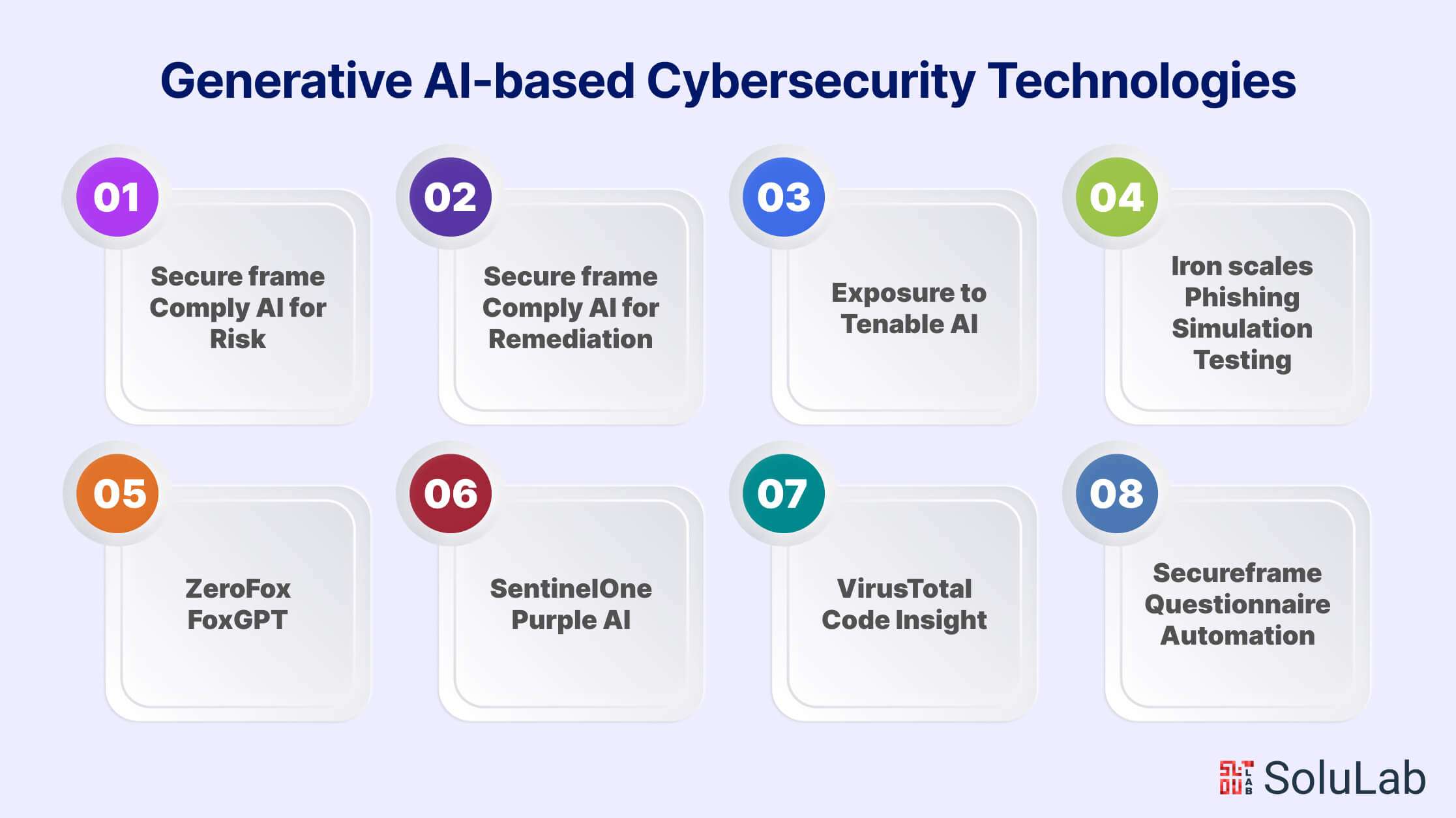

Now that we have covered some broad uses of generative AI in cybersecurity, let's examine a few specific generative AI-based cybersecurity tools.

Secureframe Comply AI for Risk

Secureframe recently introduced Comply AI for Risk to automate risk assessment, saving businesses time and money.

From a risk description and company information, Comply AI for Risk generates comprehensive risk insights: the likelihood and impact of the risk before any response, a treatment plan to address it, and the likelihood and impact of the residual risk that remains after treatment. These outputs help organizations better understand a risk's potential impact and the appropriate mitigation techniques, improving their risk awareness and response.
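The before-and-after view described above is commonly expressed as inherent versus residual risk. The source does not say how Comply AI for Risk scores risk, so the Python sketch below assumes a common likelihood-times-impact matrix on a 1-to-5 scale, with hypothetical rating thresholds, purely to show what the two scores mean.

```python
from dataclasses import dataclass


@dataclass
class RiskScore:
    """Likelihood and impact, each on an assumed 1-5 scale."""
    likelihood: int
    impact: int

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            if not 1 <= getattr(self, name) <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def value(self) -> int:
        # Classic risk matrix: likelihood x impact, from 1 (negligible) to 25 (critical).
        return self.likelihood * self.impact

    @property
    def rating(self) -> str:
        # Hypothetical thresholds; organizations define their own bands.
        if self.value >= 15:
            return "high"
        if self.value >= 8:
            return "medium"
        return "low"


inherent = RiskScore(likelihood=4, impact=5)  # before the treatment plan
residual = RiskScore(likelihood=2, impact=3)  # after the treatment plan
reduction = 1 - residual.value / inherent.value
print(inherent.value, inherent.rating)  # 20 high
print(residual.value, residual.rating)  # 6 low
print(f"risk reduced by {reduction:.0%}")  # risk reduced by 70%
```

Comparing the two scores shows how much a treatment plan is expected to help, which is the kind of judgment the tool's before-and-after outputs are meant to support.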

Secureframe Comply AI for Remediation

Secureframe introduced Comply AI for Remediation to give enterprises a more relevant, accurate, and customized experience for fixing failed tests and shortening time-to-compliance.

Comply AI for Remediation delivers remediation guidance tailored to each user's environment, so users can fix the underlying problem behind a failing configuration. As a result, they can repair failed controls to pass tests, strengthen their overall security and compliance posture, and quickly become audit-ready.

Users can also ask the chatbot follow-up questions to get more detail on the remediation code or more specific advice for their unique security and compliance needs.

Tenable ExposureAI

Tenable introduced ExposureAI to give analysts fresh, in-depth insights and to make exposure management easier. These new generative AI capabilities help analysts search, analyze, and make decisions about exposures faster by:

- Enabling analysts to search for specific exposure and asset data using natural language queries.

- Providing a written narrative summary of the entire attack path to help analysts better understand exposures.

- Presenting insights into high-risk exposures and suggesting actions to help analysts more easily prioritize and address high-risk exposures.

Ironscales Phishing Simulation Testing

Ironscales introduced GPT-powered Phishing Simulation Testing (PST) as a beta feature. Using Ironscales' proprietary large language model, it creates phishing simulation campaigns tailored to individual employees and to the sophisticated phishing attacks they are likely to encounter.

The goal is to help businesses quickly customize security awareness training to counter the growing sophistication and prevalence of social engineering attacks.

ZeroFox FoxGPT

FoxGPT, a generative AI tool created by ZeroFox, is designed to speed up the analysis and summarization of intelligence across large datasets. Security teams can use it to analyze and contextualize phishing scams, harmful content, and potential account takeovers.

SentinelOne Purple AI

SentinelOne unveiled a threat-hunting platform driven by generative AI. It combines a natural language interface based on a large language model (LLM) with real-time embedding neural networks to help analysts identify, analyze, and mitigate threats faster.

Analysts can ask complex threat-hunting and adversary-hunting questions about their corporate environment in natural language and receive fast, accurate, and detailed answers within seconds. Beyond analyzing threats, Purple AI can offer insights into observed behavior and suggest next steps.

VirusTotal Code Insight

VirusTotal Code Insight uses Sec-PaLM, one of the generative AI models offered on Google Cloud AI, to generate natural language summaries of code snippets. This helps security teams examine and understand the behavior of potentially malicious scripts. VirusTotal Code Insight is designed to be a powerful tool for cybersecurity analysts, supporting them around the clock to improve their productivity and effectiveness.

Secureframe Questionnaire Automation

Responding to security questionnaires can be time-consuming and laborious for security analysts and other stakeholders, because there is no standard structure, question set, or order, and the questions vary from customer to customer.

Secureframe's Questionnaire Automation uses generative AI to automate and simplify this process. To improve accuracy, the tool suggests answers based on previously approved responses and on context from the Secureframe platform. After quickly reviewing the suggested answers and making any necessary changes, users can return completed questionnaires to prospects and customers in the same format in which they were received.

The Hazards That Make Cybersecurity For Generative AI Essential

Generative AI, while promising tremendous advancements in various fields, poses significant cybersecurity risks that cannot be ignored. These risks stem from the potential misuse of AI-generated content for malicious purposes, such as deepfakes, fake news dissemination, and phishing attacks. As generative AI algorithms become more sophisticated, the need for robust cybersecurity measures becomes increasingly imperative to safeguard against potential threats to privacy, security, and societal trust.

Here are some of the key security hazards associated with generative AI.

1. Data Overflow: Generative AI services often let users enter many kinds of data, including private and sensitive information. This raises concerns about the possible disclosure of private customer information or intellectual property, which is why generative AI cybersecurity controls and protections must be put in place.

2. IP Leak: Because web-based generative AI tools are so user-friendly, employees may use them outside approved channels (shadow IT), sending and processing data online and increasing the risk of intellectual property (IP) leakage and confidentiality violations. Techniques such as virtual private networks (VPNs) can add a degree of protection by masking network addresses and encrypting data in transit, although they do not stop sensitive data from reaching the AI service itself.

3. Data Training: Training generative AI models requires large volumes of data, and privacy concerns can arise if this data is not handled properly. It is imperative to ensure that confidential information is not inadvertently disclosed, which would violate privacy laws.

4. Data Storage: As generative AI models improve with additional input, businesses must store this data securely. If private company information is kept in unprotected third-party storage, it may be misused or leaked. To prevent breaches, it is essential to implement a thorough data strategy that includes access restrictions and encryption.

5. Compliance: Generative AI services commonly send data to third-party providers. If this data contains personally identifiable information (PII), compliance problems can arise, requiring adherence to data protection laws such as the GDPR or CPRA.

6. Synthetic Data: Generative AI can produce synthetic data that closely mimics real data, which may make it possible to identify specific people or sensitive characteristics. Great care must be taken to minimize the risk that individuals can be re-identified from synthetic data.

7. Unintentional Leaks: Generative models may inadvertently include information from their training data that should have stayed private. This underscores the importance of carefully reviewing and validating generative AI outputs, since they may contain private or sensitive company information.

8. Hostile Attacks and AI Misuse: Hostile actors can use generative AI to produce deepfakes and misleading content, helping spread misinformation and fake news.
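Hazard 6 can be checked, at least roughly, before synthetic data is shared. One standard privacy metric, swapped in here because the source does not name a method, is k-anonymity: group records by quasi-identifiers such as ZIP code and age band, and find the size of the smallest group. A k of 1 means some record is unique and could point back to a real person. The Python sketch below uses hypothetical column names and rows.

```python
from collections import Counter


def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Return k, the size of the smallest group of records that share the same
    quasi-identifier values. k == 1 means at least one record is unique."""
    if not records:
        raise ValueError("need at least one record")
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


# Hypothetical synthetic employee records produced by a generative model.
synthetic = [
    {"zip": "94107", "age_band": "30-39", "role": "engineer"},
    {"zip": "94107", "age_band": "30-39", "role": "engineer"},
    {"zip": "94107", "age_band": "40-49", "role": "cfo"},
    {"zip": "10001", "age_band": "20-29", "role": "analyst"},
    {"zip": "10001", "age_band": "20-29", "role": "analyst"},
]
print(k_anonymity(synthetic, ["zip", "age_band", "role"]))  # 1: the CFO row is unique
print(k_anonymity(synthetic, ["zip"]))  # 2
```

A low k is a signal to generalize or drop columns before release. k-anonymity alone does not prove synthetic data is safe (for example, it says nothing about how closely a synthetic row copies a real one), so treat it as one check among several.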

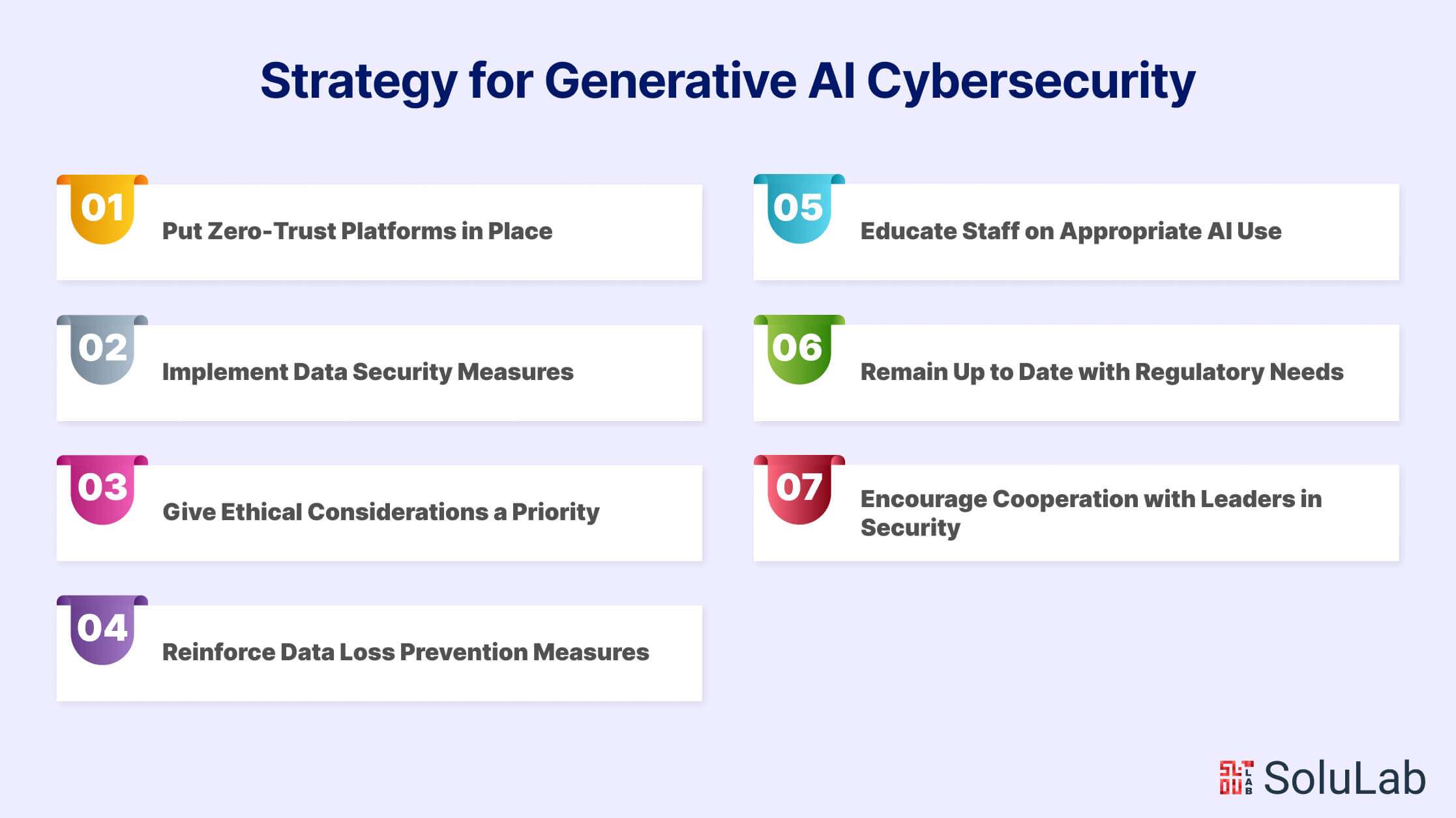

Reducing Hazards: A Proactive Strategy for Generative AI Cybersecurity

To fully benefit from generative AI, companies need to take a proactive, comprehensive approach to generative AI cybersecurity. The following are some crucial methods for reducing risk:

Put Zero-Trust Platforms in Place

The complex cyber threats linked to generative AI may be too advanced for standard antivirus software to handle. Anomaly detection-based zero-trust systems can improve threat identification and mitigation, reducing the likelihood of cybersecurity breaches.

Implement Data Security Measures

Security controls must be built into model-development processes to reduce risk. Companies must budget enough to ensure that their models meet the strictest security requirements. Data governance frameworks should also be put in place to manage AI initiatives, tools, and teams while reducing risk and ensuring adherence to industry standards.

Give Ethical Considerations a Priority

Organizations using generative AI need to prioritize ethical issues in their operations to reduce bias and ensure the technology is used ethically. Ignoring ethical issues can lead to unintentionally biased data, which can produce discriminatory AI products.

Reinforce Data Loss Prevention Measures

To properly secure digital assets, organizations need stronger data loss prevention (DLP) policies at endpoints and perimeters. Encryption and access restrictions, combined with routine audits and risk assessments, help prevent unauthorized access and data breaches.
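One practical DLP control for the Data Overflow and Compliance hazards described earlier is redacting sensitive values before a prompt ever leaves the organization for an external generative AI service. The Python sketch below uses a few deliberately simple, hypothetical regular expressions; real DLP products rely on much broader, tested detectors, so treat this as an illustration of the idea only.

```python
import re

# Hypothetical, simplified detectors; real DLP policies need far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),  # 16-digit cards only
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace sensitive matches with placeholders and count what was removed."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts


prompt = "Summarize the ticket from jane.doe@example.com, SSN 123-45-6789, card 4111 1111 1111 1111."
safe_prompt, found = redact(prompt)
print(safe_prompt)
# Summarize the ticket from [EMAIL], SSN [US_SSN], card [CARD].
print(found)  # {'EMAIL': 1, 'US_SSN': 1, 'CARD': 1}
```

The placeholders keep the prompt readable for the model, and the per-category counts could feed an audit log.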

Educate Staff on Appropriate AI Use

Workers are essential to ensuring the ethical use of generative AI and advancing generative AI cybersecurity. Offering cybersecurity courses and training on the appropriate, safe use of AI technology can improve employees' understanding of the risks and possible effects on data security and privacy. Giving staff the tools they need to critically assess generative AI outputs and follow best practices can considerably reduce risk.

Remain Up to Date with Regulatory Needs

Generative AI is subject to data protection and privacy laws and regulations. Companies need to stay aware of the latest requirements, including the CPRA, the GDPR, and industry-specific standards. Complying with them is essential to avoid penalties such as fines.

Encourage Cooperation with Leaders in Security

By working closely with security executives, organizations can successfully handle the cybersecurity concerns related to generative AI. Through proactive efforts such as risk identification, mitigation strategy development, and corporate policy enforcement, businesses can strengthen generative AI cybersecurity and safeguard data privacy and security.

Final Words

Generative AI opens up vast prospects for innovation and advancement across sectors. However, enterprises must not underestimate the importance of cybersecurity and data privacy. Organizations can benefit from generative AI while limiting possible hazards by taking a proactive approach to cybersecurity, implementing strong controls, and addressing ethical issues. Staying compliant with legislation, educating personnel, and developing partnerships with security professionals are all critical steps toward the responsible and secure use of generative AI in the digital age.

SoluLab, a generative AI development company, provides modern generative AI services to support cybersecurity efforts with unique solutions. Our team of skilled AI developers harnesses the power of advanced algorithms to develop robust systems capable of detecting and mitigating emerging threats, including deepfakes and AI-generated cyberattacks. With SoluLab, organizations can hire expert AI developers to create tailored cybersecurity solutions that safeguard digital assets and enhance resilience against evolving cyber threats. Take a proactive step in securing your digital infrastructure today by partnering with SoluLab for your generative AI cybersecurity needs.

FAQs

1. What is generative AI, and how does it relate to cybersecurity?

Generative AI refers to a subset of Artificial Intelligence that focuses on generating new content, such as images, text, or even videos, that mimic real data. In cybersecurity, generative AI is crucial for detecting and combating emerging threats like deepfakes and AI-generated malware, as it can help in creating robust defense mechanisms against these evolving cyber risks.

2. How does generative AI enhance traditional cybersecurity measures?

Generative AI adds an extra layer of protection by leveraging advanced algorithms to identify patterns and anomalies in large datasets more efficiently than traditional methods. This allows faster detection of cyber threats and enables cybersecurity professionals to proactively address potential vulnerabilities before they are exploited.

3. What are some potential risks associated with the use of generative AI in cybersecurity?

While generative AI offers significant benefits, its misuse can lead to the creation of sophisticated cyber threats, such as convincing deepfake videos or AI-generated phishing emails. Additionally, there are concerns about the ethical implications of using AI to create deceptive content and the potential for AI systems to be manipulated or biased.

4. How can organizations leverage generative AI in their cybersecurity strategies?

Organizations can integrate generative AI into their cybersecurity frameworks by implementing AI-powered threat detection systems, deploying AI-driven authentication mechanisms, and utilizing AI-generated simulations to test the resilience of their networks against cyberattacks.

5. How does SoluLab contribute to generative AI in cybersecurity?

SoluLab offers comprehensive generative AI services that empower organizations to strengthen their cybersecurity posture. By leveraging its expertise in AI development, SoluLab helps businesses deploy advanced algorithms tailored to detect and mitigate emerging cyber threats. With a team of skilled AI developers, SoluLab enables organizations to stay ahead of cyber threats and safeguard their digital assets effectively.